Neuroscience Papers Set 7

By Brian Pho ⋅ May 20, 2022 ⋅ 89 min read ⋅ Papers

The Search for True Numbers of Neurons and Glial Cells in the Human Brain: A Review of 150 Years of Cell Counting

- For the past 50 years, we’ve believed that the human brain consists of about 100 billion neurons and one trillion glia, a glia-to-neuron ratio of ten to one (ten glia for each neuron).

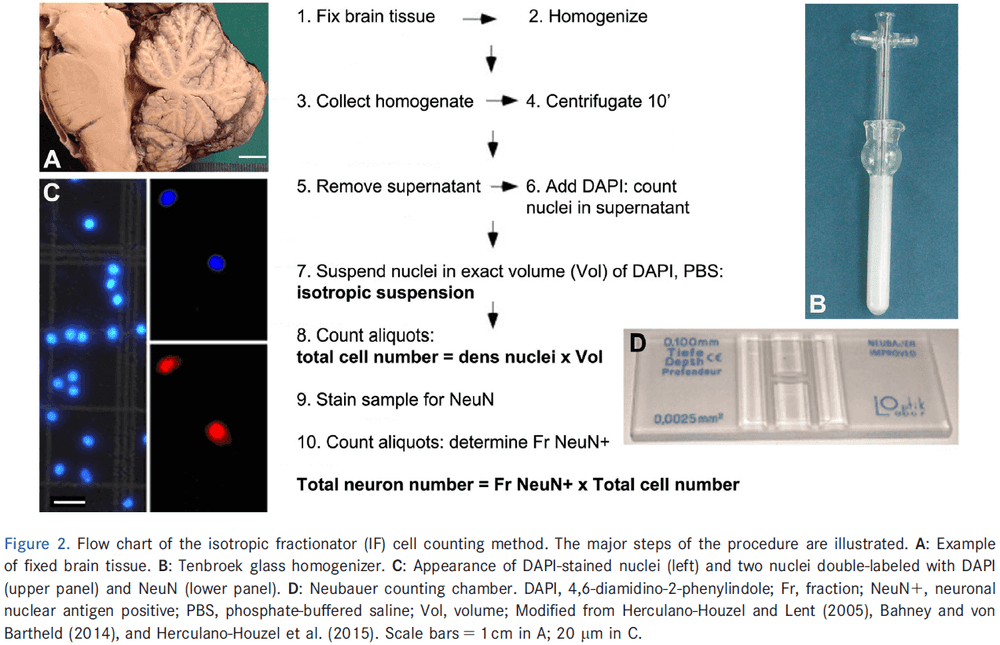

- A new counting method, the isotropic fractionator, has challenged this number and ratio.

- The isotropic fractionator finds a glia-to-neuron ratio of less than 1:1 and fewer than 100 billion glia in the human brain.

- A survey of original evidence shows that histological data always supported a 1:1 ratio of glia to neurons in the entire human brain.

- This paper includes a brief history of cell counting in human brains, types of counting methods, ranges of previous estimates, and the current state of knowledge about neuronal and glial cell numbers.

- Recent studies find that the cellular composition of the human brain is very different from what was believed and taught for almost half a century.

- Cell counting in the human brain has had a complex history, in part because cells can be quantified and reported in three different ways.

- I.e. total neuron numbers, total glia numbers, and the glia-to-neuron ratio (GNR).

- Brain cell counting can be roughly divided into three historical phases.

- The first phase was collecting data only for parts of the human brain, specifically the cerebral cortex.

- The second phase consisted of serious estimates of total numbers for both glia and neurons.

- Even though these cell density-based estimates supported a total GNR of about 1:1, this was either not recognized or not communicated properly, as major textbooks and reviews cited GNRs of 10:1 or 50:1.

- This would remain unchallenged from the 1960s until 2009.

- The third and most recent phase began with the 2009 study by Azevedo et al., which revealed the discrepancy between textbook knowledge and the published literature.

- Surprisingly, the idea of one trillion glia wasn’t due to technical limitations or counting methods, but to the failure to notice that the published numbers for all three components (neuron counts, glia counts, and GNR) contradicted each other.

- In cell counting, the unit being counted is the cell body with its nucleus.

- For glia, most studies combine astrocyte, oligodendrocyte, and microglia counts.

- Three types of cell counting methods

- Model-based or design-based counting of stained cells, nuclei, or nucleoli (histology/stereology).

- Tissues are fixed, usually in a formaldehyde-based fixative, embedded in a suitable medium, sectioned into thin slices, stained with a dye, and cells counted under a microscope.

- Model-based approaches rely on analyzing thin sections and extrapolating, while design-based approaches use thicker sections and apply a random sampling scheme to count.

- Both methods can result in inaccuracies due to assumptions so they should be calibrated against the ultimate standard: 3D serial section reconstructions of an entire region or sample.

- Major challenges: ensure samples are truly representative of the reference volume, prevent double counting of particles that appear in multiple sections, account for shrinkage due to age and tissue changes, distinguish between neurons and glia.

- The importance of counting absolute cell numbers rather than cell densities was underscored by the finding that tissues shrink differently with age.

- E.g. Neglecting this finding led to the false belief that neuron numbers decline steadily and significantly during normal aging.

- This type of cell counting is valuable for well-defined regions but has limitations with larger tissues of heterogeneous composition.

- DNA extraction and measurement of total DNA content.

- Extracts and measures DNA content to calculate cell numbers based on knowledge of DNA content per cell nucleus.

- Major challenges: complete recovery of DNA required, contamination with other nucleic acids, not all cells are euploid meaning their DNA content can vary.

- This technique was mostly used in the 1950s-70s to obtain relative comparisons rather than absolute numbers.
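The DNA method reduces to a single division, sketched below in Python. I'm assuming roughly 6.5 pg of DNA per diploid human nucleus, which is an approximate value. The DNA yield and polyploid fraction are made-up inputs. The `polyploid_fraction` parameter (a hypothetical knob) shows how non-euploid cells bias the count.

```python
# Minimal sketch of the DNA method: cells = total DNA / DNA per nucleus.
# Assumption: a diploid human nucleus holds roughly 6.5 pg of DNA (approximate value).
DIPLOID_DNA_PG = 6.5


def cells_from_dna(total_dna_mg: float, polyploid_fraction: float = 0.0,
                   ploidy_multiple: float = 2.0) -> float:
    """Estimate the number of nuclei from the total DNA recovered from a sample.

    Assumes complete DNA recovery and no contamination by other nucleic acids.
    polyploid_fraction: hypothetical share of nuclei that carry ploidy_multiple
    times the diploid amount (2.0 = tetraploid); 0.0 treats every nucleus as diploid.
    """
    total_dna_pg = total_dna_mg * 1e9  # 1 mg = 1e9 pg
    mean_pg_per_nucleus = DIPLOID_DNA_PG * (
        (1 - polyploid_fraction) + polyploid_fraction * ploidy_multiple)
    return total_dna_pg / mean_pg_per_nucleus


naive = cells_from_dna(650.0)                             # hypothetical DNA yield in mg
adjusted = cells_from_dna(650.0, polyploid_fraction=0.1)  # if 10% of nuclei were tetraploid
print(f"all diploid: {naive:.3g} cells; with 10% tetraploid: {adjusted:.3g} cells")
```

Treating every nucleus as diploid overestimates the cell number whenever some nuclei carry extra DNA.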

- Direct enumeration of cells in homogenized brain tissue (isotropic fractionator).

- Dissected chilled tissue was weighed, homogenized by mixing, diluted in a known volume of medium, stained with methylene blue, mixed, and then aliquots of the diluted medium were counted on a hemocytometer.

- Major challenges: rapid degradation of unfixed cells, lack of distinction between cell types, rupturing of cells during mechanical disintegration (mixing).

- This method is easy, fast, and accurate, generating estimates of cell numbers independent of tissue volume or cell density.
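Here's a minimal sketch of the arithmetic behind counting nuclei in a homogenate. It assumes a Neubauer-type hemocytometer in which each large square holds 0.1 µL. The counts, dilution, suspension volume, and neuronal fraction are hypothetical. The neuronal fraction is assumed to be measured separately, e.g. with NeuN immunostaining. The total comes only from nuclei per unit volume of a suspension of known volume, so nothing in the calculation depends on the original tissue volume or cell density.

```python
from statistics import mean

# Assumption: Neubauer-type chamber where each large square holds 0.1 uL (1e-4 mL).
SQUARE_VOLUME_ML = 1e-4


def total_nuclei(counts_per_square: list[int], suspension_ml: float,
                 dilution_factor: float = 1.0) -> float:
    """Scale the mean nuclei per counted square up to the whole suspension."""
    density_per_ml = mean(counts_per_square) / SQUARE_VOLUME_ML * dilution_factor
    return density_per_ml * suspension_ml


def split_by_neuron_fraction(total: float, neuron_fraction: float) -> tuple[float, float]:
    """Split a total into (neurons, non-neurons); the fraction is measured separately."""
    neurons = total * neuron_fraction
    return neurons, total - neurons


counts = [52, 47, 55, 49, 50, 47]  # hypothetical counts from six squares
n_total = total_nuclei(counts, suspension_ml=20.0, dilution_factor=10.0)
neurons, others = split_by_neuron_fraction(n_total, neuron_fraction=0.4)
print(f"total {n_total:.3g}, neurons {neurons:.3g}, non-neuronal {others:.3g}")
```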

- Studies indicate equivalency between the isotropic fractionator (IF) and stereology.

- Considering the results obtained from different investigators using different methods, most of the data are relatively consistent.

- E.g. The majority of studies estimated total neuron numbers for the entire cerebral cortex to be between 10 and 20 billion.

- Since the cerebral cortex takes up 80-85% of the adult human brain volume, it was often equated with the whole brain, leading to oversimplification and the myth of one trillion glia.

- Three major components of the human brain

- Cerebral cortex (80-85% volume)

- There’s been confusion about whether cerebral cortex means only gray matter or gray and white matter.

- The majority of studies excluded white matter.

- The number of neurons in white matter is relatively small so excluding it doesn’t make a significant difference for total cell numbers.

- Some studies were ambiguous about whether their estimates applied to one or both hemispheres.

- Another source of error is the assumption that counting only 100-200 neurons is sufficient for extrapolation. Recent computer simulations suggest that considerably more neurons should be counted.

- The majority of histology-derived estimates converge at 10-20 billion neurons, consistent with the IF.
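Why might 100 to 200 counted neurons be too few? The sketch below treats each count as a Poisson draw. That gives the smallest possible sampling error, a coefficient of error (CE) of about 1/√n for n counted cells. Regional heterogeneity in real tissue only adds to it. This is my simplification, not the simulations mentioned above. The replicate count and seed are arbitrary.

```python
import math
import random
import statistics


def poisson_count(rng: random.Random, expected: float) -> int:
    """Poisson draw: count unit-rate exponential arrivals that fall before `expected`."""
    count, t = 0, rng.expovariate(1.0)
    while t < expected:
        count += 1
        t += rng.expovariate(1.0)
    return count


def simulated_ce(expected_count: float, replicates: int = 1000, seed: int = 0) -> float:
    """Relative spread (SD / mean) of counts over repeated hypothetical samples."""
    rng = random.Random(seed)
    counts = [poisson_count(rng, expected_count) for _ in range(replicates)]
    return statistics.stdev(counts) / statistics.mean(counts)


for n in (100, 200, 1000):
    print(f"n = {n:4d}: simulated CE {simulated_ce(n):.3f}, 1/sqrt(n) = {1 / math.sqrt(n):.3f}")
```

Even in this best case, 100 counted cells leave about 10% error, and 200 still leave about 7%.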

- The notion that large numbers of neurons (30-50%) are lost during decades of normal human aging has been refuted.

- Actual losses appear to be on much smaller scales and are more region-specific.

- The GNR of 1.5 in gray matter of the adult human cortex was confirmed by numerous subsequent investigations.

- The GNR varies locally in gray matter between 1.2 in occipital and 3.6 in frontal areas.

- One of the major, if not most serious, problems with histology-based counting methods is the technical difficulty of recognizing glia and distinguishing them from small neurons.

- To this day, we still haven’t found a solution.

- Therefore, the design of methods that can accurately distinguish neurons from glia is important.

- Cerebellum (10% volume)

- In the cerebellum, early investigators had suspected a large number of neurons and a low number of non-neuronal cells.

- The GNR for the entire human cerebellum appears to be about 0.05.

- The unusual cellular composition of the cerebellum was a key factor in failed attempts to calculate the true GNR for the total human brain and a major reason behind the one trillion glia myth.
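Here's a small Python sketch of why a cortical GNR can't simply be extrapolated to the whole brain. The whole-brain ratio is total glia divided by total neurons. That is a neuron-weighted average of the regional ratios, so a neuron-rich region with a very low GNR drags it down. The regional GNRs (1.5 for cortical gray matter, 0.05 for the cerebellum) come from these notes. The neuron counts are hypothetical round numbers, and white matter and the remaining regions are ignored.

```python
def whole_brain_gnr(regions: dict[str, tuple[float, float]]) -> float:
    """regions maps name -> (neuron count, regional glia-to-neuron ratio)."""
    total_neurons = sum(n for n, _ in regions.values())
    total_glia = sum(n * gnr for n, gnr in regions.values())
    return total_glia / total_neurons


regions = {
    "cerebral cortex": (16e9, 1.5),  # GNR from the notes; neuron count hypothetical
    "cerebellum": (60e9, 0.05),      # GNR from the notes; neuron count hypothetical
}
print(round(whole_brain_gnr(regions), 2))  # 0.36, far below the cortical 1.5
```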

- Remaining (2-8% volume)

- Until 2009, there had been no attempts to estimate the total number of neurons or glia in this part of the brain.

- The total numbers of neurons and glia in the brainstem, diencephalon, and striatum don’t come close to those in the cerebral cortex or cerebellum.

- This is in part due to its small volume.

- Our current best estimate for the cellular composition of the entire human brain is between 67 and 86 billion neurons and around 40 to 50 billion glia.
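Dividing these two ranges brackets the implied whole-brain GNR. The lower bound uses the smallest glia count over the largest neuron count, and the upper bound the reverse:

$$
\text{GNR} = \frac{N_{\text{glia}}}{N_{\text{neurons}}}, \qquad
\frac{40 \times 10^{9}}{86 \times 10^{9}} \approx 0.47 \;\le\; \text{GNR} \;\le\; \frac{50 \times 10^{9}}{67 \times 10^{9}} \approx 0.75
$$

Either way, the ratio is below 1:1.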

- The GNR is important because it informs us about brain development, physiology, diseases, aging, and brain evolution.

- E.g. Comparing brain regions.

- No notes on the history of the GNR.

- Differences in GNR across species are largely determined by changes in neuronal densities rather than changes in glia densities.

- Small neurons are difficult to distinguish from glia.

- A breakthrough seemed to have been achieved by using an antibody against a neuron-specific nuclear antigen (NeuN).

- Remarkably, errors in neuron number, glia number, and GNR appeared in the most prestigious neuroscience textbook, Principles of Neural Science.

- The lack of citations for the 10:1 GNR over a 50-year period is an example of a major failure in the scientific process that’s supposed to self-correct invalid claims or reports.

- Why should we care about cell counting?

- Three examples of how cell counting has had a significant impact

- Aging human brain

- Is there a significant loss of neurons in the normal aging brain?

- Studies from 1950 to 1980 reported, and it was generally believed, that the normal aging brain loses large numbers of neurons every day after age 30.

- E.g. A 60-year-old would have half as many cerebral neurons as they had started with.

- However, we now know that the shrinkage of brains after fixation depends on age and that the reference volumes of brains from older people differ from those of younger people.

- When this is controlled for, there is very little, if any, normal loss of neurons in most parts of the brain.

- This myth had found its way into textbooks, curricula, and common knowledge, making it difficult to correct.
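The sketch below uses invented numbers to show how ignoring age-dependent shrinkage can manufacture neuron loss. Both hypothetical brains contain the same number of neurons. For illustration only, the older one is assumed to shrink less during fixation, so its measured density is lower. Multiplying each density by that brain's own fixed reference volume recovers identical totals. Reusing one assumed volume for every brain produces an apparent loss.

```python
# Hypothetical numbers: both brains contain exactly the same number of neurons.
TRUE_NEURONS = 16e9
FRESH_VOLUME_MM3 = 500_000.0  # same fresh (unfixed) volume for both brains


def total_from_density(density_per_mm3: float, reference_volume_mm3: float) -> float:
    """Absolute number = numerical density x reference volume."""
    return density_per_mm3 * reference_volume_mm3


def estimates(volume_shrinkage: float, assumed_volume_mm3: float) -> tuple[float, float]:
    """Return (correct, naive) totals for a brain that lost this fraction of its volume
    during fixation. 'Correct' uses the brain's own fixed volume; 'naive' reuses one
    assumed volume for every brain."""
    fixed_volume = FRESH_VOLUME_MM3 * (1 - volume_shrinkage)
    density = TRUE_NEURONS / fixed_volume  # what counting in fixed sections measures
    return (total_from_density(density, fixed_volume),
            total_from_density(density, assumed_volume_mm3))


# Hypothetical: young tissue shrinks by 30% of its volume, old tissue by only 15%.
ASSUMED_VOLUME = FRESH_VOLUME_MM3 * (1 - 0.30)
for age, shrink in {"young": 0.30, "old": 0.15}.items():
    correct, naive = estimates(shrink, ASSUMED_VOLUME)
    print(f"{age}: correct {correct:.3g}, naive {naive:.3g}")
```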

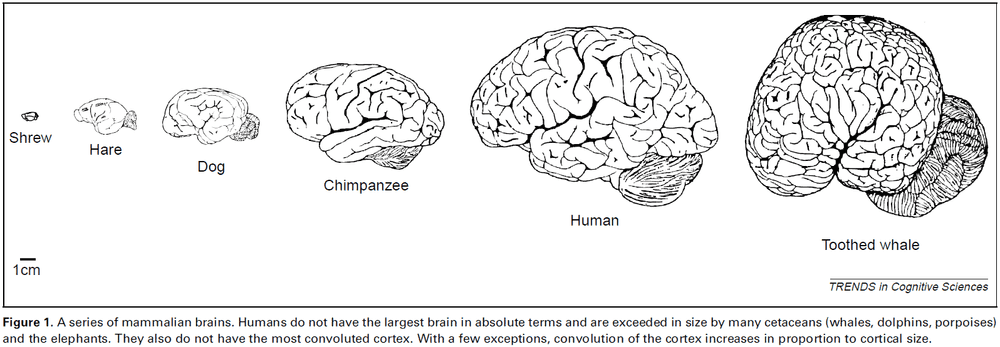

- Evolution of the brain

- Throughout the 20th century, the prevailing notion was that the human brain had an exceptional cellular composition among species, which was responsible for the superior cognitive abilities of humans.

- E.g. Previous work suggested that the human neocortex showed an abnormally high GNR when compared with other mammals.

- However, the development of more efficient cell counting methods such as the IF made it possible to re-examine this claim.

- Instead, we find that the GNR is highly conserved between structures and species, suggesting an important and close regulation of glia numbers in response to neuron density and size.

- Developmental neuroscience

- Neuron and glia numbers and their ratios fluctuate in relatively narrow ranges, even in different species and different brain structures, suggesting an optimal relationship between cell types.

- How are these ratios achieved and maintained during development?

- The assumption that the distribution of glia in one small part of the brain was representative for the whole brain likely contributed to the widely overstated GNR in reviews and textbooks.

- Reasons behind the perpetuation of the claim of one trillion glia in the human brain

- Failure to realize that the number of neurons and glia didn’t add up

- Focus on parts of the human brain that weren’t representative

- Neglecting the role of the cerebellum

- Missing primary literature

- Copying information from previous reviews without scrutiny

- Inaccurate quoting of others’ work

- Hesitation to challenge the prevailing dogma

- A precise balance of glia to neurons in human brain regions seems essential for normal functioning and this balance is disturbed in disease and trauma.

The Big Data Problem: Turning Maps into Knowledge

- The ability to record from every neuron in the brain of an awake and behaving animal is unquestionably useful.

- Having a wiring diagram overlaid on such activity maps is probably a dream come true for most systems neuroscientists.

- However, the vast number of neurons in the brain results in enormous amounts of data.

- This paper argues that big data and the challenges associated with it (mining, storing, distributing) aren’t the key challenge we face in making neural maps useful.

- Instead, the author suggests that the essential ingredient is the experimental design used to gather and analyze such data.

- In essence, the hard work is in formulating the precise question we want answered and what an answer would look like.

- Big data: any data that exceeds the size of a standard laptop hard drive.

- It’s important to distinguish between big data and complex data.

- Complex data: any data that’s complicated, hard to interpret, and hard to compress.

- Connectomics relies on recovering a circuit diagram by imaging the whole region of interest at the resolution of an electron microscope (EM).

- The size of raw connectomics data is truly daunting.

- E.g. A mouse brain imaged at 5 nm × 5 nm × 40 nm resolution in a volume of 500 mm³ would generate 500 petabytes of data.

- However, we can compress this data by focusing on what we want, which is the connectivity matrix between the 100 million neurons that a mouse brain has.

- If we assume 1000 connections for each neuron, the connectivity matrix has about $10^{11}$ nonzero entries ($10^{8}$ neurons × $10^{3}$ connections), resulting in a data set of a few hundred gigabytes.

- This connectivity matrix would be complex data but not big data.

- Thus, the whole-brain EM volume data can be reduced and compressed by six orders of magnitude.
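Here's a quick check of the arithmetic above in Python. The voxel size, volume, neuron count, and connections per neuron come from the text. Two encoding choices are my assumptions: one byte per voxel (8-bit grayscale) and one 32-bit partner index per connection.

```python
import math

NM3_PER_MM3 = 1e18  # 1 mm = 1e6 nm, so 1 mm^3 = 1e18 nm^3


def raw_em_bytes(volume_mm3: float, voxel_nm: tuple[float, float, float],
                 bytes_per_voxel: int = 1) -> float:
    """Raw image size, assuming one 8-bit grayscale value per voxel by default."""
    voxel_volume_nm3 = voxel_nm[0] * voxel_nm[1] * voxel_nm[2]
    return volume_mm3 * NM3_PER_MM3 / voxel_volume_nm3 * bytes_per_voxel


def connectivity_bytes(neurons: float, connections_per_neuron: float,
                       bytes_per_entry: int = 4) -> float:
    """Sparse connectivity stored as one 32-bit partner index per connection
    (grouped by source neuron, so per-neuron offsets are small and ignored)."""
    return neurons * connections_per_neuron * bytes_per_entry


raw = raw_em_bytes(500, (5, 5, 40))
graph = connectivity_bytes(1e8, 1e3)
print(f"raw EM volume:     {raw / 1e15:.0f} PB")
print(f"connectivity list: {graph / 1e9:.0f} GB")
print(f"reduction factor:  ~10^{math.log10(raw / graph):.1f}")
```

Under these assumptions, the raw volume comes to 500 PB and the connectivity list to 400 GB, a reduction of roughly a million-fold.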

- If we consider recording all of the spikes in all neurons of the brain, we can envision a similar compression.

- E.g. 500 gigabytes of activitome data.

- Raw data sets might be very large, but once converted into information, the volumes aren’t big data anymore.

- What’s the best approach to collecting big data?

- We could envision either large-scale industrial data collection or the traditional small-scale individual lab approach.

- Once the data are collected, the question becomes how to best turn this information into knowledge.

- The challenge in neuroscience will be to come up with good questions and intelligent experiments.

- The author doubts that new technologies for helping us identify and isolate neuronal subtypes will lead to a paradigm shift or a fundamentally new way of doing neuroscience.

- The name of the game will always be to think carefully and deeply about how behavior emerges from neurons.

A quantitative description of membrane current and its application to conduction and excitation in nerve

- This paper discusses results from the preceding set of papers, puts them into mathematical form, and shows that they account for conduction and excitation in quantitative terms.

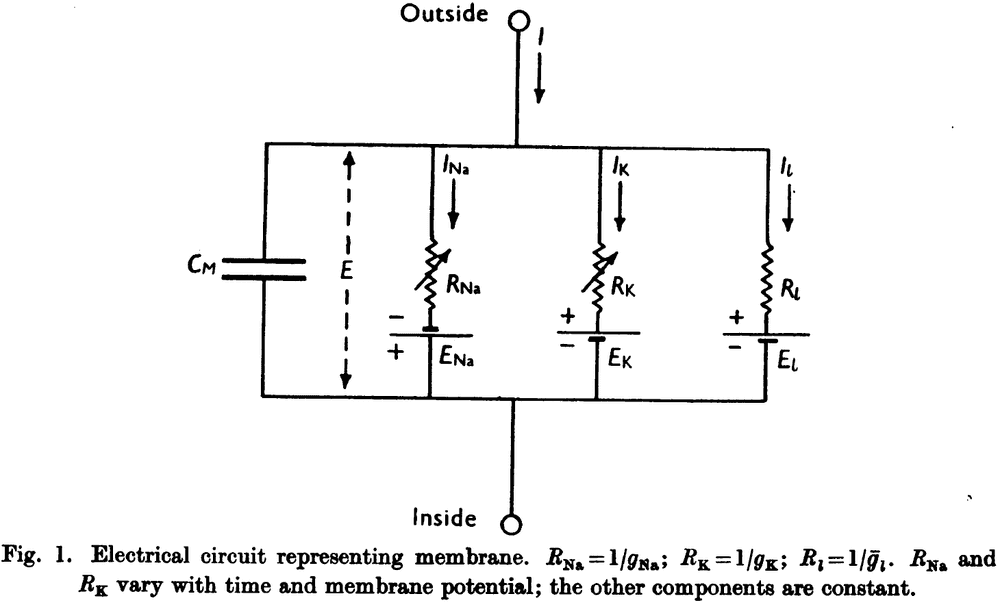

- The previous results suggest that the electrical behavior of the neuron cell membrane may be represented by the electrical circuit in figure one.

- In the circuit, current can be carried through the cell membrane either by charging the membrane capacity or by movement of ions through parallel resistors.

- The current is divided into three components: sodium ions ($I_{Na}$), potassium ions ($I_K$), and a small leakage current ($I_l$) made up of chloride and other ions.

- Each component is determined by its own driving force, measured as an electrical potential difference, and a permeability coefficient with the dimensions of a conductance.

- E.g. $I_{Na} = g_{Na}(E - E_{Na})$, where $E$ is the membrane potential and $E_{Na}$ is the equilibrium potential for sodium ions.

- Our experiments suggest that $g_{Na}$ and $g_K$ are functions of time and membrane potential, but the other variables (the equilibrium potentials $E_{Na}$, $E_K$, and $E_l$) are constant.
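Written out, the circuit says the total membrane current $I$ is the capacitive current plus the three ionic currents, each a conductance times its driving force. The symbols are:

- $E$: the membrane potential.
- $C_M$: the membrane capacity per unit area.
- $E_{Na}$, $E_K$, $E_l$: the equilibrium potentials.
- $g_{Na}$ and $g_K$: the time- and voltage-dependent conductances.
- $\bar{g}_l$: the constant leak conductance.

$$
\begin{aligned}
I &= C_M \frac{dE}{dt} + I_{Na} + I_K + I_l \\
I_{Na} &= g_{Na}\,(E - E_{Na}) \\
I_K &= g_K\,(E - E_K) \\
I_l &= \bar{g}_l\,(E - E_l)
\end{aligned}
$$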

- To understand how these complicated phenomena interact to form the action potential and refractory period, we should obtain expressions relating the sodium and potassium conductances to time and membrane potential.

- While our experiments are unlikely to give any information about the nature of the molecular events underlying changes in permeability, our evidence does refute some theories while being consistent with others.

- The first important point is that the changes in membrane permeability appear to depend on membrane potential and not membrane current.

- E.g. Whether the external sodium concentration is higher or lower than the internal concentration sets the sign of the driving force; when the driving force flips sign, the sodium current changes sign but still appears to follow the same time course.
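The Nernst equation makes the sign flip concrete: $E_{Na} = \frac{RT}{F}\ln\frac{[Na^+]_o}{[Na^+]_i}$. Once external sodium drops below internal sodium, $E_{Na}$ turns negative. At the same clamped potential, the driving force $E - E_{Na}$, and with it the current, then reverses even though the conductance is unchanged. The concentrations, temperature, conductance, and clamp potential below are illustrative assumptions. The sign convention is the modern one (inward current negative).

```python
import math

GAS_CONSTANT = 8.314  # J/(mol K)
FARADAY = 96485.0     # C/mol


def nernst_mv(conc_out_mm: float, conc_in_mm: float, valence: int = 1,
              temp_c: float = 6.3) -> float:
    """Equilibrium potential (mV) from the Nernst equation; 6.3 degC is an assumed temperature."""
    t_kelvin = temp_c + 273.15
    return 1000.0 * GAS_CONSTANT * t_kelvin / (valence * FARADAY) * math.log(conc_out_mm / conc_in_mm)


def sodium_current(g_na: float, e_mv: float, e_na_mv: float) -> float:
    """I_Na = g_Na (E - E_Na) in uA/cm^2 for g_Na in mS/cm^2; negative means inward."""
    return g_na * (e_mv - e_na_mv)


NA_IN = 50.0      # hypothetical internal [Na+], mM
CLAMP_MV = -10.0  # hypothetical clamped membrane potential, mV
G_NA = 20.0       # same hypothetical conductance in both solutions, mS/cm^2

for na_out in (460.0, 25.0):  # normal vs. reduced external [Na+], hypothetical, mM
    e_na = nernst_mv(na_out, NA_IN)
    i_na = sodium_current(G_NA, CLAMP_MV, e_na)
    direction = "inward" if i_na < 0 else "outward"
    print(f"[Na]o = {na_out:5.0f} mM: E_Na = {e_na:6.1f} mV, I_Na = {i_na:8.1f} uA/cm^2 ({direction})")
```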

- How might changes in the distribution of a charged particle affect the ease with which sodium ions cross the membrane?

- The first effect of depolarization is a movement of negatively charged molecules from the outside to the inside of the membrane resulting in an outward current.

- How do the negatively charged molecules cross the cell membrane?

- One hypothesis is that sodium ions cross the membrane in combination with a lipoid-soluble carrier, which lets them pass through the lipid bilayer of the cell membrane.

- Another hypothesis is that sodium movement depends on the distribution of charged particles that don’t act as carriers but allow sodium to pass through the membrane when they occupy particular sites in the membrane (with hindsight, this is the correct hypothesis).

- Much of what’s been said about changes in sodium permeability also applies to the change in potassium permeability.

- However, the potassium system differs from the sodium system in the following ways: the activating molecules have an affinity for potassium but not sodium, they move more slowly, and they aren’t blocked or inactivated.

- Another hypothesis is that only one system is present but that its selectivity changes soon after membrane depolarization.

- One of the most striking properties of the membrane is the extreme steepness of the relation between ionic conductance and membrane potential.

- Details of the mechanism will probably not be settled for some time, but it seems difficult to escape the conclusion that the changes in ionic permeability depend on the movement of some component of the membrane which behaves as though it has a large charge or dipole moment.

- No notes on the section “mathematical description of membrane current during a voltage clamp”.

- The discussion in the previous part shows that there’s little hope for calculating the time course of the sodium and potassium conductances from first principles since we don’t know how or why they change; we just know that they do change.

- Our goal here is to find equations that describe the conductances with reasonable accuracy and are sufficient for theoretical calculation of the action potential and refractory period.

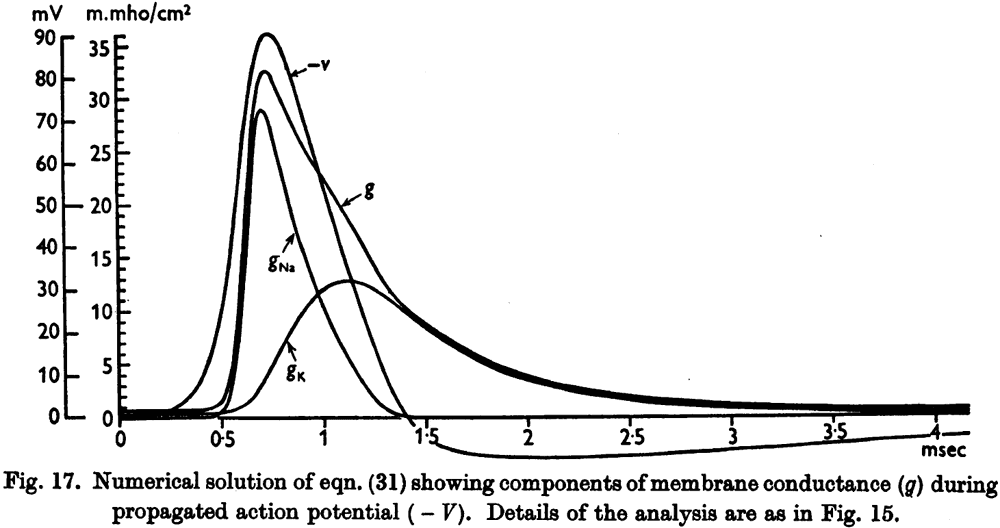

- The rapid rise in voltage is due almost entirely to the sodium conductance, but after the peak, the potassium conductance takes a larger share. By the time the potassium conductance peaks, the sodium conductance is negligible.

- The presented results show that the derived equations predict, with fair accuracy, many of the electrical properties of the squid giant axon.

- E.g. The form, duration, and amplitude of membrane and propagating spike, the conduction velocity, impedance changes, refractory period, subthreshold responses, and oscillations.
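To see the equations generate a spike, here's a compact forward-Euler integration of a space-clamped ("membrane") action potential. It uses the standard Hodgkin-Huxley gating forms $g_{Na} = \bar{g}_{Na} m^3 h$ and $g_K = \bar{g}_K n^4$ with the widely used modern parameter set. That set expresses potentials as absolute values (rest near -65 mV, depolarization positive) rather than the paper's displacement-from-rest convention. The stimulus, step size, and duration are my choices. The printout lets you check the timing described above: the sodium conductance peaks first and has largely subsided by the time the potassium conductance peaks.

```python
import math

# Widely used HH parameter set in the modern convention
# (absolute potentials in mV, rest near -65 mV, depolarization positive).
C_M = 1.0                              # uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials, mV


def vtrap(x: float, y: float) -> float:
    """x / (exp(x / y) - 1), replaced by its series expansion near x = 0."""
    if abs(x / y) < 1e-6:
        return y * (1 - x / y / 2)
    return x / (math.exp(x / y) - 1)


def rates(v: float) -> tuple[float, float, float, float, float, float]:
    """Opening (alpha) and closing (beta) rates, in 1/ms, for gates m, h and n."""
    a_m = 0.1 * vtrap(-(v + 40), 10)
    b_m = 4.0 * math.exp(-(v + 65) / 18)
    a_h = 0.07 * math.exp(-(v + 65) / 20)
    b_h = 1.0 / (1 + math.exp(-(v + 35) / 10))
    a_n = 0.01 * vtrap(-(v + 55), 10)
    b_n = 0.125 * math.exp(-(v + 65) / 80)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate(t_max=20.0, dt=0.01, stim_start=2.0, stim_dur=1.0, stim_amp=20.0):
    """Forward-Euler integration; returns rows of (t, V, g_Na, g_K)."""
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start each gate at its steady-state value for the resting potential.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    trace = []
    for step in range(round(t_max / dt)):
        t = step * dt
        g_na, g_k = G_NA * m**3 * h, G_K * n**4
        trace.append((t, v, g_na, g_k))
        i_ion = g_na * (v - E_NA) + g_k * (v - E_K) + G_L * (v - E_L)
        i_stim = stim_amp if stim_start <= t < stim_start + stim_dur else 0.0
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        # Update all state variables from their values at time t.
        v += dt * (i_stim - i_ion) / C_M
        m += dt * (a_m * (1 - m) - b_m * m)
        h += dt * (a_h * (1 - h) - b_h * h)
        n += dt * (a_n * (1 - n) - b_n * n)
    return trace


trace = simulate()
t_vpk, v_pk, _, _ = max(trace, key=lambda row: row[1])
t_na, _, g_na_pk, _ = max(trace, key=lambda row: row[2])
t_k, _, g_na_at_k, g_k_pk = max(trace, key=lambda row: row[3])
print(f"spike peak {v_pk:.1f} mV at t = {t_vpk:.2f} ms")
print(f"g_Na peaks at {g_na_pk:.1f} mS/cm^2, t = {t_na:.2f} ms")
print(f"g_K peaks at {g_k_pk:.1f} mS/cm^2, t = {t_k:.2f} ms (g_Na then {g_na_at_k:.2f})")
```

Forward Euler with a 0.01 ms step is crude but stable for these rate constants. A smaller step or an adaptive ODE solver would be the safer choice for quantitative work.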

- The agreement between the equations and the experimental results must not be taken as evidence that our equations are anything more than an empirical description of the time course of the changes in permeability to sodium and potassium.

- We do establish that the fairly simple permeability changes in response to changes in membrane potential are a sufficient explanation of the wide range of phenomena that have been fitted by solutions of the equations.

- The equations in this paper are limited in that they only cover short-term responses of the membrane and that they only apply to the isolated squid giant axon.

- Some additional process, on top of the action potential process, must happen in a nerve to maintain the ionic gradients, which are the immediate source of energy used in impulse conduction (with hindsight, this is hinting at ion pumps).

Brodmann: a pioneer of human brain mapping—his impact on concepts of cortical organization

- Korbinian Brodmann (1868-1918) is an important pioneer in neuroscience for his work on brain mapping.

- Brodmann’s maps dominate his legacy, detailing 48 cortical areas of the human cerebral cortex with some further subdivisions in later modifications.

- The goal of this paper is to remember Brodmann’s life and work, and his influence on and importance for current research concepts in various fields of modern brain mapping.

- Brodmann was born in a small village in Southern Germany as the illegitimate son of Sophie Benkler, a maidservant in the house of the wealthy Brodmann family.

- At age 21, Brodmann spent a year studying medicine, during which he attended a lecture on medical physics by Wilhelm Röntgen, the discoverer of X-rays and a recipient of the Nobel Prize in Physics.

- From age 21 to 27, Brodmann continued to study medicine and philosophy, graduating at 27 to work as a physician.

- The year 1896 (age 28) brought a fateful encounter for Brodmann’s scientific future. In this year, Brodmann met Oskar Vogt, who sparked his fascination with the future of brain research.

- Vogt finally convinced Brodmann to accept a position in Alexandersbad and to specialize in psychiatry and hypnosis.

- Brodmann went on to receive a postdoctoral position in the Neurobiological Central Station in Berlin.

- This station was founded by Vogt in 1901 and consisted of a medical practice for hypnosis, a research lab, and animal and photographic facilities.

- It was a completely private institution financially supported by the Krupp family and Vogt’s private income as a physician.

- It was here that Brodmann started his pioneering work on the cytoarchitecture of mammalian brains.

- From 1898 to 1900, Brodmann set out to broaden his experience in psychiatry and neuroanatomy.

- From 1901 onwards, Brodmann was an assistant at the neurobiological laboratory of the University of Berlin under Doctor O. Vogt until 1910 when Brodmann became an assistant physician of the Royal University Clinic for Mental and Nervous Conditions.

- The lab was important to Brodmann because it was the first time that he would be financially secure.

- The years between 1901 and 1910 were the period of Brodmann’s work on cytoarchitecture and localization, but his work wasn’t exclusively focused on cytoarchitecture.

- E.g. Brodmann published papers on hypnosis, astrocytes, neuropathology, psychopathology, polarization microscopy of myelinated nerve fibers, and brain activity and blood flow, as well as hundreds of printed reviews and other publications.

- Brodmann was a very critical reviewer and his reviews increased his number of opponents.

- His most influential studies are those on cytoarchitecture and the organization of the cerebral cortex.

- In a series of eight papers, Brodmann and his contemporary Campbell founded the field of cytoarchitectonic mapping.

- The commercial technology of the time was inadequate for Brodmann’s ambitious plans, so they had to design and build a large microtome themselves, improve embedding and staining procedures, create novel photographic techniques, and prepare complete series of sections of entire human hemispheres.

- Brodmann’s monograph from 1909 is a summary of his concept of cytoarchitecture and describes the principles of his comparative neuroanatomical approach.

- Brodmann’s six-layer concept of the cortex is universally accepted in modern studies and textbooks.

- Brodmann’s most important finding from comparative topography is that the organization of the cerebral cortex follows a common architectonic plan in all mammals: a standard pattern of layers.

- The aim of his studies wasn’t to subdivide the cortex but to show that each cortical area has an evolutionary history.

- Brodmann wrote in his monograph: “Although my studies of localization are based on purely anatomical considerations … my ultimate goal was the achievement of a theory of function and its pathological deviations”.

- His search for functions of cytoarchitectonically defined areas led to the idea that single loci are active in different combinations when realizing a complex function.

- Brodmann revolutionized research on the microstructure of the human brain by establishing the common six-layered laminar bauplan and its regional modifications for the entire neocortex of mammals.

- One limitation of Brodmann’s maps is that he didn’t account for between-individual variability in the location and size of areas.

- One fix is to make cortical maps probabilistic, since the borders of cytoarchitectonic areas are highly variable between subjects.

- Another limitation is the observer-dependent definition of borders which has since been overcome with computerized image analysis.

- In 1908, Brodmann submitted his thesis to obtain a professorship position at the University of Berlin, which was rejected because of a dirty battle of office politics.

- In the end, Brodmann resigned his position in Berlin and moved to the Clinic for Psychiatry and Neurology of the University of Tübingen.

- In 1910, Brodmann was appointed as head of the anatomy lab.

- However, the beginning of World War I stopped all scientific plans and between 1914 and 1916, he served as a physician in a field hospital and took care of soldiers with brain injuries.

- Brodmann met his wife in 1917 (age 49) and had a daughter one year later.

- In 1918, Brodmann reached the climax of his career and was appointed as head of the Department for Topographical Anatomy in the famous Research Institute for Neurology in Munich.

- However, on August 22, 1918, Brodmann died suddenly of a devastating infection.

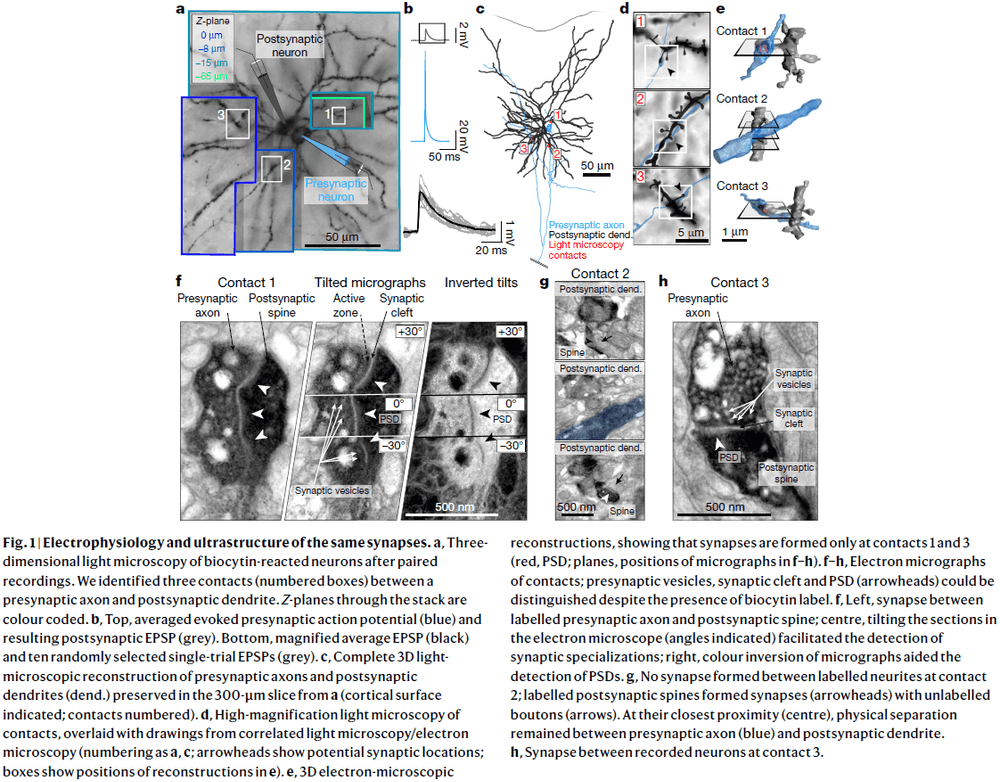

Structure and function of a neocortical synapse

- We don’t know how the structure of a synapse relates to its physiological transmission strength, a key limitation for inferring brain function from neuronal wiring diagrams.

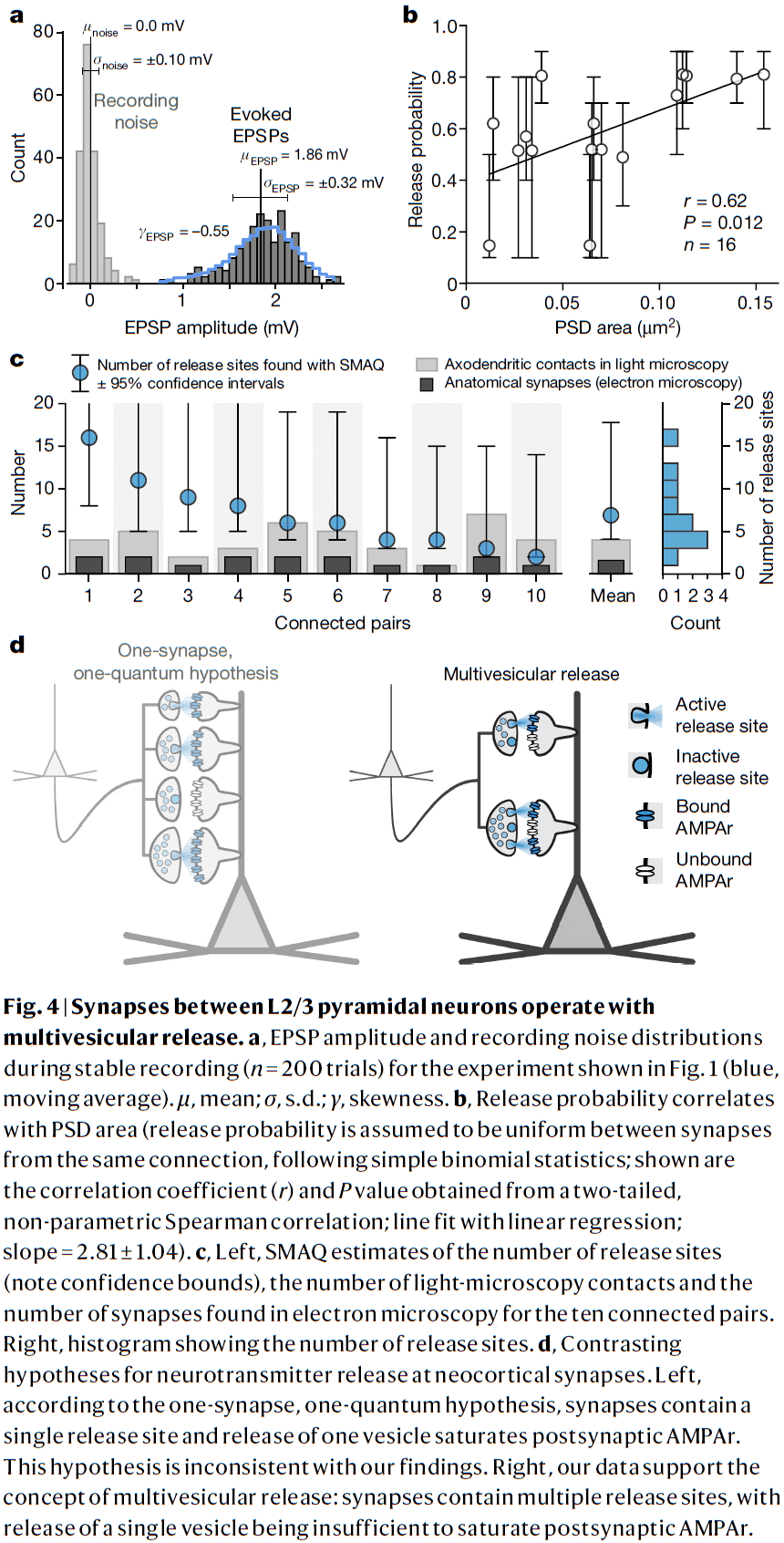

- This paper combines slice electrophysiology of connected pyramidal neurons in the mouse somatosensory cortex with correlated light microscopy and high-resolution electron microscopy of all synaptic contacts between the recorded neurons.

- We find a linear relationship between synapse size and strength, providing the missing link in assigning physiological weights to synapses.

- Previous studies of the frog neuromuscular junction revealed that synaptic transmission is probabilistic and follows binomial models with three parameters:

- Number of neurotransmitter release sites

- Synaptic response to the release of one vesicle (quantum) of neurotransmitter

- Probability of a vesicle being released

- Synaptic strength is measured as the amplitude of the excitatory postsynaptic potential (EPSP), and the mean EPSP amplitude is the product of the three parameters.
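The binomial model has $N$ release sites, release probability $p$, and quantal size $q$. The mean EPSP is then $Npq$ and its trial-to-trial variance is $Nq^2p(1-p)$. The sketch below computes both. Assuming $q$ is known independently, it inverts them to recover $p$ and $N$. This is textbook quantal analysis, not necessarily the exact fitting procedure the authors used, and all parameter values are hypothetical.

```python
import random
import statistics


def binomial_moments(n_sites: int, p: float, q: float) -> tuple[float, float]:
    """Mean and variance of EPSP amplitude when each of n_sites independently
    releases one quantum of size q with probability p."""
    return n_sites * p * q, n_sites * p * (1 - p) * q**2


def invert_moments(mean: float, var: float, q: float) -> tuple[float, float]:
    """Recover (p, N) from mean and variance, given a known quantal size q."""
    p = 1 - var / (q * mean)  # var / (q * mean) = 1 - p under the binomial model
    return p, mean / (q * p)


def simulate_epsps(n_sites: int, p: float, q: float, trials: int, seed: int = 1) -> list[float]:
    """Hypothetical trials: count the sites that release, then scale by q."""
    rng = random.Random(seed)
    return [q * sum(rng.random() < p for _ in range(n_sites)) for _ in range(trials)]


mean_epsp, var_epsp = binomial_moments(5, 0.4, 0.2)  # hypothetical: 5 sites, p = 0.4, q = 0.2 mV
print(invert_moments(mean_epsp, var_epsp, 0.2))      # recovers p and N up to rounding

epsps = simulate_epsps(5, 0.4, 0.2, trials=5000)
print(invert_moments(statistics.mean(epsps), statistics.variance(epsps), 0.2))
```

Suppose the recovered $N$ exceeds the number of synapses found by electron microscopy. Then some synapses must release more than one vesicle per trial, which is the logic behind the multivesicular-release conclusion below.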

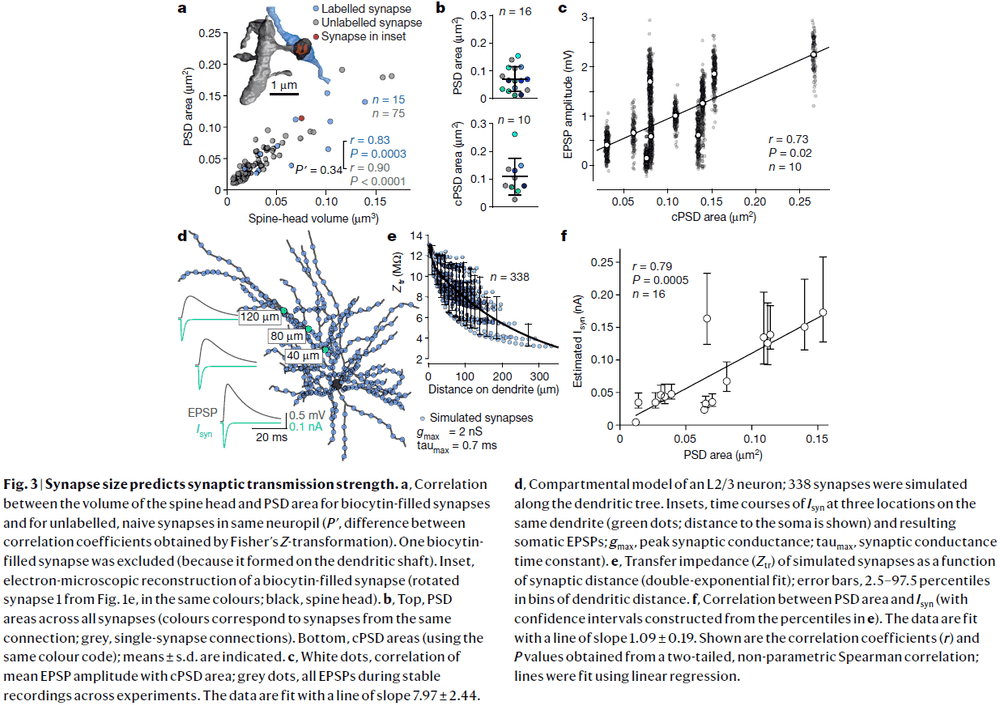

- Structurally, the area of the postsynaptic density (PSD) is proportional to its number of AMPA receptors and scales with the volume of the dendritic spine head.

- Whether and how the EPSP amplitude relates to PSD area remains unknown for any given synapse in the brain.

- Furthermore, it’s unclear whether individual neocortical synapses release more than one vesicle.

- Here we relate synaptic structure and function to determine the size-strength relationship and mode of vesicle release.

- We recorded EPSP amplitude and variance between connected layer 2/3 pyramidal neurons and identified all axodendritic contacts using both light microscopy and electron microscopy.

- Synapse size predicts synaptic strength

- We computed the total PSD area of a connection by summing the areas of its individual PSDs (for our six multisynaptic connections) and correlated it with the mean EPSP amplitude.

- We found a strong correlation (r=0.73, p=0.02) between total PSD area and mean EPSP amplitude.
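The analysis step above amounts to summing PSD areas within each connection and correlating those totals with mean EPSP amplitude. The sketch below does this with invented placeholder values, not the paper's data. It uses a hand-written Pearson correlation to avoid any library dependency.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical connections: PSD areas (um^2) of each synapse, and the mean EPSP (mV).
connections = [
    ([0.05, 0.08], 0.31),
    ([0.12], 0.28),
    ([0.06, 0.07, 0.10], 0.55),
    ([0.04], 0.12),
    ([0.09, 0.11], 0.60),
]
total_psd = [sum(areas) for areas, _ in connections]  # one total per connection
mean_epsp = [epsp for _, epsp in connections]
print(f"r = {pearson_r(total_psd, mean_epsp):.2f}")
```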

- Multivesicular release in neocortex L2/3

- PSD area correlated with the release probability of connections.

- Crucially, our estimates for the number of release sites exceeded the number of synapses for all connections by an average factor of 4.4.

- This implies that L2/3 pyramidal cells must operate predominantly through multivesicular release.

- We also found no correlation between estimated quantal size and PSD area, consistent with multivesicular release.

- Electron microscopy revealed synapses at only a minority of the axodendritic contacts identified by light microscopy, prompting a re-evaluation of light-microscopy studies, which overestimate the actual number of synapses.

- The notion that different neocortical synapse types use different release modes is questionable.

- Multivesicular release is a hallmark of many central and peripheral synapses.

- Multivesicular release should endow neocortical circuits with considerably higher computational power than is supposed under the one-synapse, one-quantum hypothesis.

- E.g. Enables dynamic tuning of synaptic strength by adjusting the number of release sites within synapses without structural remodelling.

- We’ve also shown a linear relationship between synapse size and strength, confirming the functional relevance of quantifying synapse size by electron microscopy.

CORTICAL PLASTICITY: From Synapses to Maps

- Cortical representations in adult animals aren’t fixed but are dynamic and continuously modified by experience.

- The cortex can preferentially allocate area to represent the most used peripheral inputs.

- Changes in cortical representations appear to underlie learning and the rules governing this cortical representational plasticity are becoming increasingly well understood.

- The fields of cortical synaptic plasticity and cortical map plasticity have been implicitly linked by the hypothesis that synaptic plasticity underlies cortical map reorganization.

- Recent experiments and theories have provided increasingly strong support for this hypothesis.

- The goal of this paper is to review the fields of both synaptic and cortical map plasticity and the work that attempts to unite them.

- The cortex reorganizes its effective local connections and responses following peripheral or central changes in input and in response to behavior.

- This capacity for reorganization partly accounts for certain forms of perceptual and motor learning.

- The challenge is to prove that synaptic plasticity is necessary and sufficient for lesion- and experience-driven dynamic cortical representation changes; to prove a causal link between cortical synaptic plasticity and cortical map reorganization.

- This challenge is made harder by the fact that we don’t yet understand exactly how the cortex processes information.

- E.g. What’s the function of recurrent connections? What’s the function of each cortical layer? What’s the role of inhibition?

- Flow of information through the neocortex

- Sensory information reaches the cortex from the thalamus via thalamocortical axons that terminate primarily in layer 4.

- Accordingly, layer 4 cells also show the shortest latency to sensory stimuli.

- Data supports the hypothesis that at each level of cortical processing, neurons are sampling from a larger input space, receiving convergent information from the previous level, and forming larger and more complex integrated and combinatorial receptive fields.

- There’s also substantial horizontal interconnectivity which integrates information from neighboring and distant regions.

- Horizontal connections may be particularly relevant for cortical map reorganization since it appears that areas that develop novel receptive fields after peripheral input manipulations may rely, in large part, on connections from neighboring cortical sectors.

- Cortical plasticity

- The cortex can allocate cortical area in a use-dependent manner.

- Review of Hebb’s rule.

- The detection of temporally correlated inputs provides a mechanism for forming topographic maps and for cortical cell assemblies to represent learned stimuli.

- A fundamental idea in neuroscience is that a change in the synaptic efficacy between two neurons is a substrate for learning and memory.

- One of the first examples of associative/Hebbian plasticity was described in hippocampal CA1 neurons.

- Review of long-term potentiation (LTP) and long-term depression (LTD).

- No notes on the protocols used to induce LTP.

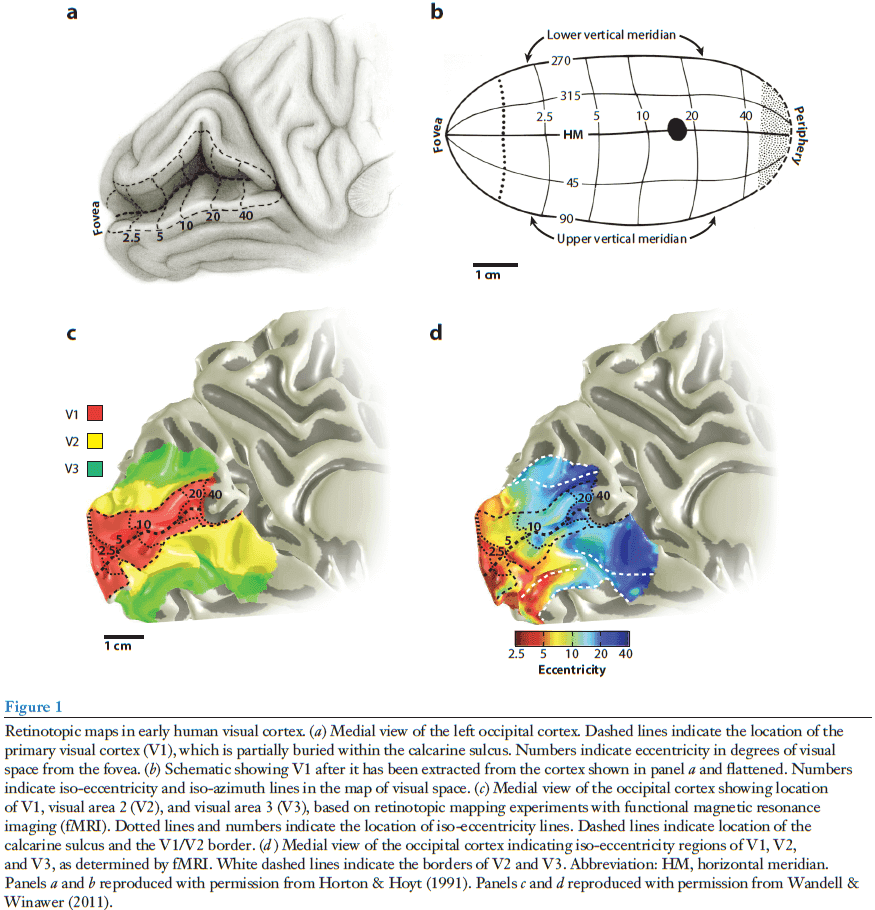

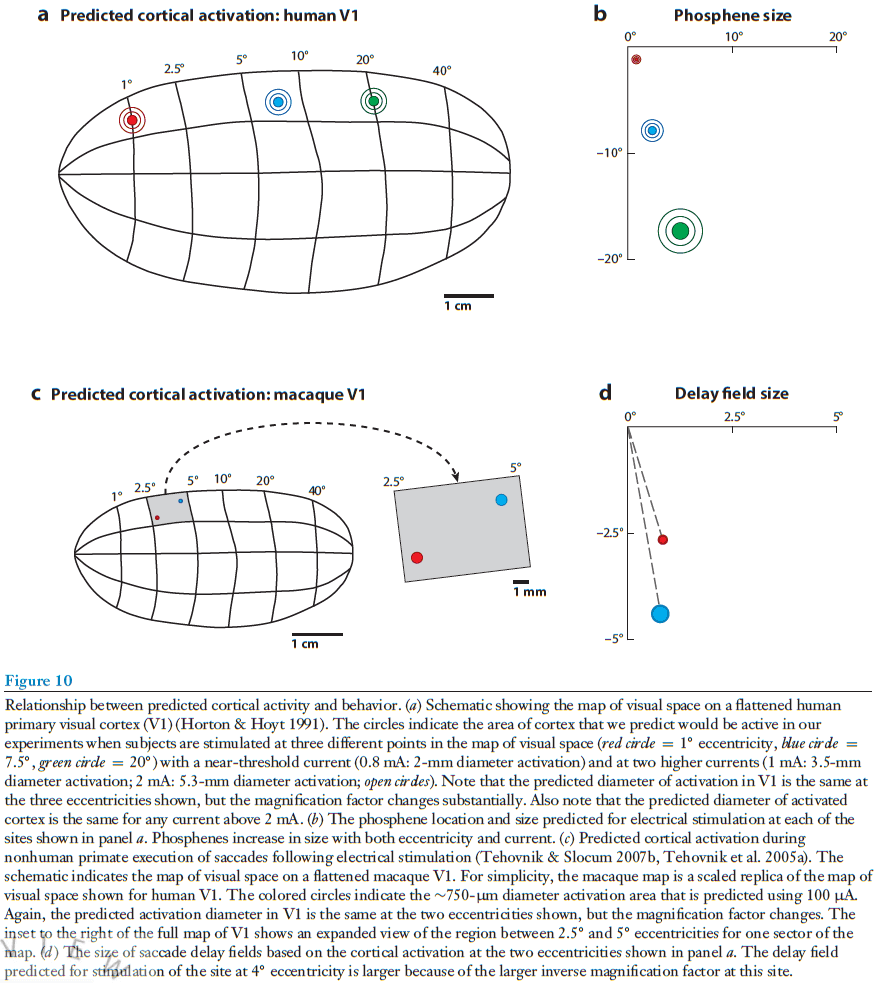

- Primary cortical sensory areas are organized topographically.

- E.g. S1, A1, and V1.

- Review of cortical plasticity following input destruction.

- E.g. Destroying the nerves supplying finger digit information.

- Hebbian plasticity is thought to play an important role in both cortical development and cortical reorganization in adult animals.

- Since Hebbian plasticity is based on the temporal correlations of inputs and since inputs from neighboring skin regions are generally more correlated than nonadjacent areas, neighboring cortical areas should represent neighboring surface areas, thus establishing a topographic map.

- A prediction from this hypothesis is that if the correlation between neighboring skin surfaces changes, then these changes should be reflected in cortical maps.

- Evidence supports this prediction and is consistent with the hypothesis that Hebbian synapses underlie cortical map formation and alteration.
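
The correlation-based argument above can be illustrated with a toy simulation. This is a minimal sketch, not a model from the reviewed paper: it assumes one linear postsynaptic cell, a plain Hebbian update (Δw_i = η·x_i·y), and multiplicative normalization that keeps the total weight fixed so that inputs compete. Inputs A and B stand in for hypothetical neighboring skin sites driven by the same stimulus; input C is independent.

```python
import random


def hebbian_with_normalization(steps=50_000, eta=0.001, seed=0):
    """Toy Hebbian learning for one linear postsynaptic cell with three inputs.

    Inputs A and B (hypothetical neighboring skin sites) are driven by the same
    stimulus, so they are perfectly correlated; input C fires independently.
    Returns the final weights [w_A, w_B, w_C].
    """
    rng = random.Random(seed)
    w = [1 / 3, 1 / 3, 1 / 3]  # equal starting weights that sum to 1
    for _ in range(steps):
        shared = 1.0 if rng.random() < 0.5 else 0.0  # common stimulus for A and B
        independent = 1.0 if rng.random() < 0.5 else 0.0  # stimulus for C only
        x = [shared, shared, independent]
        y = sum(wi * xi for wi, xi in zip(w, x))  # linear postsynaptic response
        # Hebbian step: each synapse grows in proportion to pre * post activity.
        w = [wi + eta * xi * y for wi, xi in zip(w, x)]
        # Multiplicative normalization keeps total weight at 1, forcing competition.
        total = sum(w)
        w = [wi / total for wi in w]
    return w


print([round(wi, 2) for wi in hebbian_with_normalization()])
```

Because A and B are always co-active, the Hebbian term favors them and normalization takes the difference out of C's weight; with these settings the weights settle near roughly 0.37, 0.37, and 0.27, the leading eigenvector of the input correlation matrix.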

- Review of the barrel cortex in rodents.

- In addition to the expansion of spared inputs, a retraction of the lesioned inputs occurred in parallel.

- Recordings made weeks after lesions to both retinas revealed that the cortical area that previously responded to the lesioned part of the retina acquired new receptive fields corresponding to the retina surrounding the lesion.

- However, this only occurs when both retinas are lesioned, not just one, because many V1 neurons have binocular receptive fields.

- E.g. After lesions to only one retina, the cortical topography was relatively unchanged.

- Further studies suggest that neurons can acquire novel inputs not only from neighboring retinal areas but also from distant nonadjacent areas.

- The examples of cortical map reorganization discussed so far came from depriving the cortex of its normal inputs through lesions, but cortical maps also change under the reverse of deprivation, i.e., training.

- Skill-learning studies show that an increase in the activity of a limited subset of inputs can result in representational expansion.

- This shows that the cortex can dynamically allocate area in a use-dependent manner to different engaged inputs throughout life.

- E.g. An almost twofold expansion of the cortical representation of nipple-bearing skin in lactating female rats compared to nonlactating female rats.

- E.g. An almost twofold expansion in the size of the representation of the fingertips in monkeys that had to perform a difficult small-object retrieval task.

- E.g. Braille readers show enlarged right-hand representations compared to their left hand and to non-Braille readers.

- Criteria to judge whether synaptic plasticity is necessary and sufficient for cortical map reorganization

- Synaptic plasticity in the appropriate pathways should be seen with cortical representational reorganization.

- E.g. The relevant thalamocortical and corticocortical pathways should show synaptic changes relative to those of control animals.

- The manipulations that block synaptic plasticity should also block cortical reorganization.

- E.g. Pharmacological and genetic synaptic blockers.

- Induction of synaptic plasticity in vivo should be sufficient to generate cortical reorganization measured by changes in cortical map topography.

- Synaptic plasticity and the learning rules that govern this plasticity should be sufficient to fully explain the experimental data.

- E.g. Computer models/simulations that incorporate experimentally established forms of plasticity should be sufficient to account for cortical representational reorganization.

- Synaptic plasticity in the appropriate pathways should be seen with cortical representational reorganization.

- Virtually every level of the nervous system seems to exhibit plasticity under certain circumstances.

- But the primary site of this plasticity appears to be cortical rather than subcortical.

- E.g. After training monkeys on a tactile task where adjacent fingers received simultaneous stimulation, many somatosensory cortical units developed combined-digit receptive fields but thalamic recordings didn’t reveal any combined-digit receptive fields. Thus, the convergence of information from different fingers seems to happen in the cortex.

- Related findings have also been reported in the visual system.

- Topographic changes could come from the strengthening or sprouting of thalamocortical axons and/or intracortical horizontal projections.

- It appears that novel receptive fields initially result from horizontal information flow mediated through corticocortical connections.

- E.g. After focal lesions to both retinas, collateral axons from cortical neurons surrounding the lesioned visual field branched predominantly into the deprived area as opposed to the normal area. In contrast, labeling of thalamocortical axons showed that they didn’t extend into all of the reactivated cortex.

- E.g. In rodents, whisker-pairing experiments suggested that early changes were due to corticocortical plasticity and that later changes were likely due to the potentiation of existing thalamocortical connections or the formation of new ones.

- While these results suggest that the cortex is the primary site of cortical reorganization, it’s difficult to rule out contributions of plasticity occurring elsewhere.

- One possibility is that initial reorganization occurs in the cortex and that later reorganization occurs in subcortical areas in a retrograde fashion.

- If associative LTP is the primary mechanism underlying cortical reorganization, and if associative LTP depends on NMDA receptors, then blocking NMDA receptors should prevent cortical map reorganization.

- Studies find that NMDA receptor antagonists do interfere with cortical map development and plasticity in squirrel monkeys and cats.

- A problem with interpreting these experiments, however, is distinguishing whether reorganization was blocked because NMDA receptors are involved in synaptic plasticity or simply because the manipulation inactivated the cortex.

- Review of computational models of the development and reorganization of cortical maps.

- Two fundamental mechanisms invoked by computational models

- Hebbian learning where cooperative synapses are strengthened.

- Competitive mechanism of balancing weakened and strengthened synapses.

- While Hebbian plasticity is often emphasized as the mechanism underlying cortical map formation and reorganization, cortical reorganization in response to deafferentation can essentially be simulated based only on passive decay and postsynaptic normalization.

- E.g. A nerve transection deprives the cortex of all inputs from digits D1, D2, and D3. Due to passive decay, synapses from the D1, D2, and D3 pathways are weakened. As a result of the weakening, synapses from D4 are strengthened due to postsynaptic normalization.
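
The D1 to D4 example can be reproduced with only two ingredients, passive decay and postsynaptic normalization, and no Hebbian term. A minimal sketch, assuming a single cortical cell whose total synaptic weight is held constant and a hypothetical decay rate of 5% per step for silenced inputs:

```python
def simulate_deafferentation(weights, silenced, decay=0.05, steps=100):
    """Passive decay of silenced synapses plus postsynaptic normalization.

    weights: dict mapping input name -> synaptic weight onto one cortical cell.
    silenced: names of inputs cut off by the (hypothetical) nerve transection.
    decay: assumed fraction of weight a silenced synapse loses per step.
    """
    total = sum(weights.values())  # the cell's total synaptic weight is conserved
    w = dict(weights)
    for _ in range(steps):
        # Passive decay: only the silenced synapses weaken; no Hebbian term anywhere.
        for name in silenced:
            w[name] *= 1 - decay
        # Postsynaptic normalization: rescale every synapse to restore the total,
        # which indirectly strengthens the spared input(s).
        scale = total / sum(w.values())
        w = {name: wi * scale for name, wi in w.items()}
    return w


before = {"D1": 0.25, "D2": 0.25, "D3": 0.25, "D4": 0.25}
after = simulate_deafferentation(before, silenced=["D1", "D2", "D3"])
print({name: round(wi, 3) for name, wi in after.items()})
```

The spared D4 synapse ends up carrying almost all of the cell's weight even though nothing in the code directly potentiates active synapses.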

- Postsynaptic normalization is a critical component of many models, but there’s been little direct experimental evidence for such a mechanism.

- One issue is that observations of postsynaptic normalization may require a time course longer than the life span of slice experiments.

- Evidence supports the notion that the level of postsynaptic depolarization determines whether LTD or LTP is induced.

- E.g. Low-frequency stimulation (1 Hz) results in LTD, while high-frequency stimulation (100 Hz) results in LTP.
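
One standard way to express "the level of postsynaptic activity sets the sign of plasticity" is a BCM-style rule, swapped in here as an illustration rather than taken from the paper. A sketch, assuming a fixed modification threshold and, as a crude hypothetical simplification, that stimulation frequency stands in for both presynaptic and postsynaptic activity:

```python
def bcm_weight_change(pre, post, theta, eta=1e-4):
    """BCM-style rule: dw = eta * pre * post * (post - theta).

    Postsynaptic activity below the threshold theta gives depression (LTD-like);
    activity above theta gives potentiation (LTP-like).
    """
    return eta * pre * post * (post - theta)


# Hypothetical simplification: stimulation frequency (Hz) is used as a proxy for
# presynaptic activity and for the resulting postsynaptic depolarization.
THETA = 10.0  # illustrative modification threshold, same units as activity
for freq_hz in (1.0, 100.0):
    dw = bcm_weight_change(pre=freq_hz, post=freq_hz, theta=THETA)
    print(f"{freq_hz:g} Hz stimulation -> {'LTP' if dw > 0 else 'LTD'}")
```

This prints LTD for 1 Hz and LTP for 100 Hz. In the full BCM theory the threshold slides with the recent history of postsynaptic activity; it is held fixed here for simplicity.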

- We do not yet have a sufficient understanding of synaptic and cellular plasticity to fully account for the experimental data on cortical representational reorganization.

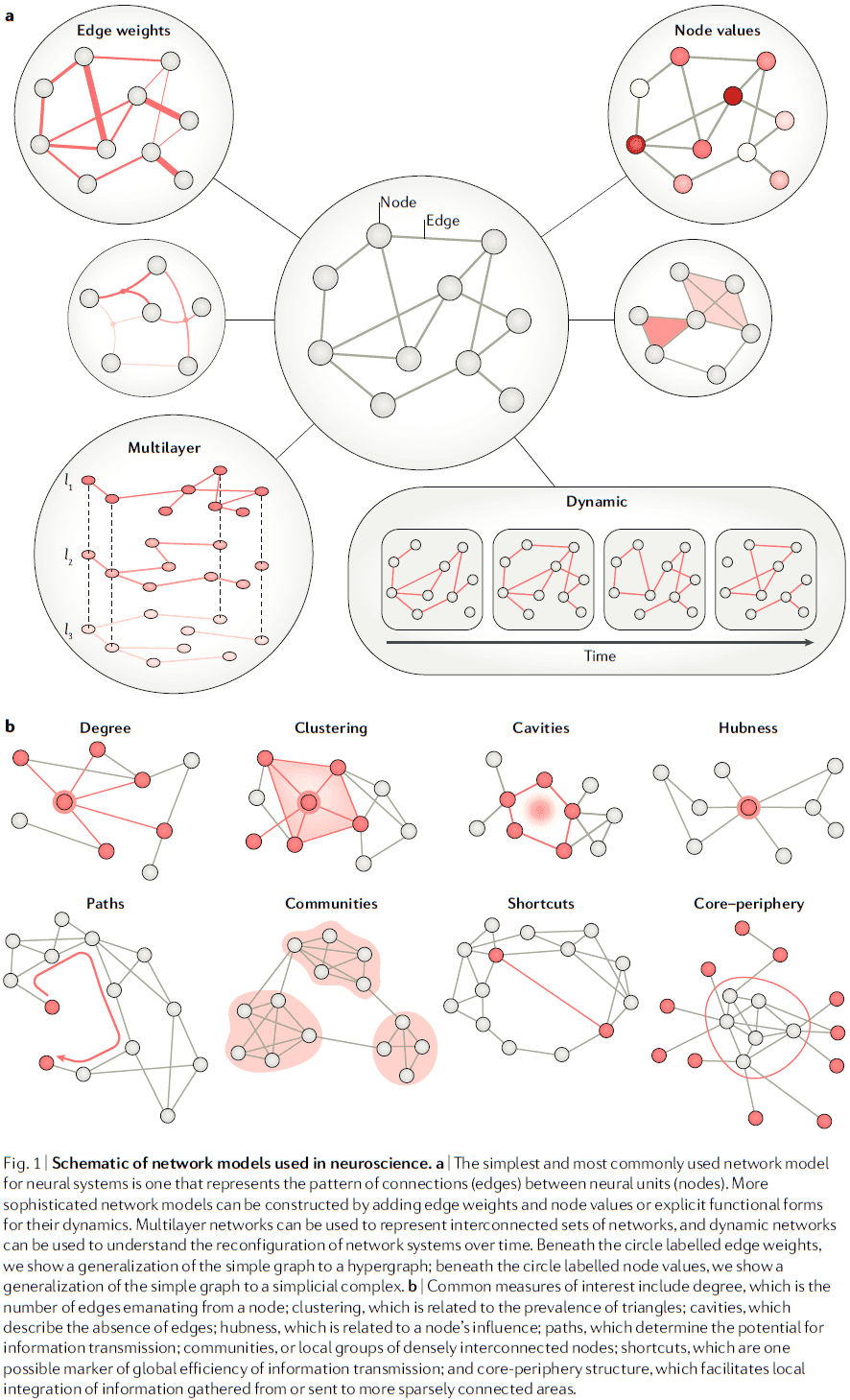

On the nature and use of models in network neuroscience

- This paper examines the field of network neuroscience by focusing on organizing principles that can reduce confusion and facilitate interdisciplinary collaboration.

- First, we describe the fundamental goals in constructing network models.

- Second, we review the most common network models along three dimensions: data-theory, structural-functional, and elementary-coarse.

- Third, we draw on biology, philosophy, and other disciplines to establish validation principles for these models.

- Review of the neuron doctrine: the nervous system isn’t a continuous entity but is made up of discrete units, neurons, with complex interconnections.

- Following the neuron doctrine, network neuroscience seeks to understand systems defined by individual functional units, often called nodes, and their relations, interactions, or connections, often called edges.

- Both nodes and edges, and any associated dynamics, form a network model that can be used to describe, explain, or predict behavior of the real physical network that it represents.

- These network models can be analyzed using quantitative techniques such as graph theory, hypergraphs, and simplicial complexes.

- E.g. Pairwise interactions in an adjacency matrix.
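
For the adjacency-matrix representation, a minimal sketch in plain Python, assuming an undirected, weighted network of four hypothetical regions given as an edge list:

```python
def adjacency_matrix(n_nodes, edges):
    """Symmetric weighted adjacency matrix from (i, j, weight) edges."""
    A = [[0.0] * n_nodes for _ in range(n_nodes)]
    for i, j, weight in edges:
        A[i][j] = weight
        A[j][i] = weight  # undirected: the pairwise interaction is symmetric
    return A


def degree(A):
    """Number of nonzero edges per node."""
    return [sum(1 for a in row if a != 0) for row in A]


def strength(A):
    """Sum of edge weights per node (weighted degree)."""
    return [sum(row) for row in A]


# Hypothetical 4-region network with pairwise connection weights.
edges = [(0, 1, 0.8), (1, 2, 0.5), (2, 3, 0.9), (0, 2, 0.2)]
A = adjacency_matrix(4, edges)
print(degree(A))  # [2, 2, 3, 1]
print(strength(A))
```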

- Network models can be constructed across different spatial and temporal scales.

- E.g. Nodes can be chosen to reflect anatomical or functional units. Edges can be chosen to reflect synapses, white matter tracts, structural covariance, or physical proximity.

- More sophisticated network models can be made by including heterogeneous and dynamic elements.

- E.g. Multilayer and multiplex network models.

- Temporal dynamics can be applied to both nodes and edges, enabling studies of networks as reconfigurable dynamical systems that evolve over time.

- Once constructed, these network models can be used to gain deeper insight into how patterns of relationships between units support emergent functions and behavior.

- E.g. Topological shortcuts may enable long-distance interactions and efficient large-scale information transmission.
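
The effect of a shortcut can be checked on a toy graph. A sketch, assuming an unweighted ring of 20 hypothetical nodes where each node links only to its two neighbors; the characteristic path length is the mean shortest-path length over all node pairs:

```python
from collections import deque


def ring_lattice(n):
    """Undirected ring: each node links only to its two immediate neighbors."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def add_edge(graph, i, j):
    graph[i].add(j)
    graph[j].add(i)


def shortest_path_lengths(graph, source):
    """Breadth-first search: hop counts from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nbr in graph[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist


def characteristic_path_length(graph):
    """Mean shortest-path length over all ordered node pairs (graph must be connected)."""
    total = pairs = 0
    for source in graph:
        for target, d in shortest_path_lengths(graph, source).items():
            if target != source:
                total += d
                pairs += 1
    return total / pairs


ring = ring_lattice(20)
before = characteristic_path_length(ring)  # 100/19, about 5.26 hops
add_edge(ring, 0, 10)  # one hypothetical long-range shortcut across the ring
after = characteristic_path_length(ring)
print(f"{before:.2f} -> {after:.2f}")
```

A single long-range edge is enough to pull the mean path length below the ring's 100/19 hops.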

- Important questions then follow on how the specific pattern of edges between nodes supports or hinders critical neurophysiological processes related to synchrony, communication, coding, and information transmission.

- Despite these benefits, the rapid growth of network neuroscience has led to many different kinds of network models and to an abstraction away from neuroscience.

- Furthermore, different assumptions about network neuroscience can hamper communication, collaboration, and discovery.

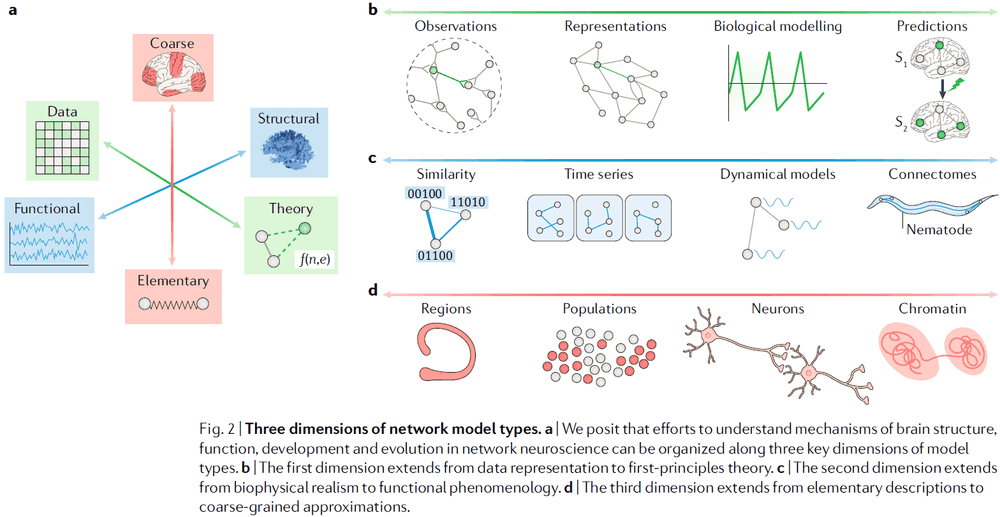

- We start by reviewing efforts to understand the mechanisms behind brain structure, function, development, and evolution in terms of three key model dimensions.

- From data representation to first-principles theory

- Is the network model a simple representation of data, or a theory of how the system behind the data might work?

- A simple way to differentiate this dimension is to ask if the model can make a prediction about how the system came to be or what the system will become.

- If the model can predict, it’s a theory-based model; if it can’t, it’s a data-based model.

- Another differentiator is that a data-based model can be stored in a simple graph, while a theory-based model must combine a graph with difference or differential equations specifying the dynamics, evolution, or function of the nodes, edges, or both.
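
To make the data versus theory distinction concrete: an adjacency matrix alone is a data-based model, while adding an equation for how node states change makes it able to predict future states. A minimal sketch, using linear diffusion (dx_i/dt = Σ_j A_ij (x_j - x_i)) integrated with Euler steps as an illustrative choice of dynamics, not one of the models named in these notes:

```python
def simulate_diffusion(A, x0, dt=0.01, steps=2000):
    """Euler-integrate linear diffusion on a graph: dx_i/dt = sum_j A[i][j] * (x_j - x_i)."""
    n = len(A)
    x = list(x0)
    for _ in range(steps):
        dx = [sum(A[i][j] * (x[j] - x[i]) for j in range(n)) for i in range(n)]
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return x


# Data-based part: a hypothetical 4-node chain 0-1-2-3 stored as an adjacency matrix.
A = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
# Theory-based part: the dynamics predict how activity injected at node 0 spreads.
print([round(v, 3) for v in simulate_diffusion(A, [1.0, 0.0, 0.0, 0.0])])  # [0.25, 0.25, 0.25, 0.25]
```

For a symmetric, connected graph these dynamics conserve total activity, so the model predicts that every node converges to the mean of the initial state.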

- Data-based models are more biologically realistic but have fewer claims regarding mechanism or dynamics compared to theory-based models.

- E.g. Data-based models include the 305 structural connections among 32 visual areas in the macaque, the C. elegans structural connectome, and other temporal, multilayer, multiplex, and annotated graphs.

- E.g. Theory-based models include Hodgkin-Huxley, Izhikevich, and Rulkov neuron models, neural mass models, and dynamic generative models.

- Each extreme of this dimension has advantages and disadvantages, and intermediate models try to combine the advantages of both.

- E.g. Defining models where the parameters and functions are specified by data.

- In general, models distributed along this dimension are synergistic because data representations inform theories, and theories produce predictions that can be tested, generating new data representations.

- From biophysical realism to functional phenomenology

- Biophysically realistic network models include realistic elements such as neurons as nodes and axonal projections as edges.

- E.g. C. elegans structural connectome.

- These models can also include accurate descriptions of developmental, regenerative, or experience-dependent changes in neuronal morphology and projections.

- In contrast, functional phenomenological models include nodes and edges that don’t necessarily have exact physical counterparts.

- E.g. Functional connectivity uses correlations as edges.
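
A sketch of how correlation-based (functional) edges are built, assuming synthetic time series for three hypothetical regions: regions 0 and 1 share a common driver, and region 2 is independent. Nothing in this toy links the regions physically, which is why such edges carry informational rather than physical meaning.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def functional_connectivity(series):
    """Edge (i, j) = correlation between the time series of regions i and j."""
    return [[pearson(a, b) for b in series] for a in series]


rng = random.Random(1)
T = 500  # number of time points
driver = [rng.gauss(0, 1) for _ in range(T)]  # hypothetical shared input
series = [
    [d + 0.5 * rng.gauss(0, 1) for d in driver],  # region 0
    [d + 0.5 * rng.gauss(0, 1) for d in driver],  # region 1
    [rng.gauss(0, 1) for _ in range(T)],          # region 2, independent
]
fc = functional_connectivity(series)
print([[round(v, 2) for v in row] for row in fc])
```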

- This dimension is important because it determines whether a particular model can be used to infer the functional capacities of realistic structures or the structural demands of certain functions.

- In functional phenomenological models, edges have informational rather than physical meanings.

- E.g. Synchronization, phase locking, coherence, and correlation.

- These studies often focus more on understanding the brain as an information processing system than on understanding its specific physical instantiation.

- For biophysically realistic models, incorporating rich empirical observations yields concrete models, but these can be computationally expensive and sometimes difficult to interpret because of the many parameters required to describe the network structure.

- A structural connection inferred from a physical measurement should be interpreted differently from a functional connection that’s inferred from statistical similarities in time series data.

- From elementary descriptions to coarse-grained approximations

- For some questions, nodes and edges take on natural elementary forms; for others, they are coarse-grained.

- E.g. In physics, quarks can be thought of as a wave function or as a spherical mass. The former description is useful for quantum mechanics while the latter is useful for classical mechanics.

- This dimension is important because elementary descriptions seek to understand how structure and function are related, while coarse-grained descriptions seek to understand emergent network properties.

- Many common network models are based on the neuron doctrine with neurons and their synaptic connections.

- In contrast, coarse-grained models are based on simplified descriptions of ensembles of smaller units.

- E.g. Neural ensemble dynamics and neural mass models.

- Elementary models are often most useful for understanding neural codes and network function at the cellular level, while coarse-grained models are useful for understanding population and ensemble codes.

- The three dimensions of network models describe a 3D space where many different network models exist.

- Are particular parts of this volume more or less well studied?

- We find that the least well-studied region of this volume contains models that are first-principles theories of functional phenomenology at the elementary level of description.

- It isn’t necessary for any one model in this 3D space to provide the same insights as any other model.

- E.g. A biophysical model of synaptic transmission in the dentate gyrus isn’t likely to provide direct insight into the functional phenomenology of the default-mode network.

- Assessing the validity and effectiveness of a particular network model can be challenging because of the diversity of goals and uses.

- We propose a classification system for validating network models according to their different goals and domains.

- Descriptive validity

- Does the model resemble the base system?

- This validity is often used in animal models by comparing different species to each other and to humans.

- How well does the structure of the model match the anatomical and/or functional data that it represents?

- E.g. Network models where edges are assigned continuous weights representing anatomical connections have more descriptive validity than edges assigned binary weights.

- One difficulty is identifying the appropriate level of complexity that should be modelled.

- Another difficulty is that individual variability creates uncertainty on whether network features are signal or noise.

- In general, little is known about the principles that govern individual differences in neural connectivity patterns in organisms with central nervous systems.

- However, network structure and function do differ across people and these differences are associated with cognitive functions and symptom severity for certain diseases.

- Network dynamics also show individual differences.

- Explanatory validity

- This category focuses on developing statistical tests to support conclusions drawn from the use of the model.

- E.g. A network model of the brain is considered to have explanatory validity if its architecture can be justified in terms of brain data and if it can then be used to test for causal relationships on the basis of that architecture.

- Explanatory validity requires an assessment of both a model’s architecture and its ability to test for causal relationships.

- These two criteria can be tested using statistical model-selection approaches.

- Predictive validity

- A statistical model is predictive if there’s a correlation between the output from perturbing the model and the output from perturbing the organism.

- E.g. Adding, removing, strengthening, or weakening a node or edge in the network should affect the model in a way that predicts the same outcome to the same perturbation in the organism.
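
Predictive validity can be illustrated with a virtual lesion: remove a node from the model, measure the change in some output, and compare it with the effect of the corresponding perturbation in the organism. A sketch, assuming a hypothetical five-node network and global efficiency (mean inverse shortest-path length) as the model output:

```python
from collections import deque


def global_efficiency(graph):
    """Mean of 1/d(i, j) over ordered node pairs; unreachable pairs contribute 0."""
    n = len(graph)
    if n < 2:
        return 0.0
    total = 0.0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from source
            node = queue.popleft()
            for nbr in graph[node]:
                if nbr not in dist:
                    dist[nbr] = dist[node] + 1
                    queue.append(nbr)
        total += sum(1 / d for target, d in dist.items() if target != source)
    return total / (n * (n - 1))


def remove_node(graph, node):
    """Virtual lesion: copy of the graph with one node and all its edges deleted."""
    return {u: nbrs - {node} for u, nbrs in graph.items() if u != node}


# Hypothetical network: node 0 is a hub; nodes 1 and 2 are also linked to each other.
graph = {0: {1, 2, 3, 4}, 1: {0, 2}, 2: {0, 1}, 3: {0}, 4: {0}}
baseline = global_efficiency(graph)  # 0.75
predicted = {node: global_efficiency(remove_node(graph, node)) for node in graph}
print(f"baseline {baseline:.2f}; after hub lesion {predicted[0]:.2f}; "
      f"after node-3 lesion {predicted[3]:.2f}")
```

Removing the hub drops efficiency from 0.75 to about 0.17, whereas removing peripheral node 3 leaves the rest of the network well connected. That prediction only counts toward predictive validity if a matching lesion in the real system produces a matching outcome.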

- Building models with predictive validity typically comes after establishing descriptive and explanatory validity, so such models are still new to neuroscience.

- With the multitude of model and validity types, it’s important that investigators clearly specify their study’s goals because insights drawn from one type of model can be different from another type of model.

- Network neuroscience’s strength is that it has built many descriptive models, but it still lacks explanatory and predictive models.

- Models designed to explain are assessed according to goodness of fit but can be overfit to the data.

- Models designed to predict are assessed according to their ability to generalize.

Sleep Is for Forgetting

- One possible function of sleep is to erase and forget information built up throughout the day that would clutter the synaptic networks that define us.

- This paper discusses and illustrates the importance of forgetting for development, for memory integration and updating, and for resetting sensory-motor synapses after intense use.

- Sleep could serve this unique forgetting function for memory circuits within reach of the locus coeruleus (LC) and those formed and governed outside its noradrenergic net.

- Review of rapid eye movement (REM) sleep, transition-to-REM (TR) sleep, and slow-wave sleep (SWS).

- Forgetting to avoid saturation

- Sleep protects memory from gradual degradation over time.

- However, the type of forgetting discussed here isn’t passive degradation but rather the active and targeted erasure of synapses.

- It’s been hypothesized that REM sleep was for forgetting useless information learned throughout the day that, if not eliminated, would saturate the memory synaptic network with junk.

- The type of forgetting discussed here also isn’t the global synaptic weight downscaling of the synaptic homeostasis hypothesis (SHY).

- Arguments of this paper

- It’s the targeted erasure of synapses that’s unique to sleep.

- This targeted forgetting is necessary for efficient learning.

- Deficits in this process may underlie various kinds of intellectual disabilities and mental health problems.

- Hippocampal activity for forgetting during REM sleep

- One way to test if sleep is for forgetting or for remembering is to test the activity of hippocampal neurons during sleep.

- The hippocampus forms initial associative memories and temporarily stores them until they’re consolidated to the neocortex.

- It’s logical then that the hippocampal network of weighted synapses be recycled for future learning.

- Review of place cells and long-term potentiation (LTP).

- Place cells are most active at the peaks of theta frequency (5-10 Hz) local field potential patterns during active waking.

- Activity during the positive phase of the theta rhythm (peak) induced LTP, while stimulation in the opposite phase, the theta trough, induced a decrease in synaptic efficacy.
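
The phase dependence described above can be summarized with a toy rule. The cosine form and the learning rate are hypothetical choices made for illustration; the notes only state that activity at the theta peak induces LTP and activity at the trough weakens synapses.

```python
import math


def phase_dependent_change(spike_phases, eta=0.01):
    """Net weight change for spikes at the given theta phases (radians).

    Hypothetical rule: phase 0 is the theta peak and pi the trough; each spike adds
    eta * cos(phase), so peak firing potentiates and trough firing depotentiates.
    """
    return sum(eta * math.cos(phase) for phase in spike_phases)


novel_env = [0.0, 0.3, -0.3, 0.5]  # place-cell spikes clustered near the peak
familiar_env = [math.pi, math.pi - 0.3, math.pi + 0.4]  # spikes near the trough
print(phase_dependent_change(novel_env) > 0, phase_dependent_change(familiar_env) < 0)  # True True
```

Under this rule, the peak-to-trough shift of place-cell firing during REM sleep flips the net effect from strengthening to weakening the same synapses.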

- We found that once a place becomes familiar to the animal, the place cells reversed their primary firing phase with respect to local theta oscillations during REM sleep.

- The time course over which place cells switched from the novel (peak) firing pattern to the familiar (trough) firing pattern in REM sleep matched the time course of memory consolidation from the hippocampus to the neocortex.

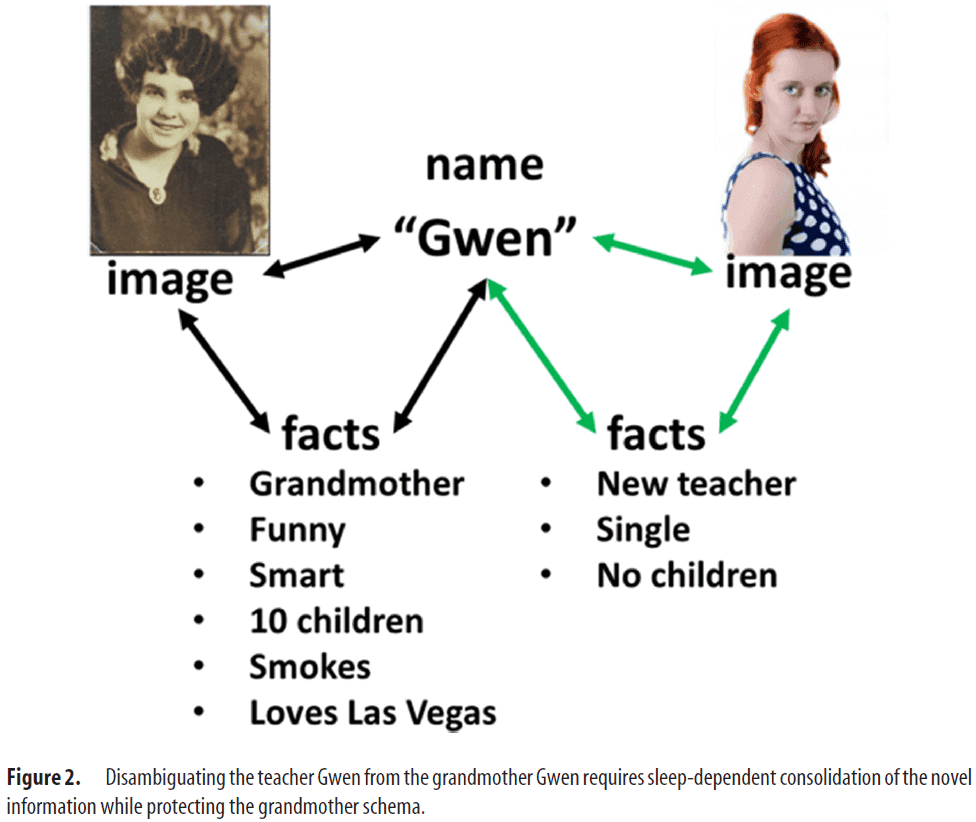

- Forgetting is necessary to incorporate novel information into established schema

- Long-term depression (LTD), the reverse of LTP, is also induced by learning.

- The hypothesis that REM sleep is unique for forgetting predicts that any learning that requires depotentiation would be compromised by its disturbance.

- One type of learning that requires forgetting is reversal learning.

- E.g. Learning that Santa isn’t real.

- If LTD didn’t happen to at least some of the synapses encoding the original information, then we would have redundant and conflicting information during memory recall, making us confused as to what’s true and what’s false.

- Targeted forgetting is unique to sleep

- The theta phase specificity of experience-dependent reactivation in the hippocampus revealed an elegant sleep-dependent memory function.

- Why would such an important function as rewiring a memory schema not occur during waking?

- One line of research suggests that the LTD step in the rewiring process is only reliably induced in the absence of norepinephrine (NE).

- NE blocks depotentiation and enhances LTP.

- E.g. Stimulating the source of NE to the forebrain, the LC, or direct application of NE through intracerebroventricular infusion enhances and prolongs LTP.

- Sustained LC silence normally only occurs during sleep.

- E.g. NE neurons in the LC are only suppressed during REM sleep and during the transition-to-REM (TR; stage 2 in human sleep).

- REM sleep and TR are perhaps the only times when synaptic circuits within LC’s reach could be refreshed and reset from the day’s relentless accumulation of connectivity.

- Perhaps the TR state is so short (30-60 s in humans) precisely because it’s such a powerful time for bidirectional plasticity.

- No notes on NE bursts to terminate sleep spindles.

- The TR state, when the hippocampus and cortex uniquely synchronize during sleep spindles to transfer memories from the hippocampus to neocortex, is a vulnerable period when memories can be modified.

- Synapse elimination is essential in every developing circuit with experience-dependent refinement.

- E.g. Muscle innervation, imprinting, visual cortex development.

- Interestingly, REM sleep is especially abundant during early development: at birth, half or more of our sleep time is REM sleep, compared to less than 20% in adults.

- In juvenile songbirds learning to sing, studies found that sleep reduced the song complexity built up during the day, so that the noise from the day before was gone.

- In imprinting, learning the parent’s features was followed by immediate and impressive increases in REM sleep.

- Electrophysiological data is also consistent with the idea that without proper REM and TR sleep, neurons remain overconnected in the imprinting circuitry.

- If pruning fails, the synapses encoding alternative features remain and the animal remains open to imprinting on features of other individuals.

- Thus, imprinting shows how important targeted forgetting is for survival.

- Both REM and TR sleep rise during intensive learning periods.

- Sleep also serves a critical role in resetting the synaptic weights of primary sensory areas undergoing continuous amplification during waking.

- Many studies show that staying awake during the time from training to testing interferes with memory performance.

- Perhaps the memory degradation induced by staying awake for a long time is actually due to a buildup of interference rather than slow forgetting due to synaptic weakening.

- E.g. Slowly saturating circuits would result in a loss of specificity and clarity of information traces.

- Sleep is a protected time away from waking sensory interference to encode memories and to raise important memories above noise.

- E.g. In a study of experienced bicycle riders trained to ride a bicycle with reversed handles, subjects who slept after training were more likely than those who didn’t to forget the training, erasing their sensorimotor learning gains.

- The authors of this study interpret their findings to mean that REM and TR sleep spindles might protect everyday needed skills like riding a bicycle by forgetting the interfering and irrelevant material.

- When memory reactivation occurs during sleep spindles without the ability to depotentiate synapses, circuits requiring reconsolidation become entangled.

- E.g. Without NE, the synapses linking the name “Gwen” to the memories of two different people would be at risk of being confused.

- Thus, NE preserves the memory network associated with a preexisting memory while allowing the network to learn and consolidate new memories.

- The absence of serotonin during REM sleep may be as important as the absence of NE in targeted forgetting.

- No notes on how serotonin may strengthen distal inputs in hippocampal neurons.

- A strong line of research findings indicates that NREM sleep serves to reactivate prior waking memory circuits to strengthen them.

- No notes on SWS.

- To summarize, sleep is characterized by a loss of norepinephrine and serotonin that uniquely allows for forgetting or depotentiation, specifically in activated circuits targeted by the LC.

- No notes on how this affects various pathological diseases.

- Unlike its role in remembering, sleep’s role in forgetting seems to be unique: no other state can substitute for it.

- However, there’s no behavioral data relating synaptic depotentiation to loss of memory and the theta peak-to-trough transition doesn’t always weaken memories.

- It’s also difficult to say that a memory has been forgotten as a memory trace can only be inferred from its retrieval and expression in behavior. The only proof of a memory is recalling it.

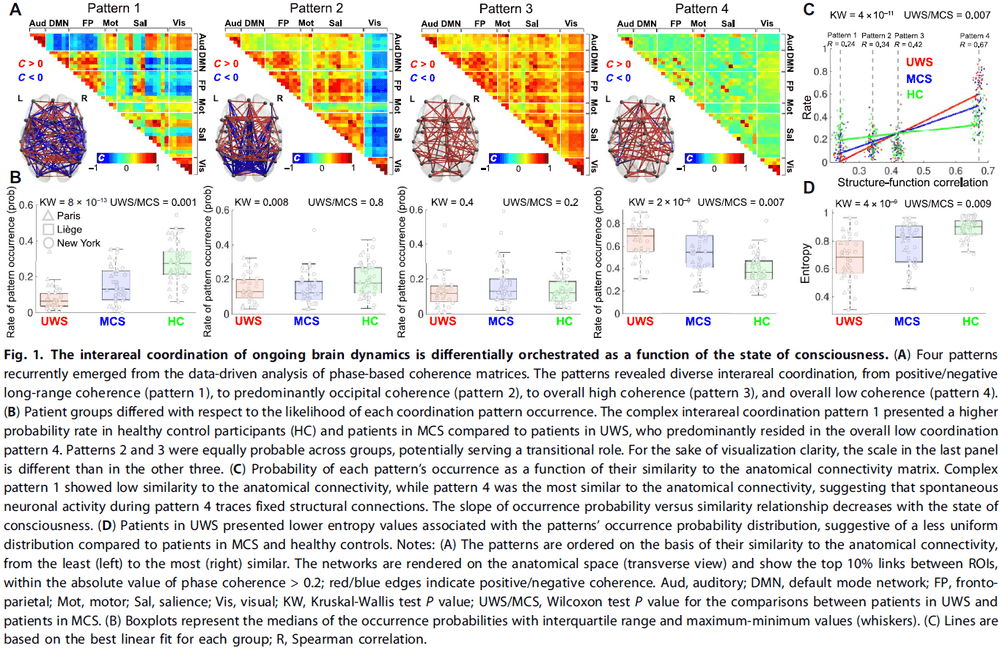

Human consciousness is supported by dynamic complex patterns of brain signal coordination

- All states of lost consciousness, whether from sleep, anesthesia, or brain damage, share a common feature: the absence of reported subjective experience.

- Finding reliable markers indicating the presence or absence of consciousness represents an unsolved challenge in neuroscience.

- We argue that consciousness has specific characteristics that are based on the temporal dynamics of ongoing brain activity and its coordination over distant cortical regions.

- E.g. Neural signals combine, dissolve, reconfigure, and recombine over time, allowing for perception, emotion, and cognition to happen.

- Brain dynamics can be characterized by the presence of complex activity patterns, which can’t be completely attributed to background noise.

- fMRI data shows that during normal wakefulness, the brain spontaneously generates a dynamic series of constantly changing activity and connectivity between brain regions.

- This suggests that consciousness could be found in temporally evolving dynamic processes seen in normal wakefulness.

- Furthermore, investigating spontaneous fMRI dynamic connectivity in different sleep stages and in anesthesia indicates that during physiologically reversible unconscious states, long-range correlations are disrupted in both space and time, anticorrelated cortical states disappear, and dynamic explorations are limited to specific and rigid functional configurations tied to anatomical connectivity.

- Conversely, conscious wakefulness is characterized by global integration, dynamic exploration of rich and flexible functional brain configurations, and the appearance of anticorrelations between the activity of different brain regions.

- Review of the Global Neuronal Workspace (GNW) theory that states that different streams of information in the brain compete for ignition in a widespread network of regions.

- The changes in brain connectivity dynamics associated with pathological unconsciousness after severe brain injury remain unknown.

- So far, we’ve inferred consciousness using active mental imagery neuroimaging paradigms and by assessing the complexity of evoked and spontaneous brain activity.

- However, we highlight the need to determine a common set of features capable of accounting for the capacity to sustain conscious experience.

- The goal of this paper is to determine whether the dynamics of brain-wide coordination could provide such a set of common features.

- No notes on the data and detailed method.

- The coordination between the continuous fMRI time series was estimated by phase synchronization.

- Phase synchronization: determines the relative timing of the phases of two signals during each waveform cycle.
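
A sketch of a phase-based coherence measure: extract each signal's instantaneous phase from its analytic signal (the Hilbert-transform construction), then take cos(θ_a - θ_b) at each time point, which is 1 for in-phase and -1 for antiphase signals. This is a generic illustration; the paper's exact preprocessing isn't covered in these notes, and real BOLD data would normally be band-pass filtered first so that a phase is well defined.

```python
import cmath
import math


def analytic_signal(x):
    """Analytic signal via a direct DFT (the Hilbert-transform construction); O(n^2)."""
    n = len(x)
    spectrum = [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    # Keep DC (and Nyquist when n is even), double positive frequencies,
    # and zero negative frequencies.
    gain = [0.0] * n
    gain[0] = 1.0
    for k in range(1, (n + 1) // 2):
        gain[k] = 2.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
    return [sum(spectrum[k] * gain[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def instantaneous_phase(x):
    return [cmath.phase(z) for z in analytic_signal(x)]


def phase_coherence(x, y):
    """cos(phase_x - phase_y) at each time point: 1 in phase, 0 quarter-cycle, -1 antiphase."""
    return [math.cos(a - b) for a, b in zip(instantaneous_phase(x), instantaneous_phase(y))]


# Two hypothetical regions oscillating at the same frequency, a quarter cycle apart.
n = 64
region_a = [math.cos(2 * math.pi * 4 * t / n) for t in range(n)]
region_b = [math.cos(2 * math.pi * 4 * t / n + math.pi / 2) for t in range(n)]
coherence = phase_coherence(region_a, region_b)  # values close to 0
```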

- We clustered the phase-based coherence observations in a data-driven way, leading to a discrete set of brain-wide coordination patterns and their corresponding rates of occurrence in each group.

- The analysis consistently revealed four distinguishable patterns.
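
The data-driven clustering step can be sketched with plain k-means (Lloyd's algorithm) and a deterministic farthest-point initialization. Assumptions: each observation is the flattened upper triangle of a phase-coherence matrix at one time point, the values are hypothetical, and k = 2 instead of the four patterns the study found. Occurrence rates are then the fraction of observations assigned to each cluster.

```python
import math


def farthest_point_init(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add the farthest one."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(points, k, iters=100):
    """Lloyd's algorithm; returns (centroids, labels)."""
    centroids = farthest_point_init(points, k)
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each observation joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [sum(vals) / len(members) for vals in zip(*members)]
    return centroids, labels


def occurrence_rates(labels, k):
    """Fraction of observations assigned to each pattern."""
    return [labels.count(c) / len(labels) for c in range(k)]


# Hypothetical observations: the upper triangle of a 3-region phase-coherence
# matrix at each time point (3 region pairs -> 3 values per observation).
observations = [
    [0.90, 0.85, 0.80], [0.88, 0.90, 0.82], [0.92, 0.80, 0.86],  # high coordination
    [0.05, 0.10, 0.00], [0.00, 0.08, 0.04], [0.10, 0.02, 0.06],  # low coordination
    [0.07, 0.05, 0.03],
]
centroids, labels = kmeans(observations, k=2)
print(labels, occurrence_rates(labels, k=2))
```

Computing these rates separately for each group is what supports comparisons such as a pattern being more likely in one patient group than another.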

- Four complex patterns

- Pattern 1 showed high complexity and was prevalent in healthy participants and in minimally conscious state (MCS) patients.

- Pattern 4, in contrast, showed low interareal coordination and was more likely to occur in unresponsive wakefulness syndrome (UWS) patients compared to MCS patients.

- Patterns 2 and 3 were equally probable across all groups and conditions.

- To investigate the relationships between brain coordination dynamics and a representative network of anatomical connections, we also acquired diffusion spectrum imaging (DSI) data for each participant.

- We found that pattern 1 had low similarity to the anatomical connectivity, whereas pattern 4 was the most similar.

- Individuals with higher levels of consciousness were more likely not only to reside in pattern 1 but also to transition between this pattern and patterns 2 and 3.

- The brain dynamics of UWS patients tended to avoid this exploration of complex coordination patterns and preferred to stay in the less complex pattern 4.

- Our results are in line with previous findings in animals.

- E.g. The brain activity of anesthetized nonhuman primates resided mostly in a pattern of low connectivity resembling anatomy, which was sustained for a longer period of time compared to more complex patterns.

- We showed that network properties, such as modularity, integration, distance, and efficiency, increased with the participants’ conscious state.

- Patterns 2 and 3 weren’t preferred by any group and could represent transitional states.

- We didn’t aim to track the moment-to-moment contents of conscious experience but instead aimed to identify brain-wide dynamic networks supporting different global states of consciousness.

- Pattern 4 was visited by healthy controls even under typical wakeful conditions.

- One explanation for this is that the flow of conscious cognition may be separated by periods of absent or reduced effortful information processing.

- E.g. Mind blanks.

- It didn’t escape us that the complex pattern 1 did sporadically appear in the group of unresponsive patients.

Brain Work and Brain Imaging

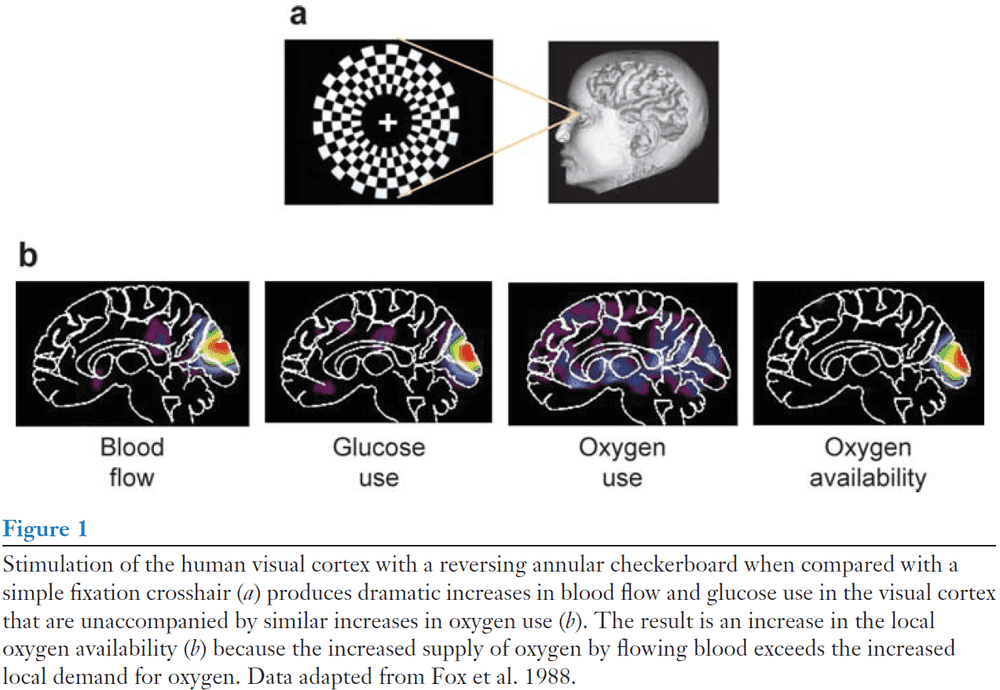

- The signal used by both positron emission tomography (PET) and magnetic resonance imaging (MRI) is based on changes in local circulation and metabolism (brain work).

- Our understanding of cell biology has improved in the past decade, and our focus has shifted from treating the output of neurons as most relevant to treating their input as most relevant.

- The introduction of PET and MRI presented researchers with an unprecedented opportunity to examine the neurobiological correlates of human behaviors.

- The result was the creation of a new scientific subfield: cognitive neuroscience.

- Another recent shift has been the establishment of research-imaging centers with expensive MRI equipment devoted exclusively to research.

- This breaks with the tradition of doing research on clinical equipment after hospital hours.

- One of the most important questions in neuroimaging, and a frequent criticism of it, is how to relate neuroimaging measurements to the biology and neurophysiology of brain cells and their microvasculature.

- Given that our knowledge is incomplete but rapidly expanding, we recognize that some of the points made in this paper are speculative and controversial.

- Evoked functional activity

- Behaviorally evoked changes in blood flow are at the heart of functional brain imaging signals.

- That mental activity evokes changes in regional brain circulation was first noticed by the Italian physiologist Mosso in 1878, who observed that a subject with a bony defect in his skull had faster brain pulsations in the right prefrontal cortex when performing calculations.

- Despite the centrality of blood-flow changes to functional imaging signals, the complexity of the relationship between blood flow and metabolism has only recently become more appreciated.

- One unresolved issue is why blood flow changes with brain activity.

- The intuitive notion that blood flow changes to serve the variable energy demands of the brain now appears oversimplified if not incorrect.

- What behavior of neurons accounts for the blood-flow changes we observe in neuroimaging?

- For many, the answer has generally been the spiking activity, or output, of neurons.

- Logothetis and colleagues showed that the fMRI blood oxygen level-dependent (BOLD) measurement correlated best with local field potentials (LFPs) and not with unit activity.

- They concluded that fMRI BOLD signals reflect changes in LFPs and not changes in spikes.

- However, some have questioned the modeling in Logothetis et al.’s work and argued that an exclusive relationship between BOLD and LFPs may not exist.

- Is it the input to neurons (reflected in the LFPs) or the output from neurons (spiking activity) that drives the generation of functional brain imaging signals?

- We believe the evidence favors a dominant role for the inputs to neurons (LFPs) in generating functional imaging signals.

- Studies show that local tissue blood flow can be dissociated dramatically and convincingly from the spiking activity of neurons, whereas blood flow measured in the same experiments is consistently correlated with the LFPs.

- In our view, it’s incorrect to propose using functional neuroimaging signals as simple surrogate measures of the spiking activity of neurons.

- Instead, the input into a neuronal assembly may or may not correlate with its output.

- Moving forward, it’s become increasingly apparent that astrocytes also play a critical role in brain metabolism.

- The brain depends on a continuous supply of oxygen delivered by flowing blood.

- E.g. When blood flow suddenly ceases, approximately 15s afterwards consciousness is lost.

- From this evidence, it was almost universally believed that blood-flow increases associated with cellular-activity increases must be related to the need for more oxygen.

- However, it came as a surprise that activity-induced increases in blood flow aren’t accompanied by proportionate increases in oxygen consumption.

- Although oxygen consumption does increase, this increase is much less than the increase in blood flow and glucose consumption.
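The mismatch between flow and oxygen consumption can be made concrete with the Fick principle, a standard relation not spelled out in these notes: CMRO2 = CBF × CaO2 × OEF, where OEF is the oxygen extraction fraction. This sketch assumes arterial oxygen content stays constant and uses the roughly 40% resting extraction quoted in these notes as the baseline. The flow (+50%) and oxygen-consumption (+5%) changes are hypothetical values chosen only for illustration.

```python
def oef_after_change(baseline_oef, flow_change, cmro2_change):
    """Oxygen extraction fraction (OEF) after fractional changes in blood flow and O2 use.

    Fick principle: CMRO2 = CBF * CaO2 * OEF. Holding arterial O2 content (CaO2)
    fixed, OEF scales by (1 + cmro2_change) / (1 + flow_change).
    """
    return baseline_oef * (1 + cmro2_change) / (1 + flow_change)

baseline = 0.40  # ~40% of delivered oxygen extracted at rest
active = oef_after_change(baseline, flow_change=0.50, cmro2_change=0.05)  # hypothetical changes
print(f"OEF {baseline:.2f} -> {active:.2f}; "
      f"O2 left in venous blood {1 - baseline:.2f} -> {1 - active:.2f}")
```

Because flow rises far more than oxygen use, a smaller fraction of the delivered oxygen is extracted, and blood leaving the region is more oxygenated. That is the change BOLD fMRI detects.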

- Blood flow and oxidative phosphorylation

- Two interesting findings

- Decreasing the oxygen availability in circulating blood by having normal subjects breathe air with reduced oxygen fails to cause a compensatory blood-flow response when brain cellular activity is stimulated.

- Sustained visual stimulation (25 min) is associated with an initial increase in blood flow in excess of oxygen consumption, but over time, oxygen consumption increases as blood flow falls.

- Both findings dissociate blood flow from the demand for oxygen.

- If the brain were to rely on a precise increase in oxygen delivery every time neuronal activity suddenly increased, a change in blood flow wouldn’t be the answer because it’s too slow.

- E.g. After an event, blood flow doesn’t reach its maximum for 4-6 seconds.

- Instead, the brain can extract more oxygen from circulating blood.

- E.g. On average, about 40% of available oxygen is removed from blood passing through the brain.

- However, evidence for this hypothesis is controversial.
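The 4-6 second delay can be illustrated by convolving a brief event with a hemodynamic response function (HRF). This sketch uses a single gamma function whose shape and scale are assumptions chosen so the peak falls at 5 s. Standard fMRI packages typically use a double-gamma HRF with a post-stimulus undershoot, which is omitted here.

```python
import math

def gamma_hrf(t, shape=5.0, scale=1.0):
    """Single-gamma hemodynamic response (unit area); its peak is at shape * scale seconds."""
    if t <= 0:
        return 0.0
    return t ** shape * math.exp(-t / scale) / (math.gamma(shape + 1) * scale ** (shape + 1))

def predicted_response(stimulus, dt=0.1, kernel_length=30.0):
    """Discrete convolution of a sampled stimulus time course with the HRF."""
    kernel = [gamma_hrf(k * dt) for k in range(int(kernel_length / dt))]
    return [
        dt * sum(stimulus[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
        for i in range(len(stimulus))
    ]

dt = 0.1
stimulus = [1.0] + [0.0] * 299  # a brief event at t = 0, sampled every 0.1 s for 30 s
response = predicted_response(stimulus, dt)
peak_time = response.index(max(response)) * dt
print(f"response peaks {peak_time:.1f} s after the event")
```

Even an instantaneous event produces a blood-flow-like response that takes seconds to peak, which is why flow changes are too slow to meet sudden oxygen demand.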

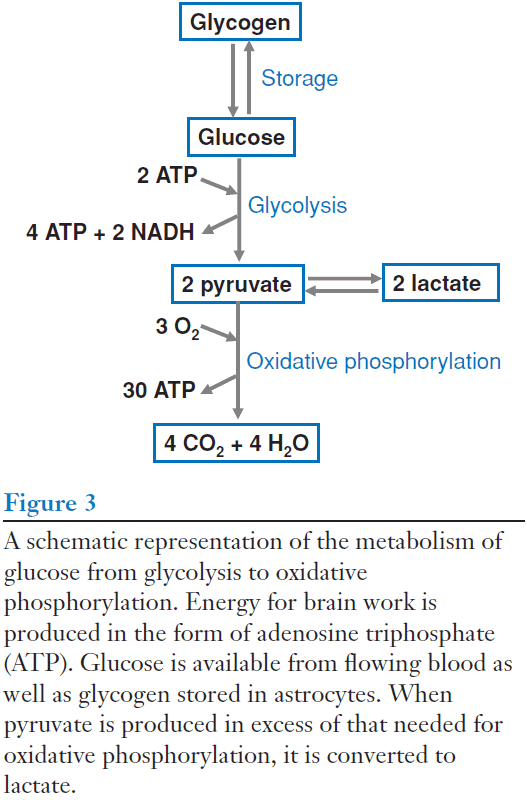

- The vast majority of the energy consumed by the brain is produced by the metabolism of glucose to carbon dioxide and water.

- Review of glycolysis and oxidative phosphorylation.

- Oxidative phosphorylation is more efficient than glycolysis because it produces far more ATP per molecule of glucose, but glycolysis can be used during rapid increases in neuronal activity.

- Glycolysis is faster and can operate without oxygen.
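The efficiency gap is easiest to see as arithmetic. This sketch uses approximate textbook ATP yields per glucose: about 2 from glycolysis alone and roughly 30 when glucose is fully oxidized. These numbers are assumptions, not figures from the paper, and the ATP demand of 600 is arbitrary.

```python
# Approximate net ATP yield per glucose molecule (textbook estimates, used here as assumptions).
ATP_GLYCOLYSIS = 2        # glucose -> 2 pyruvate (then lactate); no oxygen required
ATP_FULL_OXIDATION = 30   # glycolysis + TCA cycle + oxidative phosphorylation (~30-32)

def glucose_needed(atp_demand, oxidized_fraction):
    """Glucose molecules needed to meet atp_demand when oxidized_fraction of glucose is fully oxidized."""
    atp_per_glucose = (oxidized_fraction * ATP_FULL_OXIDATION
                       + (1 - oxidized_fraction) * ATP_GLYCOLYSIS)
    return atp_demand / atp_per_glucose

print(glucose_needed(600, oxidized_fraction=1.0))  # 20.0
print(glucose_needed(600, oxidized_fraction=0.0))  # 300.0
```

Under these assumptions, meeting the same demand by glycolysis alone takes 15 times as much glucose. This is the trade-off described above: glycolysis is fast and oxygen-independent but uses glucose wastefully.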

- No notes on the role of astrocytes in brain metabolism.

- Astrocytes are the only store of glycogen in the brain.

- Current evidence is inconclusive on whether a sustained increase in neuronal activity might establish a new baseline level of oxidative phosphorylation.

- So far, we’ve focused entirely on evoked increases in brain activity; however, these likely represent only a small fraction of the brain’s true functional activity.

- Two interesting findings

- Activity decreases (deactivations)

- Brain imaging work has generally emphasized increases in regional brain activity associated with executing a wide variety of tasks.

- However, we’ve also become aware of task-induced decreases in regional brain activity, which characterize what we call the brain’s default mode network.

- One challenge with interpreting activity decreases is defining a baseline level of activity.

- E.g. Are the changes in functional activity normal, caused by the task, or due to some other cause? Are they merely unaccounted-for activations in the control condition?

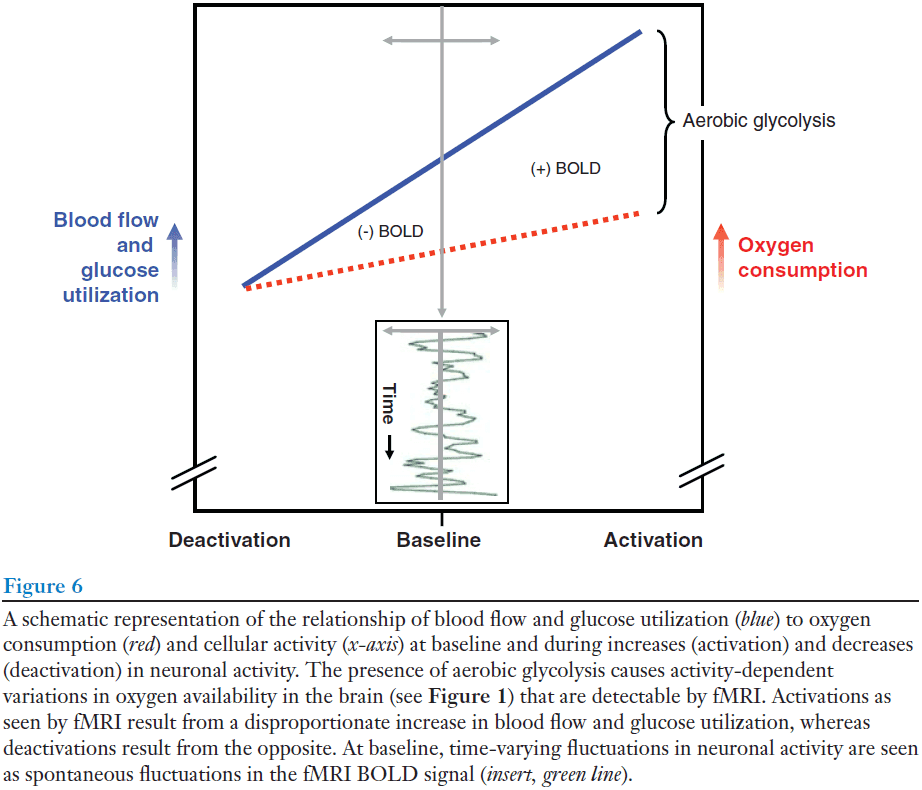

- We define activation, counterintuitively, as an increase in blood oxygen: as neuronal activity increases, regional blood flow increases more than oxygen consumption, so the amount of oxygen remaining in the blood leaving the activated region increases.

- Oxygen extraction fraction (OEF): the ratio of oxygen consumed to oxygen delivered.

- We find the OEF to be remarkably constant across the brain.

- E.g. In white matter, oxygen consumption is only 25% of that in gray matter, but the ratio of oxygen consumed to oxygen delivered is the same as in gray matter.

- Under this definition, truly activated areas should exhibit a significant regional decrease in OEF compared with other brain areas.

- We found no area that exhibited a decrease in OEF in the resting state.

- We argue that brains are never at a zero-activity level and that such a view often encourages loose and misleading use of the term “activation”.
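The OEF criterion for a true activation can be sketched as a simple screen that compares each region with the brain-wide median. The 10% tolerance and the regional values are hypothetical, and `flag_low_oef` is an illustrative helper. A real analysis would apply a statistical test to PET-derived OEF maps rather than a fixed cut-off.

```python
from statistics import median

def flag_low_oef(regional_oef, tolerance=0.10):
    """Return regions whose OEF is more than `tolerance` (fractionally) below the brain-wide median.

    A clearly lowered OEF means blood flow exceeds local oxygen use, i.e. a candidate activation.
    """
    baseline = median(regional_oef.values())
    return [region for region, oef in regional_oef.items() if oef < (1 - tolerance) * baseline]

# Hypothetical regional OEF values.
resting = {"visual": 0.41, "motor": 0.40, "prefrontal": 0.39, "white_matter": 0.40}
task = dict(resting, visual=0.30)
print(flag_low_oef(resting))  # []
print(flag_low_oef(task))     # ['visual']
```

With a near-uniform resting OEF nothing is flagged, matching the observation that no region shows a decreased OEF at rest.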

- We can monitor activity increases (activations) with fMRI BOLD contrast because when blood flow increases more than oxygen consumption, this creates a local increase in the amount of oxygenated hemoglobin.

- This increase in blood flow is accompanied by an increase in aerobic glycolysis; thus, aerobic glycolysis provides a window through which we can observe changes in brain activity.
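Aerobic glycolysis is often summarized by the oxygen-glucose index (OGI), the molar ratio of oxygen to glucose consumed. Full oxidation uses 6 O2 per glucose, so an OGI below 6 means some glucose is not oxidized. The OGI is not mentioned in these notes. This sketch assumes a resting OGI of 5.5, hypothetical task-related changes (oxygen +5%, glucose +50%), and that all non-oxidized glucose goes through glycolysis.

```python
FULL_OXIDATION_OGI = 6.0  # C6H12O6 + 6 O2 -> 6 CO2 + 6 H2O

def oxygen_glucose_index(cmro2, cmrglc):
    """Molar ratio of oxygen consumed to glucose consumed."""
    return cmro2 / cmrglc

def nonoxidized_glucose_fraction(ogi):
    """Share of glucose uptake not fully oxidized, a rough index of aerobic glycolysis."""
    return 1.0 - ogi / FULL_OXIDATION_OGI

rest_ogi = oxygen_glucose_index(cmro2=5.5, cmrglc=1.0)               # assumed resting ratio
task_ogi = oxygen_glucose_index(cmro2=5.5 * 1.05, cmrglc=1.0 * 1.50)  # hypothetical: O2 +5%, glucose +50%
print(f"rest: OGI {rest_ogi:.2f}, non-oxidized {nonoxidized_glucose_fraction(rest_ogi):.0%}")
print(f"task: OGI {task_ogi:.2f}, non-oxidized {nonoxidized_glucose_fraction(task_ogi):.0%}")
```

When glucose use rises much more than oxygen use, the OGI falls and a larger share of glucose goes through glycolysis alone.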

- Spontaneous functional activity

- Anyone who does fMRI BOLD imaging is aware that unaveraged MRI signals are quite noisy.

- Some of the noise is created by uninteresting sources.

- E.g. Scanner electronics, subject movement, respiration, variations in cardiovascular dynamics.

- However, a considerable fraction of the variance in the BOLD signal appears to reflect fluctuating neuronal activity.

- Correlated spontaneous fluctuations in the fMRI BOLD signal should be considered in the context of the rich neurophysiological literature on coherent neuronal fluctuations or oscillations.

- Oscillations could facilitate the coordination and organization of information processing across spatial and temporal scales.

- Overall cost of brain function

- So far, we’ve approached functional brain imaging from the view that changes in brain function relate to changes in brain energy consumption.

- Now, we cover the actual costs involved in these changes and how they relate to the overall cost of running the brain.

- The amount of energy dedicated to task-evoked regional imaging signals is remarkably small, with rarely more than a 5-10% increase in absolute blood flow as measured by PET.

- It’s become clear that the brain continuously expends a considerable amount of energy in the absence of a particular task.

- What intrinsic/default task consumes such a large amount of the brain’s energy resources?

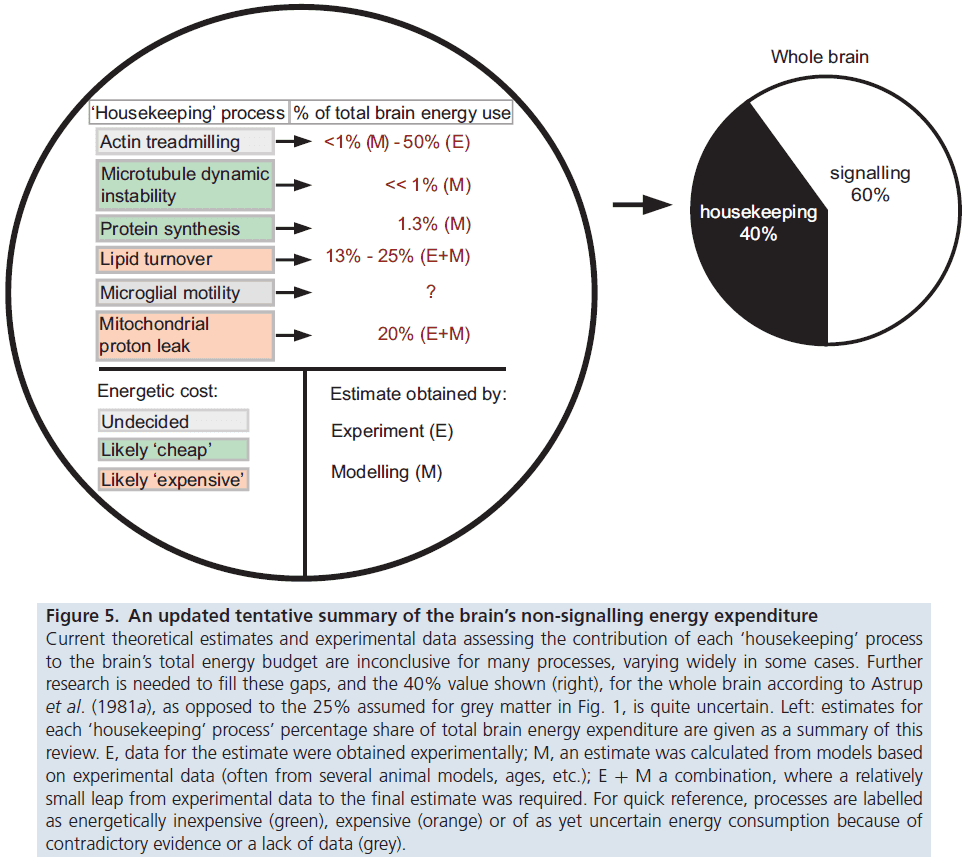

- Evidence suggests that simple housekeeping functions consume a relatively small fraction of the brain’s energy budget.

- E.g. Neuronal repair or protein trafficking.

- Various studies find that neuronal signaling processes consume the majority of the brain’s energy.

- From this energy-cost analysis, it seems reasonable to conclude that intrinsic activity may be as significant as, if not more significant than, evoked activity in terms of overall brain function.
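A back-of-the-envelope calculation shows why evoked activity is a small part of the budget. The sketch takes the upper end of the 5-10% regional blood-flow increase from these notes. It also assumes, hypothetically, that 5% of the brain is activated by a task and that baseline energy use is uniform across regions.

```python
def whole_brain_increase(regional_increase, activated_fraction):
    """Fractional whole-brain cost increase when only part of the brain is 'activated'.

    Uses the regional blood-flow increase as a stand-in for extra energy use, which
    overstates the cost because oxygen consumption rises less than flow.
    """
    return regional_increase * activated_fraction

extra = whole_brain_increase(regional_increase=0.10, activated_fraction=0.05)  # hypothetical 5% of brain
intrinsic_share = 1 / (1 + extra)
print(f"task adds ~{extra:.1%} to the brain's energy use; "
      f"intrinsic activity is ~{intrinsic_share:.1%} of the total")
```

Even with generous assumptions, the task-evoked increment is a fraction of a percent of the total cost, leaving intrinsic activity as the dominant consumer.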

- Although we’ve learned a lot about the neurobiology of functional brain imaging signals, we still don’t know what function moment-to-moment changes in blood flow serve.

- Neither oxygen delivery nor the delivery of extra glucose appears to be a convincing explanation, because adequate reserves of both are available.

- Perhaps the increased blood flow serves to remove waste products such as excess lactate more quickly, or to adjust the ionic balance of the tissue.

- Temperature regulation may also play some role.

- At present, no evidence exists for any of these speculative ideas.

- Unresolved issues

- What function do changes in regional brain blood flow serve when neuronal activity changes?

- Why is glycolysis mainly used to provide energy for the membrane-bound sodium-potassium ATPase in astrocytes and possibly neurons?

- How are the energy-generating resources of astrocytes and neurons coordinated and how does this affect functional brain imaging signals?

- What do inhibitory interneurons contribute to imaging signals?

Highly Nonrandom Features of Synaptic Connectivity in Local Cortical Circuits

- How different is local cortical circuitry from a random network?

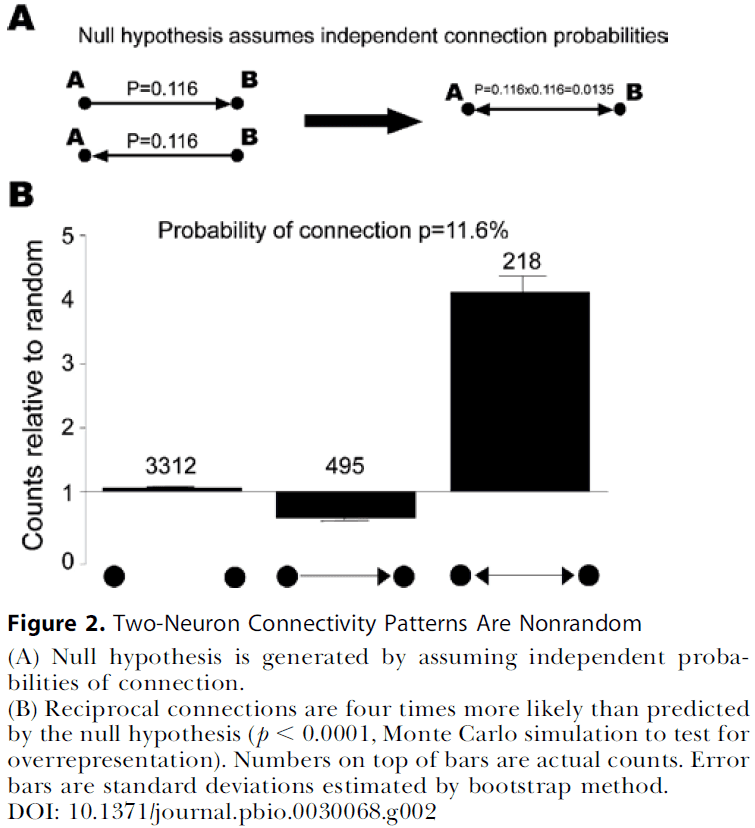

- We probed synaptic connections using whole-cell recordings from layer 5 pyramidal neurons in the rat visual cortex and found several nonrandom features of synaptic connectivity.

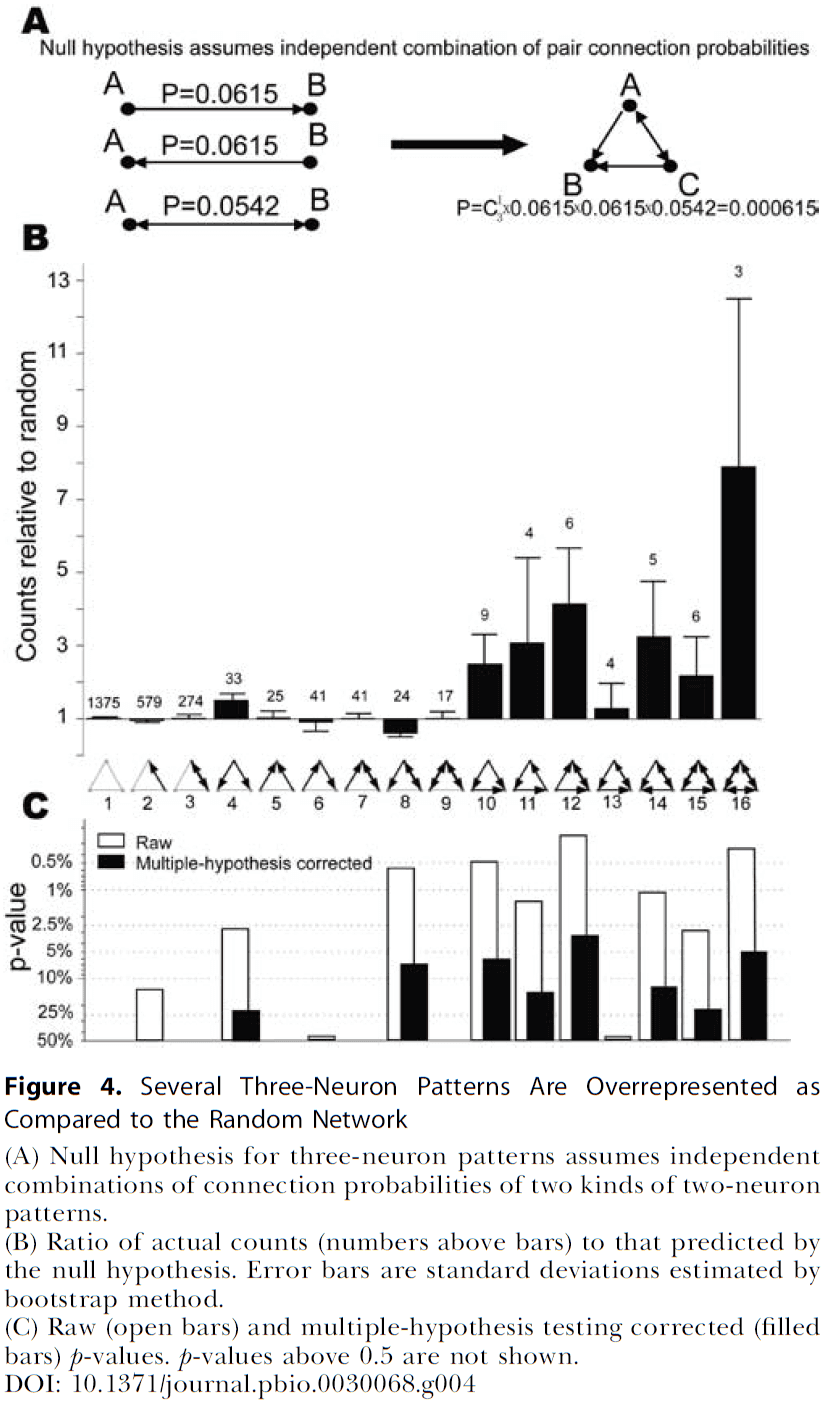

- We confirmed previous reports that bidirectional connections are more common than expected in a random network and that several highly clustered three-neuron connectivity patterns are overrepresented.

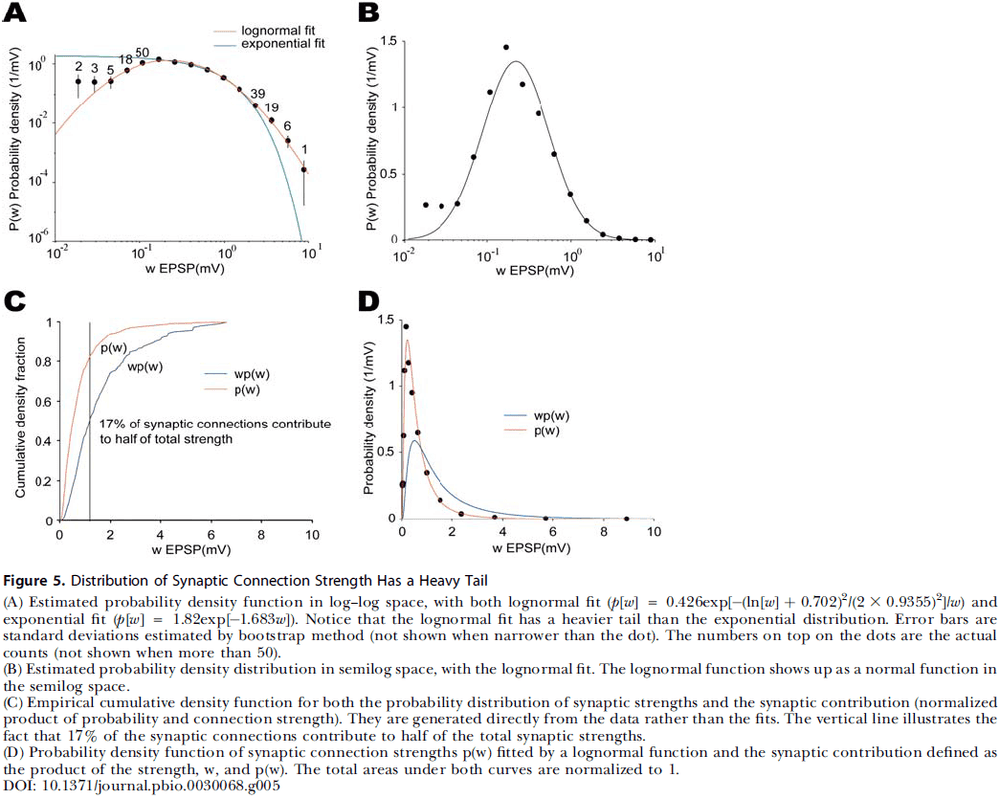

- Analyzing synaptic connection strength as defined by the peak excitatory postsynaptic potential amplitude, we found that the distribution of strength differs significantly from the Poisson distribution and is better fit by a lognormal distribution.

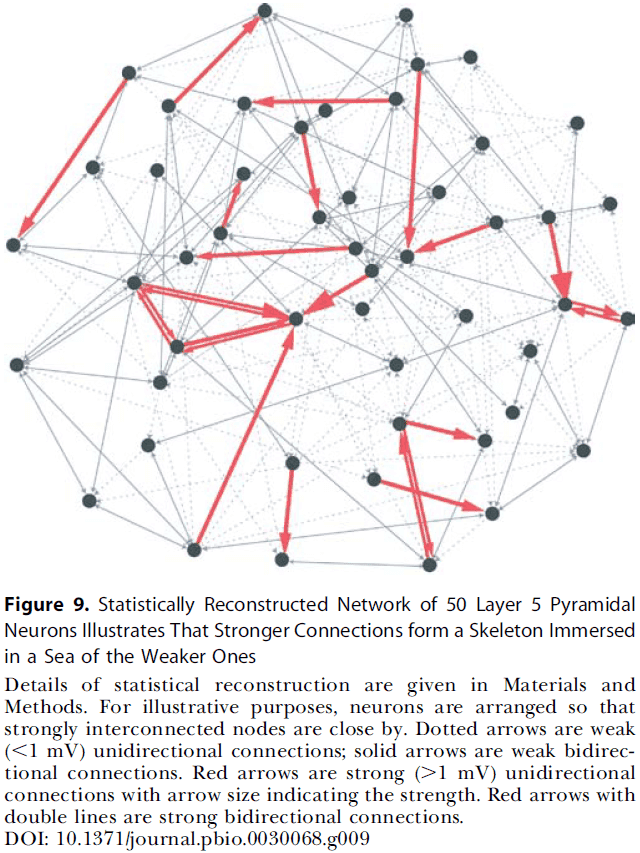

- A lognormal distribution implies that synaptic weight is concentrated among a few synaptic connections.

- Therefore, the local cortical network structure can be viewed as a skeleton of stronger connections in a sea of weaker ones.
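A lognormal fit and the "few strong connections" interpretation can be sketched together. Maximum-likelihood fitting of a lognormal is simply the mean and population standard deviation of the log amplitudes. The sample below is drawn from a lognormal with illustrative parameters, not Song et al.’s EPSP data. `top_share` is a hypothetical helper measuring how much of the total strength the strongest 10% of connections carry.

```python
import math
import random
from statistics import fmean, pstdev

def fit_lognormal(strengths):
    """Maximum-likelihood lognormal fit: mean and population SD of the log-transformed values."""
    logs = [math.log(x) for x in strengths]
    return fmean(logs), pstdev(logs)

def top_share(strengths, top_fraction=0.1):
    """Fraction of the summed strength carried by the strongest top_fraction of connections."""
    ranked = sorted(strengths, reverse=True)
    k = max(1, int(len(ranked) * top_fraction))
    return sum(ranked[:k]) / sum(ranked)

rng = random.Random(0)
# Hypothetical EPSP amplitudes (mV); the lognormal parameters are illustrative only.
epsps = [rng.lognormvariate(-0.7, 0.9) for _ in range(10_000)]
mu, sigma = fit_lognormal(epsps)
print(f"mu={mu:.2f}, sigma={sigma:.2f}, "
      f"top 10% carry {top_share(epsps):.0%} of total strength")
```

For a lognormal with sigma = 0.9, the strongest 10% of connections carry roughly a third of the total strength. This is the "skeleton of stronger connections" picture.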

- A reasonable approach to describing synaptic connectivity, the wiring diagram of the brain, is statistical.

- E.g. Random sampling of connections with multineuron recordings finds that the probability of a connection between neurons is often low, suggesting a sparse network.

- Statistical sampling of connections has so far found that the underlying network isn’t randomly connected.

- Unanswered questions

- Are there nonrandom features in connectivity patterns involving more than two neurons?

- What’s the distribution of synaptic connection strength?

- Are synaptic connection strengths correlated?

- The goal of this paper is to apply a combination of statistical methods to a large dataset from hundreds of simultaneous quadruple whole-cell recordings from the visual cortex of developing rats.

- We found that connection strengths between pyramidal neurons follow a non-Poisson distribution and that the strengths of connections sharing a pre- or postsynaptic neuron are correlated.

- Dataset details

- Recorded from thick tufted layer 5 pyramidal neurons.

- Recorded from 816 quadruple/four-cell groups.

- Rate of connectivity was 11.6% (931 connections out of 8050 possible connections).

- Three classes of neuron pairs

- Unconnected

- Unidirectionally connected

- Bidirectionally connected
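The random-network baseline for these three pair classes follows from the dataset figures above. If each directed connection occurs independently with probability p, a pair is unconnected with probability (1 - p)^2, unidirectional with 2p(1 - p), and bidirectional with p^2. The sketch assumes the 8050 possible connections correspond to 4025 neuron pairs, each tested in both directions. The observed bidirectional count isn't given in these notes, so it isn't used here.

```python
def expected_pair_classes(n_connections, n_possible):
    """Expected counts of unconnected, unidirectional and bidirectional neuron pairs
    if every directed connection occurred independently with the observed probability."""
    p = n_connections / n_possible
    n_pairs = n_possible / 2  # assumption: each pair offers two possible directed connections
    return {
        "p": p,
        "unconnected": n_pairs * (1 - p) ** 2,
        "unidirectional": n_pairs * 2 * p * (1 - p),
        "bidirectional": n_pairs * p ** 2,
    }

expected = expected_pair_classes(931, 8050)
print({name: round(value, 3) for name, value in expected.items()})
```

Dividing an observed bidirectional count by `expected["bidirectional"]` (about 54 pairs under these assumptions) gives the overrepresentation ratio used to argue that reciprocal connections are more common than chance.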