Neuroscience Papers Set 6

By Brian Pho ⋅ April 03, 2022 ⋅ 84 min read ⋅ Papers

The Hippocampal Memory Indexing Theory

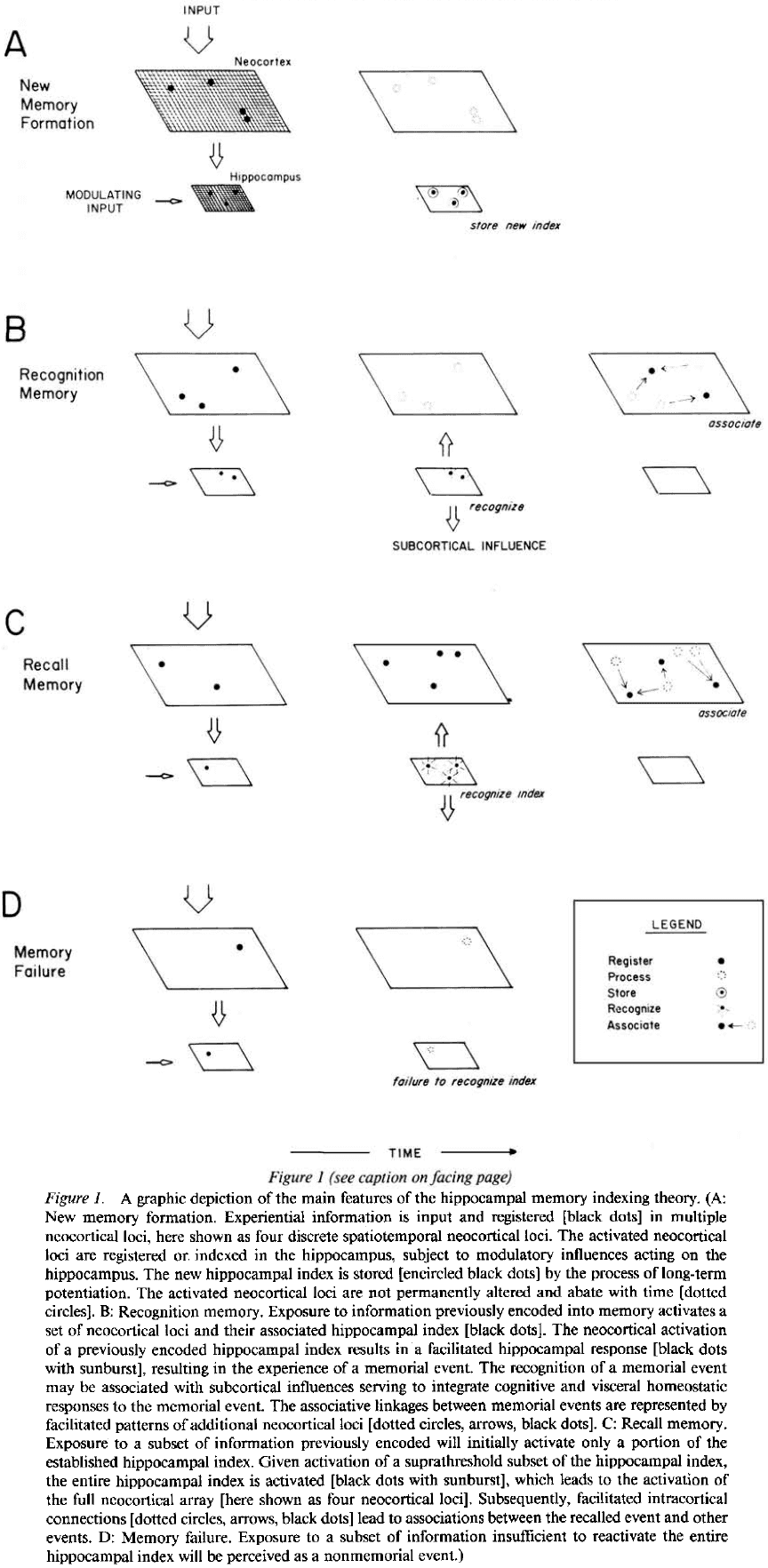

- This paper proposes the theory that the hippocampus forms and retains an index of neocortical areas activated by experienced events.

- The hippocampal index thus represents the unique cortical regions activated by specific events.

- This suggests that reactivation of the stored hippocampal memory index will serve to reactivate the associated unique set of neocortical areas and this results in reexperiencing a memory.

- The hippocampal memory index also implicates the neocortex as the location of long-term memories.

- Long-term potentiation (LTP) is suspected to be involved as a mechanism underlying information storage.

- The brain region with the most pronounced LTP is the hippocampal formation made up of the dentate gyrus, hippocampus, and subiculum.

- This paper is an attempt to develop a plausible model for information storage and retrieval in the brain.

- Hippocampal memory indexing theory

- Asserts that the hippocampus stores a map of locations of other brain regions such as the neocortex.

- Sensory information is processed at various cortical sensory and association areas in assemblies of cortical modules.

- The cortical module forms the basis for the cortical processing of stimulus information relayed to the neocortex by thalamocortical and other pathways.

- The additional processing of this information by ipsilateral association and commissural fibers forms links between stimulus patterns and events, assigning significance or meaning to the incoming stimulus pattern.

- Sensory organs → Thalamocortical sensory systems → Pattern of cortical modules → Hippocampus

- The role of the hippocampus is to store a map/index of the cortical modules activated by experience.

- The hippocampal storage of the spatiotemporal pattern of active cortical modules occurs, at least initially, through LTP at hippocampal synapses.

- Any neocortical module, and any hippocampal index of that module, may participate in numerous memories.

- Only the location and temporal sequence of activated cortical modules are encoded.

- Reactivation of these neocortical modules in the right spatiotemporal sequence will simulate the original experience.

- If a new event feels similar to previous events, we predict that the spatiotemporal index stored in the hippocampus for the new event will match or be very similar to the index for the previous event.

- Once the memory index is established in the hippocampal array, it can further change by repeated activation of the same neocortical-to-hippocampal pathways.

- Alternatively, given no further activity on those specific pathways, the hippocampal index will decay over time due to LTP decaying.

- If only parts or a subset of a previously indexed neocortical array are activated by experience, then only those currently experienced parts of the stored hippocampal memory index will be activated.

- However, if the hippocampal activation exceeds some threshold, then the rest of the hippocampal index will activate and thus, reactivate the entire neocortical array.

- In behavioral terms, if only a subset of the index is activated, we call this recognition memory. If the entire index is activated, we call this recall memory.

- Whereas recognition is driven by external stimuli that are recognized by the hippocampus, in recall, the hippocampus is able to activate an array of cortical modules in the correct spatiotemporal sequence to bring about the reexperiencing of a memory.

- The hippocampus is continually and automatically testing every pattern of cortical activation to determine whether it matches any stored patterns. It tests whether an experience was already experienced in the history of the organism.

- If a match is found, either complete or partial, then a previously experienced event is reactivated.

- Repeated reactivation of a memory reinforces and strengthens the hippocampal index associated with that memory.

- Evidence from human studies supports the ideas

- That the hippocampus isn’t necessary for retrieving old memories.

- That there exists a neocortical mnemonic process that takes over from the hippocampus over an extremely long (three year) time course.

- Over a long time and with continual reactivation, the memory would rely less on the hippocampal index as cortical circuitry takes over.

- The theory also implies that the hippocampus stores every experiential event.

- Studies show this isn’t true and instead that experiential events are modulated by their importance.

- Memory storage and retrieval are a graded process that can be modulated by other agents or processes.

- We propose that the subcortical connectivity of the hippocampus functions to integrate the homeostatic, affective, and visceral aspects of an organism’s response to a recalled event.

- Although it’s not currently possible to do more than speculate regarding the detailed topological relations between the hippocampus and neocortex, we predict that the output of the hippocampus is able to topographically reaccess the neocortical modules whose activity was initially impressed onto the hippocampus.

- The role of the hippocampus is to store only the event and not the associations an event may elicit.

- Predictions

- A functional link exists between the hippocampus and neocortex.

- The indexing theory requires a two-way conversation between the hippocampus and neocortex, which must be demonstrable by anatomy and physiology.

- The theory requires a topological representation of neocortical modules in the hippocampus.

- Thus, the functional connections between hippocampus and neocortex must preserve topography.

- We predict that the functional activation of regions of the neocortex results in specific topological patterns of activation in the hippocampus.

- Cortical activation by experiential events can induce LTP in corresponding hippocampal loci.

- Similarities between hippocampal and neocortical damage are expected as these two structures are hypothesized to work in a cooperative manner.

- This paper presents a theory of how the hippocampus, LTP, and neocortex may interact in memory functions in the mammalian brain.
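The recognition versus recall distinction above can be sketched as a toy lookup. This is only an illustration of the idea, not the paper's model: the module labels, the `HippocampalIndex` class, and the 0.6 threshold are all hypothetical (the notes only say "some threshold"), and a simple overlap fraction stands in for hippocampal activation.

```python
from dataclasses import dataclass, field


@dataclass
class HippocampalIndex:
    """Toy index: each memory is an ordered tuple of cortical module labels."""

    recall_threshold: float = 0.6  # assumed value, not taken from the paper
    memories: dict = field(default_factory=dict)

    def store(self, name, module_sequence):
        # Only which modules were active, and in what order, is stored.
        self.memories[name] = tuple(module_sequence)

    def probe(self, active_modules):
        """Compare a pattern of active cortical modules against every stored entry."""
        cue = set(active_modules)
        best_name, best_fraction = None, 0.0
        for name, sequence in self.memories.items():
            if not sequence:
                continue
            fraction = len(cue & set(sequence)) / len(set(sequence))
            if fraction > best_fraction:
                best_name, best_fraction = name, fraction
        if best_name is None:
            return ("no_match", None, ())
        stored = self.memories[best_name]
        if best_fraction >= self.recall_threshold:
            # Above threshold: the whole index fires and replays the full sequence.
            return ("recall", best_name, stored)
        # Below threshold: only the currently cued part of the index is active.
        return ("recognition", best_name, tuple(m for m in stored if m in cue))


index = HippocampalIndex()
index.store("beach_trip", ["V1_a", "A1_c", "S1_b", "V4_d", "OFC_e"])
weak_cue = index.probe({"V1_a", "A1_c"})                    # 2 of 5 modules
strong_cue = index.probe({"V1_a", "A1_c", "S1_b", "V4_d"})  # 4 of 5 modules
```

With these made-up values, the weak cue yields recognition of the two cued modules, while the strong cue crosses the threshold and returns the full stored sequence in its original order.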

What Happened to Mirror Neurons?

- Ten years ago, mirror neurons were everywhere.

- Interest in mirror neurons can be measured by the number of academic publications, which peaked in 2013 and has been declining since.

- Mirror neuron basics

- Mirror neurons were discovered by chance in monkeys in 1992 and described as “mirror neurons” four years later.

- Three types

- Strictly congruent: respond during execution and observation of the same action.

- E.g. When the monkey performs a precision grip and when it passively observes a precision grip performed by another agent.

- Broadly congruent: respond during the execution of one action and during the observation of one or more similar, but not identical, actions.

- E.g. Execution of a precision grip and observation of a power grip, precision grip, or related movement.

- Logically related: respond to different actions in observe and execute conditions.

- E.g. Experimenter placing food in front of the monkey and when the monkey grasps the food to eat.

- Strictly and broadly congruent neurons were the primary interest of researchers and are what most researchers mean when they use the term “mirror neuron”.

- These cells are intriguing because, like a mirror, they respond and match observed and executed actions; they code both “my action” and “your action”.

- Monkey mirror neurons are responsive to the observation and execution of hand and mouth actions.

- Mirror neurons were originally found using single-cell recording in the ventral premotor cortex and inferior parietal lobule of the monkey brain.

- As in monkeys, there is consistent evidence that mirror neurons exist in both of these areas in humans.

- Research using single-cell recording suggests that mirror neurons are typically present in adult human brains.

- Four possible functions of mirror neurons

- Action understanding

- Speech perception

- Imitation

- Potential dysfunction in autism

- Action understanding

- One summary analysis concluded that there was broad agreement that mirror neurons play “some role in action process”, but no agreement on what that role might be.

- E.g. It could be low level such as movement execution or high level such as movement intention.

- Evidence suggests that mirror neurons encode concrete representations of observed actions; the lower levels of observed actions.

- However, any involvement doesn’t mean that mirror neurons play a causal role in action processing.

- A recent review concluded that there’s no compelling evidence for the involvement of mirror neurons or any mirror-neuron brain areas in higher level processes such as inferring other people’s intentions from their observed actions.

- Speech perception

- Researchers agree that the motor system has some role in speech perception, but they disagree about the type and magnitude of the system’s role and whether mirror neurons are particularly important.

- While neuroimaging studies implicate the mirror-neuron brain areas in speech perception, evidence from patient studies contradicts these findings.

- E.g. If speech perception requires perceived speech to be matched with motor commands for the production of speech, then one should find speech perception impairments in patients who have impairments in speech production due to brain lesions.

- This prediction is countered by a series of studies that showed intact speech sound discrimination in patients with speech production difficulties.

- There seems to be reasonably strong evidence for the involvement of the motor system in the discrimination of speech in perceptually noisy conditions, but this conclusion isn’t supported by patient data.

- Imitation

- This function received the strongest consensus among researchers due to two main findings.

- The first finding was that repetitive transcranial magnetic stimulation (TMS) of the inferior frontal gyrus, a mirror-neuron brain area, selectively impairs imitative behavior.

- Causal methodologies, such as brain stimulation and patient studies, continue to support the consensus that mirror-neuron brain areas contribute to imitation.

- E.g. Stimulation of the inferior frontal gyrus demonstrated improvement in vocal imitation and naturalistic mimicry.

- Data from fMRI studies also support this function as we find greater responses in mirror-neuron brain areas during imitation compared to other closely matched tasks.

- Autism

- In contrast with imitation, researchers disagree strongly about the link between autism and mirror neurons.

- Broken-mirror theory: that people with autism have abnormal activity in mirror-neuron areas of the brain.

- A systematic review found little evidence for a global dysfunction of the mirror system in autistic people.

- Overall, past research has provided no compelling evidence for the claim that autism is associated with mirror-neuron dysfunction.

- Do mirror neurons get their characteristic visual-motor matching properties from learning?

- One answer is that mirror neurons acquire their matching properties through standard sensorimotor associative learning.

- E.g. Mirror neurons start out as motor neurons but through correlated experiences of seeing and doing the same actions, these motor neurons become strongly connected to visual neurons tuned to similar actions.

- Another possibility is that there’s an innate or genetic predisposition to develop mirror neurons that’s independent of experience.

- More evidence has emerged arguing that learning plays an important role in the development of mirror neurons.

- E.g. Expert pianists and dancers had greater activity in mirror-neuron brain areas compared to observers who lacked such expertise.

- E.g. Wiggett et al. found using fMRI that mirror-neuron brain areas were more strongly activated by observation of hand movement sequences in participants who had simultaneously observed and executed the movements (sensorimotor learning) than in participants who had either observed the movements without performing them (sensory learning) or performed the movements without observing them (motor learning).

- The idea that motor learning alone is enough to change the properties of mirror neurons hasn’t been supported.

- It’s now well established that sensorimotor learning is important for the development of mirror neurons, but we don’t know the details of this learning.

- Two competing accounts of how mirror neurons develop

- Associative account: maintains that the sensorimotor learning that builds mirror neurons is the same kind of learning that produces Pavlovian and instrumental conditioning.

- Canalization account: suggests that monkeys and humans genetically inherit a specific propensity to acquire mirror neurons.

- Evidence is consistent with the associative account, as mirror-neuron learning depends on standard conditioning parameters such as contingency and contiguity.

- Recent studies have found no evidence of imitation in newborn monkeys and similar work in human neonates points in the same direction.

- Evidence doesn’t support the historical claim that newborns are capable of voluntary imitation of a range of actions, and thus doesn’t support the canalization account.

- Reflection

- On action understanding, evidence suggests that mirror-neuron brain areas contribute to low-level processing of observed actions but not directly to high-level action interpretation.

- On speech perception, the role of mirror neurons remains unclear.

- On imitation, we have strong evidence that mirror-neuron brain areas play a causal role in behavioral copying of body movement topography.

- On autism, we have no evidence for the broken-mirror theory.

- These findings are disappointing relative to early newspaper headlines.

- Possible reasons why mirror neurons became so popular

- The deep historical pull of atomism, the idea that complex things are built out of simple units. Mirror neurons, as units that combine sensory and motor properties, offered a simple and tidy explanation for complex phenomena such as political strife and the social world.

- Some descriptions of mirror neurons imply telepathy or reading others’ minds.

- The field studying mirror neurons has produced substantial findings which shouldn’t be dismissed just because mirror neurons are “the most hyped concept in neuroscience”.

- In the author’s view, much of the skepticism about mirror neurons is based on a misunderstanding.

- Humans are better imitators than other animals because sociocultural experience provides humans with matching sensorimotor experience for a broader range of actions.

- We need to think hard about why neurons have mirror properties.

- Mirror neurons contribute to complex control systems rather than dominating such systems or acting alone.

- Rather than being developed through genetics, mirror neurons acquire their properties through sensorimotor learning.

- Mirror neurons shouldn’t be tarnished and they have yet to fulfill their true promise.
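The claim that mirror-neuron learning depends on contingency can be illustrated with the Rescorla-Wagner rule, a standard textbook model of Pavlovian conditioning. Using it here is my assumption, not a method taken from the paper. In this sketch the "cue" stands for seeing an action, the "outcome" for executing it, and a constant background context competes with the cue for associative strength. The trial schedules and learning rate are made up for illustration.

```python
def rescorla_wagner(trials, learning_rate=0.1):
    """Return (context_strength, cue_strength) after training.

    Each trial is a (cue_present, outcome_present) pair of 0/1 values.
    The context is present on every trial; the cue only on some.
    """
    context, cue = 0.0, 0.0
    for cue_present, outcome in trials:
        prediction = context + (cue if cue_present else 0.0)
        error = outcome - prediction
        # Every stimulus present on the trial shares the same prediction error.
        context += learning_rate * error
        if cue_present:
            cue += learning_rate * error
    return context, cue


# Contingent: the action is executed only when it is also seen.
contingent = [(1, 1), (0, 0)] * 1000
# Non-contingent: execution is equally likely with or without seeing the action.
non_contingent = [(1, 1), (0, 1), (1, 0), (0, 0)] * 500

_, cue_contingent = rescorla_wagner(contingent)
_, cue_non_contingent = rescorla_wagner(non_contingent)
```

Under the contingent schedule the cue's strength approaches 1, whereas under the non-contingent schedule it stays near 0 because the context absorbs the association, even though seeing and doing are paired equally often in both schedules.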

Representational drift in primary olfactory cortex

- Perceptual constancy requires the brain to maintain a stable representation of sensory input.

- For olfaction, the primary olfactory cortex (piriform cortex) is thought to determine odour identity and thus has odour representations.

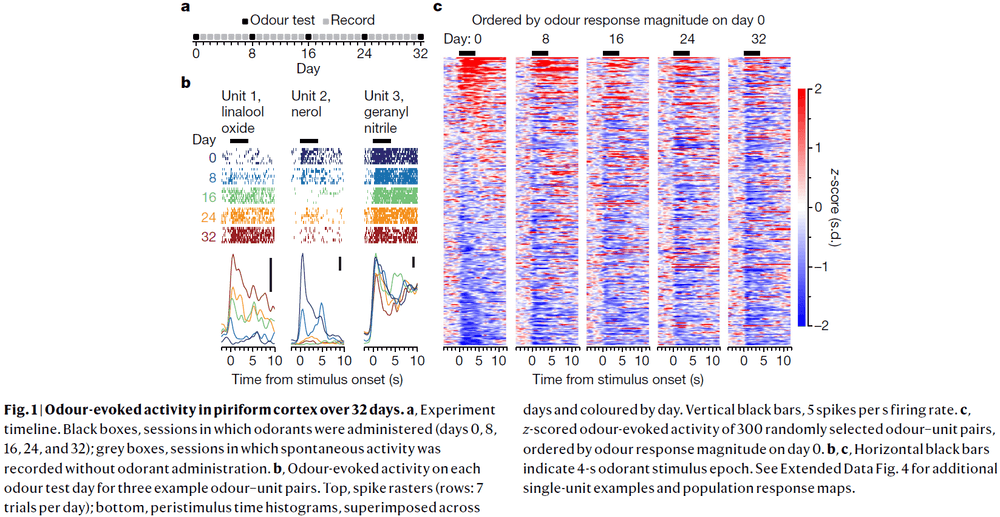

- This paper presents results from electrophysiological recordings of single units in the piriform cortex over weeks to study the stability of odour-evoked responses.

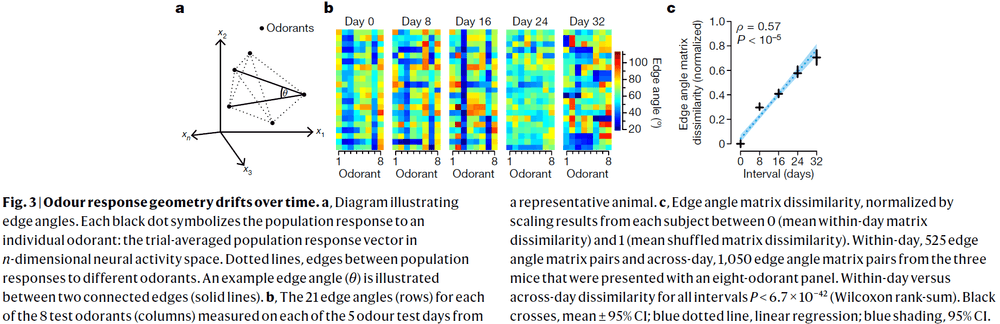

- Although the activity in piriform cortex could be used to discriminate between odorants at any time, odour-evoked responses drifted over days to weeks.

- Fear conditioning didn’t stabilize odour-evoked responses but daily exposure to the same odorant did slow the rate of drift.

- This evidence of continuous drift makes us question the role of piriform cortex in odour perception.

- In primary sensory areas, tuning to basic features such as retinotopy, somatotopy, and tonotopy is stable.

- Responses may vary over days but without perturbation or training, this variability is bounded and differences don’t accumulate over time.

- In the olfactory system, sensory neurons with the same receptors project to the same glomeruli in the olfactory bulb, thus, each odorant evokes a distinct, but stable, pattern of activity in the bulb.

- However, axonal projections from individual glomeruli discard this spatial patterning and diffusely innervate the piriform cortex without apparent structure.

- Since odorant information goes to the piriform cortex, we expect it to be the location in the brain where we determine the identity of the odorant.

- If piriform cortex does determine odorant identity, then perceptual constancy requires that its activity is stable.

- We examined the stability of odour-evoked activity in mouse anterior piriform cortex in response to a set of odorants over 32 days and recorded from 379 single units.

- There were gradual and sudden changes in odour responses across days.

- Single units either gained or lost responsiveness to a given odorant and only rarely showed stable responses to the odorant panel.

- The probability of a single unit maintaining a response to an odour across 32 days was about 6-7%.

- We quantified drift by comparing the single-unit response magnitude for each odorant across days and found that responses become increasingly dissimilar over time.

- Variance in single-unit waveform stability metrics didn’t explain variance in odour response similarity across days.

- Piriform cortex exhibits representational drift as changes in odour-evoked responses accumulate over time and trend towards complete decorrelation within weeks.

- Further observations show that drift in odour-evoked responses isn’t due to a global diminution in responsivity and occurs against the background of stable population response properties.

- Regions downstream of piriform cortex could compensate for drift in the response of neurons over time if the geometry of odour-evoked population activity were conserved.

- The geometry of population activity evoked by relatively dissimilar odorant molecules isn’t conserved but rather changes gradually.

- Next, we investigated whether evoked responses to a behaviorally salient odorant might exhibit greater stability.

- Recordings showed that evoked population responses to conditioned stimuli drifted at a rate comparable to that of neutral odours.

- Thus, fear conditioning doesn’t appear to stabilize odour-evoked responses in piriform cortex as we observed stable behavior in spite of drifting neural activity.

- What’s the purpose of this representational drift?

- One hypothesis is that drift in piriform cortex may reflect a learning system that continuously updates and overwrites itself.

- Two supporting observations

- The rate of drift of the evoked responses to familiar odorants was less than half that of unfamiliar odorants.

- Continual experience with an odorant enhances the stability of piriform cortex’s evoked responses to it.

- It’s unlikely that the changes in odour-evoked responses observed here are caused by a failure to follow the same population of neurons.

- Once daily odour exposure ceased, evoked responses to the familiar and unfamiliar stimuli drifted at similar rates, thus, daily exposure slows representational drift.

- Regions downstream of piriform cortex could compensate for representational drift.

- E.g. Replaying previous odorants to regularly update the downstream region, exploiting some invariant geometry in odour-evoked population activity, or relying on the small subset of single units that did show preserved odour-evoked responses to encode odour identity.

- Although we failed to uncover evidence for an invariant geometry, this doesn’t rule out the possibility of an invariant structure that lies on a nonlinear manifold.

- Piriform cortex may not be the ultimate arbiter of odour identity as other brain regions also receive olfactory sensory information.

- E.g. Anterior olfactory nucleus, olfactory tubercle, cortical amygdala, and lateral entorhinal cortex.

- Three main observations of this paper

- Representational drift

- History-dependent stabilization

- Drift of previously stabilized odour-evoked responses

- These observations are consistent with the model that the piriform cortex is a fast learning system that continually learns and continually overwrites itself.

- Lacking a mechanism to stabilize these memory traces, however, piriform cortex can’t store these memories for the long term.

- In this model, representational drift in piriform cortex is a consequence of continual learning and continual overwriting where learned representations may be transferred to a more stable region downstream for long-term storage.

- The stability of neural activity and representations varies across brain regions.

- E.g. In the hippocampus, representational drift is seen but in primary sensory regions, representational drift is limited.

- What conditions promote drifting versus stable neural activity?

- Maybe anatomical organization sets a bound on changes in stimulus tuning to basic features.

- E.g. In primary visual cortex, the weakening or loss of one synapse onto a visual cortical neuron is likely matched by the strengthening or gain of a synapse tuned to a similar retinotopic region, limiting representational drift.

- However, the organization of piriform cortex differs in that it receives distributed and unstructured inputs from olfactory bulb and there appears to be a lack of topographic organization.
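The drift measure described above, comparing responses across days and watching similarity fall with the interval between them, can be sketched on simulated data. This is a minimal sketch, not the paper's analysis: the first-order autoregressive drift model, the `retention` value, and the seed are my assumptions. The 379 units and 32 days from the notes are used only to size the simulation.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def simulate_drift(n_units=379, n_days=32, retention=0.9, seed=0):
    """Each unit keeps a fraction of yesterday's response and gains fresh noise."""
    rng = random.Random(seed)
    noise_scale = sqrt(1 - retention ** 2)  # keeps response variance constant
    day = [rng.gauss(0, 1) for _ in range(n_units)]
    days = [day]
    for _ in range(n_days - 1):
        day = [retention * r + noise_scale * rng.gauss(0, 1) for r in day]
        days.append(day)
    return days


def similarity_by_lag(days):
    """Mean correlation between population responses separated by each lag in days."""
    result = {}
    for lag in range(1, len(days)):
        pairs = [pearson(days[i], days[i + lag]) for i in range(len(days) - lag)]
        result[lag] = sum(pairs) / len(pairs)
    return result


similarity = similarity_by_lag(simulate_drift())
```

In this toy model the population variance stays constant while `similarity[lag]` falls toward zero as the lag grows, which mirrors the combination of stable population statistics and accumulating decorrelation reported in the notes.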

Humans Can Discriminate More than 1 Trillion Olfactory Stimuli

- Humans can discriminate between 2.3-7.5 million colors and around 340,000 tones, but the number of discriminable olfactory stimuli remains unknown.

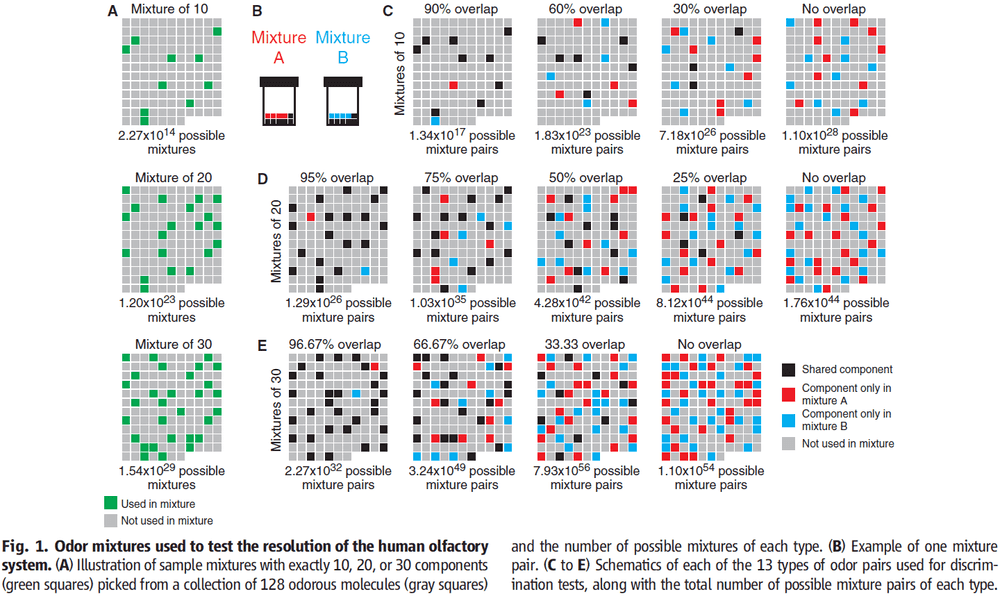

- Using psychophysical testing, we calculated that humans can discriminate at least 1 trillion olfactory stimuli.

- This demonstrates that the human olfactory system, with its hundreds of different olfactory receptors, far outperforms the other senses in the number of physically different stimuli it can discriminate.

- To determine how many stimuli can be discriminated, we first must know the range and resolution of the sensory system.

- E.g. Wavelength and intensity for color, frequency and loudness for tones.

- Then, we can calculate the number of discriminable stimuli from the range and resolution.

- For olfaction, it’s difficult to estimate its range and resolution because the dimensions and physical boundaries of the olfactory stimulus space aren’t known.

- Further, olfactory stimuli are typically mixtures of odor molecules that differ in their components.

- Thus, the strategies used for other sensory modalities can’t be applied to the human olfactory system because of the many dimensions of olfaction.

- The previous number of discriminable odor stimuli, 10000, was based on four dimensions each with a nine-point scale.

- However, we know that this isn’t the upper limit.

- E.g. The scent of a rose is a mixture of 275 components.

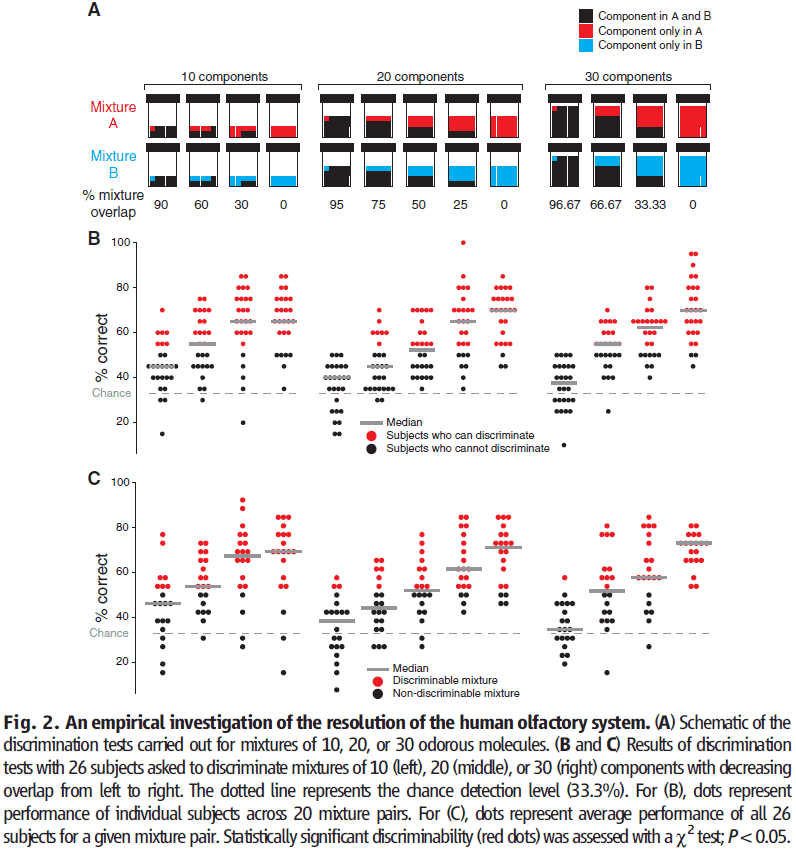

- We only investigated mixtures of 10, 20, or 30 components drawn from a collection of 128 odorous molecules.

- The 128 molecules cover much of the perceptual and physicochemical diversity of odorous molecules.

- To create mixtures A and B, we combined these components together in equal ratios.

- The main difference between two mixtures with the same number of components is the percentage of components they share (their overlap).

- By varying the overlap, we can determine the resolution of the human olfactory system.

- E.g. What percentage must two mixtures differ on average so that they can be discriminated by the average human nose?

- Methods

- Subjects performed forced-choice discrimination between pairs of mixtures.

- In double-blind experiments, subjects were presented with three odor vials, two of which had the same mixture while the third had a different mixture.

- The order of the tests was randomized.

- Total of 260 mixture discrimination tests.

- Had four control discrimination tests to measure general olfactory acuity and to ensure subject compliance.

- Twenty-eight subjects completed the study but two were excluded.

- We found that pairs of mixtures are more difficult to distinguish the more they overlap.

- No subject could discriminate mixture pairs with more than 90% overlap.

- Of the 260 pairs of mixtures that were tested, subjects performed above chance level for 227 pairs.

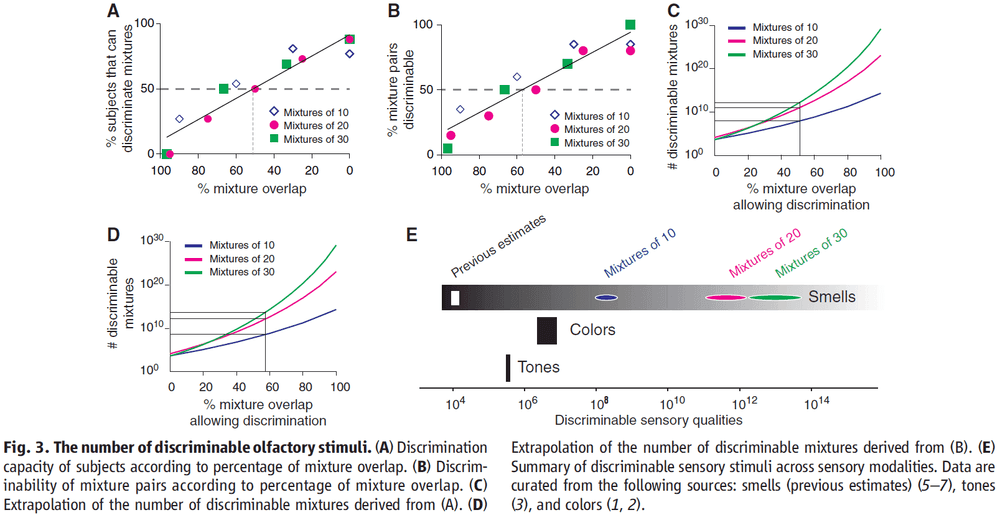

- Resolution of the visual and auditory system is defined as the difference in frequency between two stimuli that’s required for reliable discrimination.

- Resolution of the olfactory system can be defined as the highest percentage overlap in components between two mixtures at which those mixtures can be distinguished.

- We found that the resolution of olfaction isn’t uniform across the olfactory stimulus space.

- E.g. Half of the mixtures with 20 components with 50% overlap could be discriminated, but the other half couldn’t be discriminated.

- This quirk of non-uniform resolution across stimulus space is also found in other sensory systems.

- E.g. In hearing, frequency resolution is better at low frequencies than at high frequencies. In vision, wavelength discrimination is best near 560 nm.

- We can calculate the resolution of olfaction in two ways

- Discrimination capacity of subjects

- Discriminability of stimulus pairs

- For olfaction, we found the resolution or maximum overlap to be between 51-57%.

- The lower limit of how many discriminable mixtures there are, or the inverse of resolution, is more than 1 trillion mixtures of 30 components at a 51% resolution.

- Mixtures that overlap by less than 51% can be discriminated by the majority of subjects, which means that humans can, on average, discriminate more than 1 trillion mixtures of 30 components.

- However, there are large differences between subjects.

- E.g. One subject could discriminate mixtures of 30 components, whereas another subject could only discriminate mixtures.

- Our results show that there are several orders of magnitude more discriminable olfactory stimuli than colors or tones.

- This study focused only on stimulus quality and not stimulus quantity (intensity-based discrimination).

- The actual number of distinguishable olfactory stimuli is likely higher than this estimate since mixtures can be made up of more than 128 components combined in groups of 30 at different ratios.
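To get a feel for how a resolution of about 51% overlap turns into a count in the trillions, here is a rough sphere-packing-style estimate. It is a sketch under my own assumptions, not a reproduction of the paper's calculation: it divides the number of possible 30-component mixtures by the number of mixtures lying within half the minimum discriminable difference of any one mixture. The function names and the choice to halve the distance are hypothetical.

```python
from math import comb

N_MOLECULES = 128  # size of the odorant collection in the notes
MIXTURE_SIZE = 30  # components per mixture


def neighbours_within(radius, mixture_size=MIXTURE_SIZE, pool=N_MOLECULES):
    """Count mixtures reachable from one mixture by swapping at most `radius` components."""
    outside = pool - mixture_size
    return sum(comb(mixture_size, n) * comb(outside, n) for n in range(radius + 1))


def estimate_discriminable(max_overlap, mixture_size=MIXTURE_SIZE, pool=N_MOLECULES):
    """Rough count of mutually discriminable mixtures at a given resolution."""
    # Mixtures must differ in at least this many components to be told apart.
    min_differing = mixture_size * (1 - max_overlap)
    # Assumed convention: treat mixtures within half that distance as one stimulus.
    radius = int(min_differing // 2)
    return comb(pool, mixture_size) // neighbours_within(radius, mixture_size, pool)


total_mixtures = comb(N_MOLECULES, MIXTURE_SIZE)
estimate_at_51 = estimate_discriminable(0.51)
```

Under these assumptions `estimate_at_51` lands in the trillions, the same order of magnitude as the paper's headline figure, though the exact value depends on how the radius is rounded.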

Critical Role of the Hippocampus in Memory for Elapsed Time

- Episodic memories include how long ago those specific events occurred.

- This temporal context is crucial for distinguishing individual episodes.

- The discovery of timing signals in hippocampal neurons, time cells, strongly suggests that the hippocampus is important for tagging the time in episodic memories.

- This paper tests the hypothesis that the hippocampus is critical for keeping track of elapsed time over several minutes.

- We found that the hippocampus was essential for discriminating smaller, but not larger, temporal differences, consistent with a role in temporal pattern separation.

- This suggests that the hippocampus plays a critical role in remembering how long ago events occurred.

- Previous findings suggest that a fundamental role of the hippocampus is to provide an internal representation of elapsed time, which may support our capacity to remember the timing of individual events.

- However, this crucial link has yet to be shown.

- Basic timing ability is either normal or mildly impaired with hippocampal dysfunction.

- Uncovering the role of the hippocampus will require the use of longer (> 40s) timescales.

- We developed a novel behavioral paradigm to test the hypothesis that the hippocampus is critical for keeping track of elapsed time over several minutes.

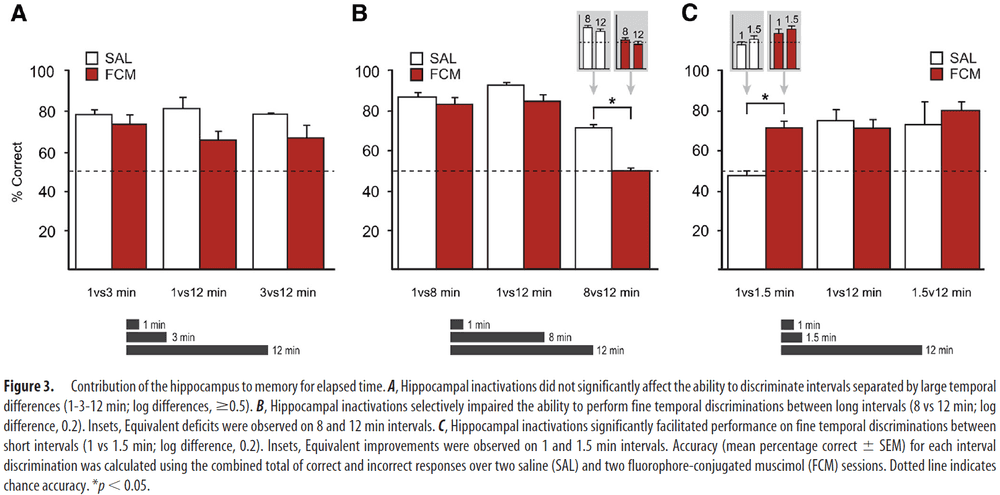

- Methods

- We trained rats to use odor-interval associations to indicate how much time has passed since a specific event.

- E.g. One odor for 1 minute, one for either 1.5/3/8 minutes, and one for 12 minutes.

- To determine the contribution of the hippocampus, we use localized infusions of fluorophore-conjugated muscimol (FCM) to temporarily inactivate the hippocampus.

- No notes on the detailed methods.

- Fluorescence imaging confirmed that a large part of the rostrocaudal extent of the hippocampus was infused with FCM and very little FCM spread to other structures.

- This is evidence that the infusions were localized to the hippocampus, thus inactivating it.

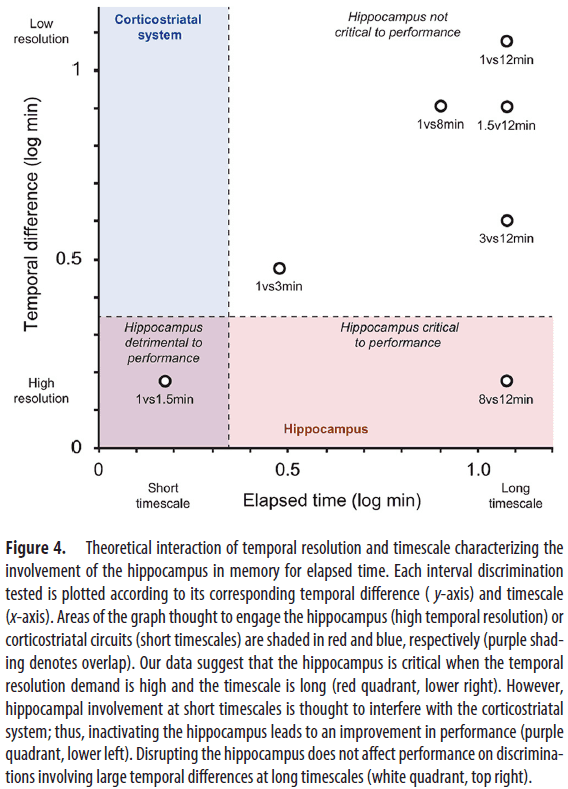

- Interval conditions

- 1-3-12 min: findings show that discriminating intervals separated by large temporal differences doesn’t depend on the hippocampus, as FCM infusions had no significant effect.

- 1-8-12 min: FCM infusions significantly impaired performance for discriminating 8 vs 12 min trials while not impairing other trials, suggesting that the hippocampus is critical for discriminating small, but not large, temporal differences.

- 1-1.5-12 min: FCM infusions significantly improved 1 vs 1.5 min trials, suggesting that hippocampal processing may be detrimental to the performance of fine temporal discriminations at seconds-long timescales.

- While hippocampal inactivations didn’t affect the ability to discriminate intervals separated by large temporal differences, they produced significant effects when temporal resolution demands were increased.

- E.g. 8 vs 12 min difference.

- These findings represent a compelling demonstration that the hippocampus is necessary for remembering the time that has elapsed since an event.

- Interestingly, performance at the same temporal resolution, but on a short time scale (1 vs 1.5 min) was improved with hippocampal inactivations.

- This suggests that hippocampal processing is detrimental to performance at short timescales and may compete with another timing system for this scale.

- Overall, we found that hippocampal processing is beneficial when the temporal resolution demand is high and the timescale is long, but adverse when the temporal resolution is high and the timescale is short.

- Notably, little is known about the neurobiology of timing intervals in the range of minutes.

- We show that the hippocampus plays a critical role in remembering how long ago specific events occurred.

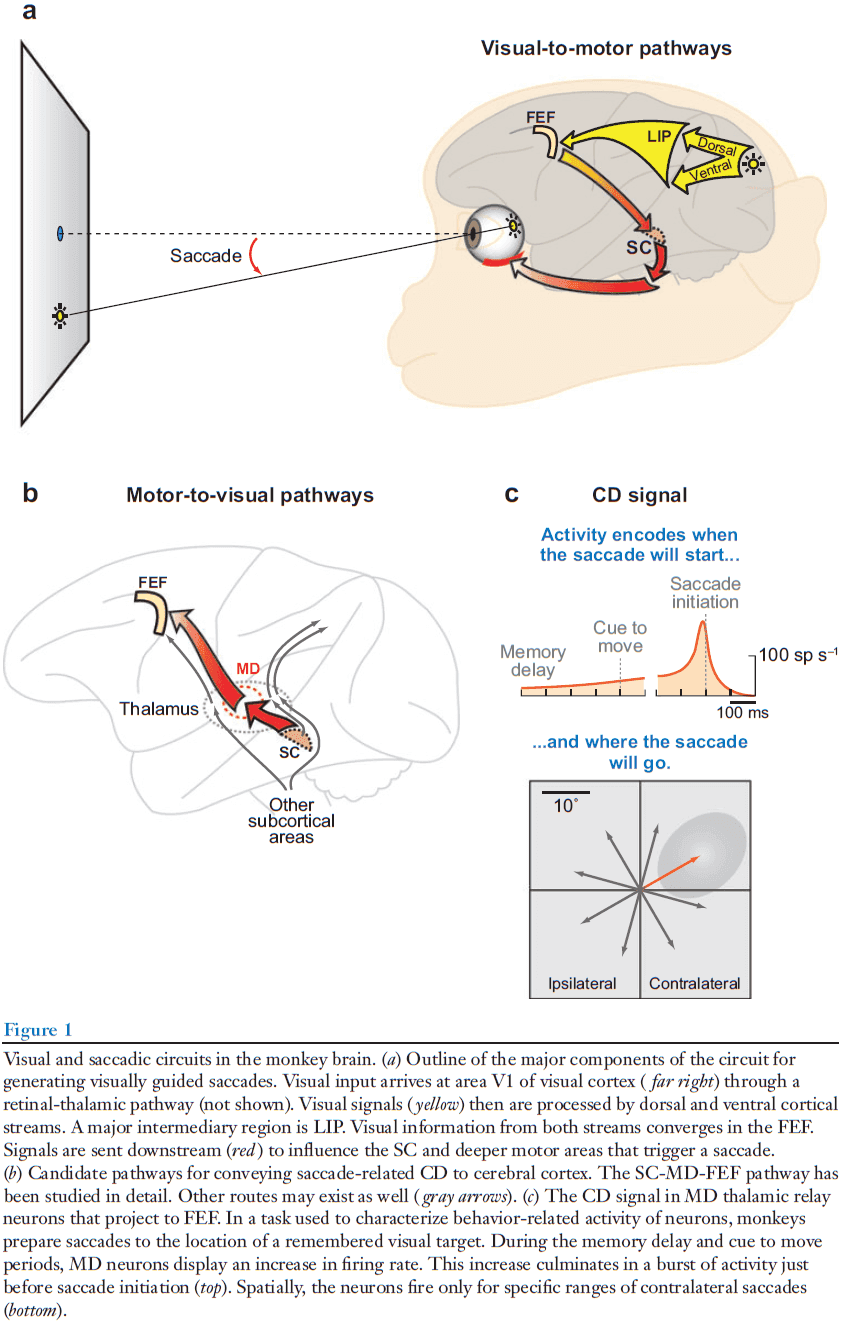

Brain Circuits for the Internal Monitoring of Movements

- Each movement we make activates our own sensory receptors. This is a problem because the brain can’t tell if the sensation is due to an external actor or due to our own movement.

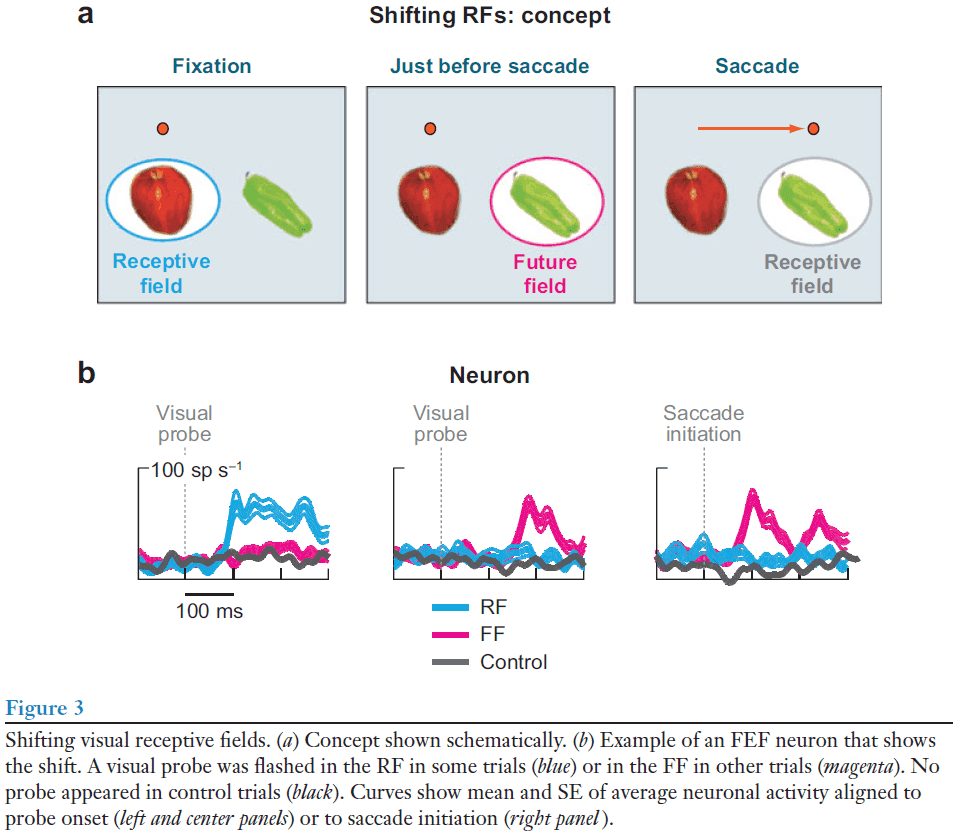

- In this paper, we consider circuits for solving this problem in the primate brain. Circuits that convey a copy of each motor command, known as a corollary discharge (CD), to sensory brain regions solve this problem.

- E.g. In the visual system, CD signals help to produce a stable visual percept from the jumpy imaging resulting from our rapid eye movements.

- A possible pathway for providing CD for vision is from the superior colliculus to the frontal cortex.

- This circuit warns of impending eye movements, and the warning is used for planning subsequent movements and analyzing the visual world.

- A major function of nervous systems is to process sensory input to detect changes in the environment and act on those changes.

- E.g. A cat pouncing on a mouse requires it to ignore the sensation of grass brushing against its legs and to move its head and eyes to track the target.

- Moving any sensory organ to improve its detection ability, however, momentarily disrupts the very sense it’s intended to help.

- Nervous systems require a solution to these self-generated movement disruptions in sensation.

- One major mechanism is to provide warning signals to sensory systems from within the movement-generating systems themselves.

- The importance of motor-to-sensory feedback is clear if we imagine that it doesn’t exist.

- E.g. Moving a security camera causes the image to jerk, blurring the image during the time of movement.

- The fundamental difference between the security camera and the eye is internal feedback of movement information.

- This internal feedback, this warning signal, is the corollary discharge.

- Corollary discharge (CD): a copy of a motor command sent to brain areas for interpreting sensory inflow (also called efference copy).

- Efference: motor outflow that causes movements.

- Review of saccades (rapid eye movements).

- The saccadic network provides an attractive model system for studying the sources of CD and the pathways that distribute CD to sensory areas.

- Retina → V1 → Lateral intraparietal (LIP) → Frontal eye fields (FEF) → Superior colliculus (SC) → Midbrain and pontine reticular formation → Oculomotor nuclei that innervate each of the six eye muscles.

- The parietal and frontal areas (LIP and FEF) contribute to the generation of voluntary saccades.

- What does a saccadic CD circuit look like?

- In theory, a CD pathway should run counter to the normal downward flow of sensorimotor processing.

- One such pathway runs from the SC → Medial dorsal nucleus (MD) of the thalamus → FEF.

- It’s challenging to establish that a neuron carries CD as opposed to a movement command because by definition, both the command and CD should look the same.

- Four criteria for identifying CD

- Must come from a brain region known to be involved in movement generation, but it must travel away from the muscles.

- The signal should occur just before the movement and represent its spatial and temporal parameters.

- The neurons shouldn’t contribute to producing the movement.

- Perturbing the CD pathway should disrupt performance on a task that depends on CD.

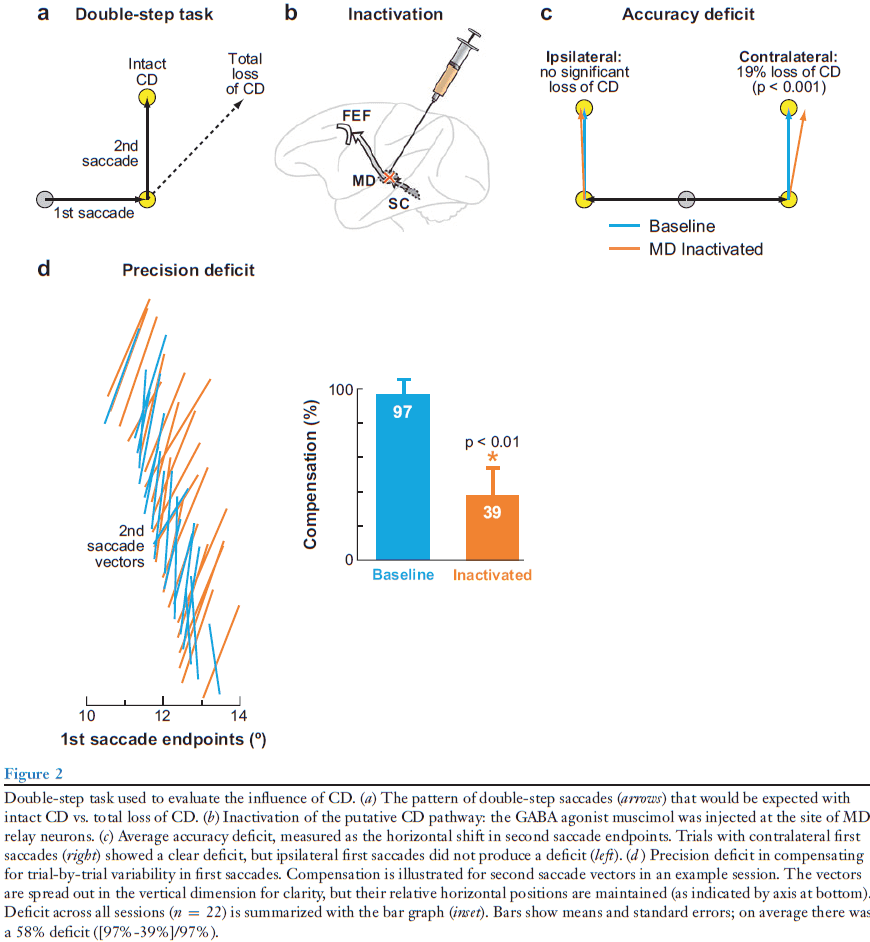

- Double-step task: when two targets are flashed sequentially and the subject has to make a saccade to the first target and then to the second. The initial fixation point and the two flashed targets are gone before the saccades begin.

- In the double-step task, the observable event is the second saccade and the parameter of interest is its vector.

- E.g. If the vector is correct, this implies perfect CD. If the vector is incorrect, this implies an inaccurate CD.

- To test the SC-MD-FEF CD pathway, we reversibly inactivate the MD relay node, thereby disrupting the pathway.

- If the inactivation eliminates CD, the monkey should make the correct first saccade but the incorrect second saccade.

- We found that inactivation of MD did cause the second saccade end point to shift as if CD of the first saccade was impaired.

- The impairment was significant but smaller than expected, suggesting that we didn’t silence the pathway completely or that other CD pathways exist.

- Interestingly, the deficit was limited to trials where the first saccades were made into the contralateral visual field. So, the deficit matched the direction of saccades represented in the affected MD nucleus.
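The logic of the double-step task can be sketched with simple vector arithmetic. This is a minimal illustration, not the authors' analysis: the coordinates, the function name, and the `cd_gain` parameter (1.0 for intact CD, 0.0 for no CD) are assumptions made for the example.

```python
import numpy as np

def second_saccade_vector(fixation, first_endpoint, target2, cd_gain=1.0):
    """Vector of the second saccade, planned from an internal estimate of eye position.

    cd_gain is a hypothetical parameter: the fraction of the first saccade
    that the corollary discharge reports to the planning system.
    """
    fixation = np.asarray(fixation, dtype=float)
    first_saccade = np.asarray(first_endpoint, dtype=float) - fixation
    # Internal estimate of where the eye is after the first saccade.
    estimated_eye = fixation + cd_gain * first_saccade
    # The targets are gone, so the second saccade is planned from memory.
    return np.asarray(target2, dtype=float) - estimated_eye

fixation = (0.0, 0.0)
first_endpoint = (10.0, 0.0)   # eye lands on the first target (degrees)
target2 = (10.0, 10.0)         # remembered location of the second target

intact = second_saccade_vector(fixation, first_endpoint, target2, cd_gain=1.0)
impaired = second_saccade_vector(fixation, first_endpoint, target2, cd_gain=0.0)

# Where the eye actually ends up after executing each planned vector.
intact_landing = np.asarray(first_endpoint) + intact
impaired_landing = np.asarray(first_endpoint) + impaired

print("intact CD:  ", intact, "lands at", intact_landing)
print("impaired CD:", impaired, "lands at", impaired_landing)
```

With intact CD the second saccade goes straight up and lands on the second target. With no CD it is planned as if the eye were still at fixation, so the end point is shifted in the direction of the first saccade. A `cd_gain` between 0 and 1 gives a partial shift, which is in the spirit of the smaller-than-expected deficit described above.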

- Successive saccades depend on CD.

- In its normal state, the brain precisely monitors even small fluctuations in saccade amplitude and adjusts subsequent saccades accordingly.

- If the CD were changed by inactivation, the compensation should be altered and this is what we find.

- E.g. The second saccades during inactivation failed to rotate as a function of where the first saccades ended.

- These inactivation experiments suggest that activity in the SC-MD-FEF pathway not only correlates with an expected CD signal, but actually functions as a CD signal.

- Thus, this pathway satisfies the four criteria for identifying CD.

- E.g. The signals originate from a known saccade-related region, they encode the timing and spatial parameters of upcoming saccades, their removal doesn’t affect the generation of saccades, and their removal does disrupt saccades that depend on CD.

- Review of receptive field (RF).

- The RF of visual neurons shifts to a location dependent on the saccade that’s about to occur, and this is only possible if the neuron receives information about the imminent saccade.

- Such shifting RFs (remapped RFs) have been studied in many regions including the LIP, extrastriate visual areas, and the SC.

- No notes on the second experiment.

- Perturbing the CD allows us to move from correlation to causation.

- Three ways CD can influence neural activity

- Suppression

- Cancellation

- Forward models

- Various studies in the primate visual-saccadic system support that the CD influences neural activity using suppression and forward models, with little evidence supporting the cancellation mechanism for saccades.

- No notes on CD and human disease.

- A CD signal represents the state of a movement structure such as the SC.

- The information of the CD signal is limited by the signals available to the structure, and the potential targets of the CD signal are constrained by the anatomical reach of the structure’s efferent pathways.

Primary sensory cortices contain distinguishable spatial patterns of activity for each sense

- Are the primary sensory cortices unisensory or multisensory?

- We used multivariate pattern analysis of fMRI data in humans to show that simple and isolated stimuli of one sense elicit distinguishable spatial patterns of neuronal responses, not only in their corresponding primary sensory cortex but also in other primary sensory cortices.

- This suggests that primary sensory cortices, traditionally thought of as unisensory, contain unique signatures of other senses.

- Traditional view of sensory processing

- Information from different senses is initially processed in anatomically distinct, primary unisensory areas.

- This information then converges onto higher-order multisensory areas.

- Evidence

- Lesions limited to primary sensory cortices (PSCs) clearly show unimodal sensory deficits.

- Electrophysiological and functional neuroimaging studies show that sensory stimuli elicit activity in the primary sensory areas matching the sensory modality of the stimulus.

- Tracing studies find few, if any, interconnections between primary somatosensory, auditory, and visual cortices.

- Alternative view of sensory processing

- That cortical areas believed to be strictly unisensory are instead multisensory.

- Evidence

- Responses in unisensory cortices by corresponding sensory input can be modulated by concurrently applied non-corresponding sensory input.

- E.g. In macaque monkeys, tactile stimuli that coincide with auditory stimuli enhance the activity in or near the primary auditory cortex.

- Studies find that activity in PSCs can be elicited by stimuli belonging to a non-corresponding sensory modality, but only when these stimuli convey information related to the modality of that PSC.

- E.g. Visual stimuli conveying information related to audition activate the auditory cortex.

- However, these pieces of evidence don’t unequivocally prove that PSCs are multisensory.

- E.g. The observed multisensory effect may only modulate the response to the primary stimuli, or could result from sensory imagery triggered by the primary stimuli.

- Two unresolved questions

- Can PSCs respond to stimuli of other senses when they’re not temporally coincident with stimuli of the principal modality?

- Are non-principal responses elicited in PSCs unique for each modality?

- E.g. Can V1 show different responses to non-corresponding auditory and tactile stimuli?

- We used multivariate pattern analysis of fMRI signals in the human primary somatosensory (S1), auditory (A1), and visual cortex (V1) and examined the spatial patterns of the neural responses elicited by the simple and isolated tactile, painful, auditory, and visual stimuli, and by tactile/visual stimuli delivered to two different body/visual field locations respectively.

- We found that in any PSC, the spatial pattern of the fMRI responses elicited by each sensory stimulus of another modality is sufficiently distinct to allow for reliable classification of the stimulus modality.

- E.g. Discriminating between tactile and auditory stimuli using fMRI responses from V1.

- We also found that two stimuli of the same modality presented at different locations also elicit distinguishable patterns of fMRI responses in non-corresponding PSCs.

- E.g. Discrimination between tactile stimuli to two fingers using the fMRI responses sampled within V1.

- These findings indicate that transient and isolated stimuli of one sense elicit distinguishable spatial patterns of neural activity not only in their corresponding PSC but also in non-corresponding PSCs.

- PSCs encode the modality of non-corresponding stimuli

- We used simple and isolated stimuli to avoid multisensory integration and sensory imagery in PSCs.

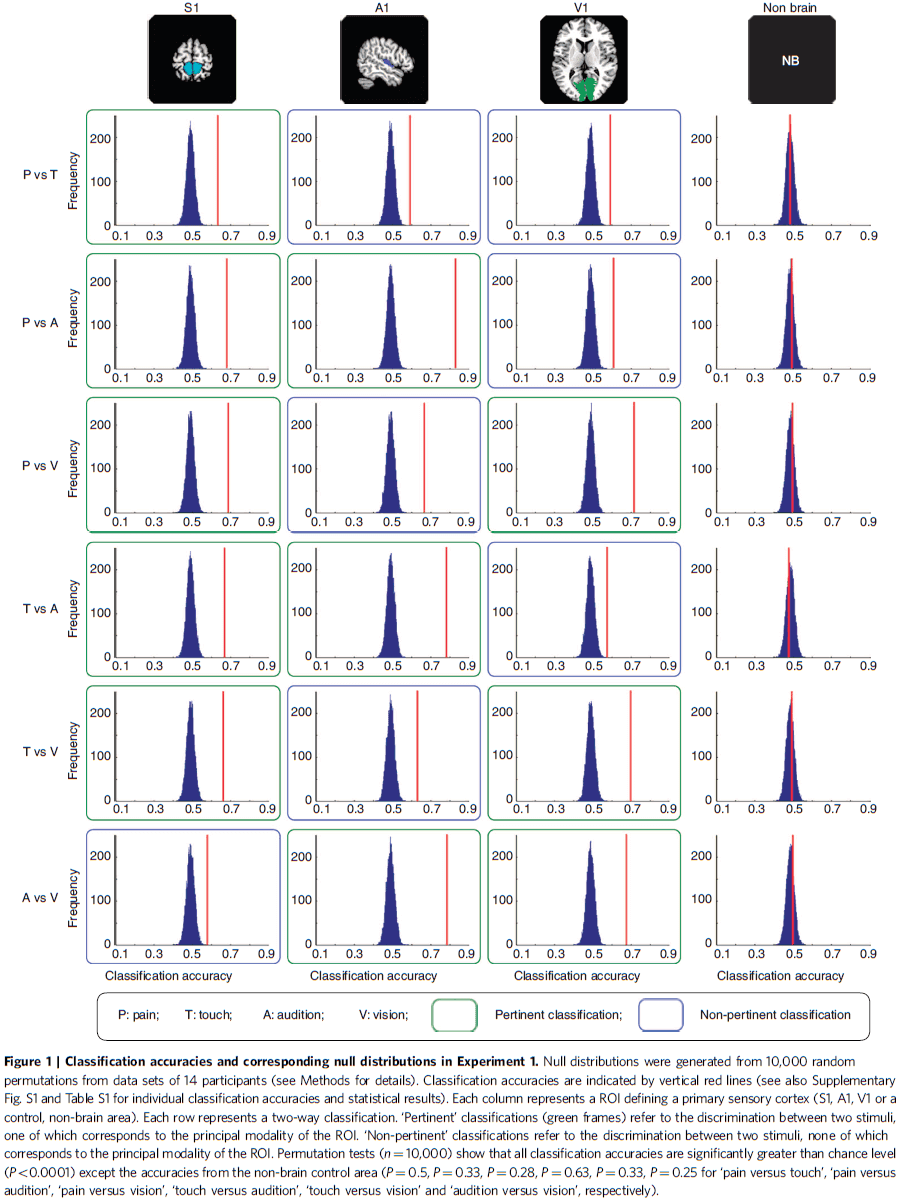

- In each of the three PSCs (S1, A1, and V1), six two-way classifications were performed.

- P-value was determined by comparing the group-average accuracy with its corresponding null distribution generated by 10,000 random permutations.

- This shows that the accuracy of each classification was significantly higher than chance level regardless of whether one of the two sensory modalities involved corresponded to the modality of the given PSC.

- Thus, we can accurately classify the normalized fMRI responses in each PSC by each type of sensory stimulus.

- E.g. We can classify between tactile and auditory stimuli using the fMRI response in V1.

- Regardless of which two sensory modalities were classified, the contributing voxels in a given ROI always responded more strongly to their corresponding sensory modality than to non-corresponding modalities.

- Within each PSC, the information of each stimulus modality is distinguishable at a spatially distributed pattern level.
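The classification and permutation procedure can be sketched as follows. This is a minimal illustration on synthetic data, not the authors' pipeline: the nearest-centroid classifier, the leave-one-out scheme, the data sizes, and all function names are assumptions, and the sketch tests a single dataset rather than a group-average accuracy. It uses 200 permutations to keep the run short, whereas the notes mention 10,000. The six two-way classifications presumably correspond to all pairs of the four stimulus types.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)

# Four stimulus types give six pairwise (two-way) classifications.
stimuli = ["tactile", "painful", "auditory", "visual"]
pairs = list(itertools.combinations(stimuli, 2))

def loo_accuracy(patterns, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier (labels are 0 or 1)."""
    n = len(labels)
    correct = 0
    for i in range(n):
        train = np.arange(n) != i  # hold out trial i
        centroids = [patterns[train & (labels == c)].mean(axis=0) for c in (0, 1)]
        distances = [np.linalg.norm(patterns[i] - c) for c in centroids]
        correct += int(np.argmin(distances) == labels[i])
    return correct / n

def permutation_test(patterns, labels, n_permutations=200):
    """Compare the observed accuracy with a null distribution from shuffled labels."""
    observed = loo_accuracy(patterns, labels)
    null = np.array([
        loo_accuracy(patterns, rng.permutation(labels))
        for _ in range(n_permutations)
    ])
    # Add-one correction so the p-value is never exactly zero.
    p_value = (np.sum(null >= observed) + 1) / (n_permutations + 1)
    return observed, p_value

# Synthetic "voxel" data for one pair: each class has a weak spatial pattern plus noise.
n_trials, n_voxels = 20, 50
class_patterns = 0.5 * rng.standard_normal((2, n_voxels))
labels = np.repeat([0, 1], n_trials)
patterns = class_patterns[labels] + rng.standard_normal((2 * n_trials, n_voxels))

accuracy, p_value = permutation_test(patterns, labels)
print(pairs[0], f"accuracy={accuracy:.2f}", f"p={p_value:.3f}")
```

Shuffling the labels breaks any real link between pattern and stimulus, so the shuffled accuracies estimate what chance performance looks like for this particular dataset and classifier.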

- Modality coding in PSCs isn’t determined by edge voxels

- Although our results don’t allow us to conclude that the core of the PSCs has a definitive role in discriminating different sensory modalities, they do rule out the possibility that classification was driven only by voxels in the peripheral part of the ROIs, which might sample neural activity from neighboring higher-order areas.

- Modality coding is observed in some other brain regions

- If we don’t focus on ROIs and instead run the same analysis on the whole brain, we find that only a subset of brain regions contains information allowing discrimination of the sensory modality of the stimulus.

- PSCs also encode the location of non-corresponding stimuli

- In the first experiment, the stimuli of each sensory modality were identical in their location, intensity, and temporal profile.

- Thus, it’s unclear whether different stimulus features besides modality can also be discriminated in non-corresponding PSCs.

- To examine this, we manipulated the location of non-corresponding stimuli to see if PSC activity allowed for discriminating between two different stimuli of the same modality, and between two simultaneous stimuli of the same modality.

- E.g. Somatosensory stimuli to either the index finger or little finger or both.

- Results indicate that responses to non-corresponding stimuli in PSCs allow discriminating not only the modality of the stimuli, but also the spatial location of stimuli of the same modality.

- In contrast to the traditional voxel-by-voxel univariate analysis of sensory processing, our results show that transient and isolated stimuli of a sensory modality elicit distinguishable spatial patterns of neural activity not only in their primary sensory areas, but also in non-corresponding primary sensory areas.

- We also found that when two stimuli of the same modality are presented in different spatial locations, these stimuli elicit distinguishable patterns of activity in non-corresponding PSCs.

- We show that simple and isolated unisensory stimuli conveying information related to another sense can elicit activity within the PSC corresponding to that other sense.

- E.g. Silent video clips of a barking dog elicit activity in A1, as does watching silent lip movements (lipreading).

- Summary of results

- Spatially distinct responses are elicited in PSCs by stimuli of different non-corresponding sensory modalities.

- Spatially distinct responses are elicited in PSCs by stimuli of the same non-corresponding modality presented at different spatial locations.

- That these patterns of activation are spatially distinct raises the possibility that sensory inputs belonging to different modalities activate distinct populations of neurons in each unisensory area.

- Possible reasons for widespread distribution of sensory information in multiple PSCs

- Responses could underlie processes involved in multisensory integration.

- Responses could reflect a reduction or active inhibition of tonically active neurons, which may enhance the contrast between neural activities in principal and non-principal sensory areas.

- E.g. Salient auditory stimuli demote the processing of potentially distracting visual stimuli within the visual cortex.

- Our findings provide a compelling answer to the ongoing debate about the extent of the multisensory nature of the neocortex, demonstrating that even PSCs are essentially multisensory in nature.

- Our results emphasize that PSCs don’t solely respond to sensory input of their own modality.

An Integrative Theory of Prefrontal Cortex Function

- This paper proposes that cognitive control comes from the active maintenance of activity patterns in the prefrontal cortex that represent goals and the means to achieve them.

- The prefrontal cortex (PFC) biases signals in other brain structures to guide the flow of activity along neural pathways that establish the proper mappings between inputs, internal states, and outputs needed to perform a task.

- We review neurophysiological, neurobiological, neuroimaging, and computational studies that support this theory and discuss its implications.

- One of the fundamental mysteries of neuroscience is how coordinated, purposeful behavior emerges from the sparse activity of billions of neurons in the brain.

- Simple behaviors can rely on simple interactions between inputs and outputs.

- E.g. Reflex behavior is explained by simple neural circuits.

- Organisms with fewer neurons can perform simple behaviors, while organisms with more neurons can have behavior that’s more flexible at a cost.

- The cost is choice: selecting one action from a large repertoire of actions.

- The rich information we have about the world and the great number of options for behavior require appropriate attentional, decision-making, and coordinative functions.

- To deal with the overwhelming number of possibilities, we’ve evolved mechanisms that coordinate lower-level sensory and motor processes along a common theme: the internal goal.

- The ability for cognitive control is spread out over much of the brain, but we suspect that the PFC is particularly important.

- The PFC is most elaborated in primates, animals known for their diverse and flexible behavioral repertoire.

- The PFC is well positioned to coordinate a wide range of neural processes since it’s a collection of interconnected neocortical areas that sends and receives projections from virtually all cortical sensory, motor, and subcortical systems.

- However, an understanding of the mechanisms behind how the PFC executes control has remained elusive.

- This paper aims to provide a theory of PFC function that precisely defines its role in cognitive control.

- Role of PFC in top-down control of behavior

- PFC isn’t critical for simple automatic behaviors (bottom-up).

- E.g. Balance or unexpected sounds.

- These hardwired pathways are important because they allow highly familiar behaviors to be executed quickly and automatically.

- However, the tradeoff is that these behaviors are inflexible and are stereotyped reactions caused by just the right stimulus.

- E.g. Hardwired behaviors don’t generalize well to novel situations and take extensive time and experience to develop.

- In contrast to these bottom-up pathways, the PFC is important when top-down processing is needed; when behavior must be guided by internal states or intentions.

- E.g. When we need to use the “rules of the game”: internal representations of goals and the means to achieve them.

- These pathways are weaker and change rapidly.

- Evidence

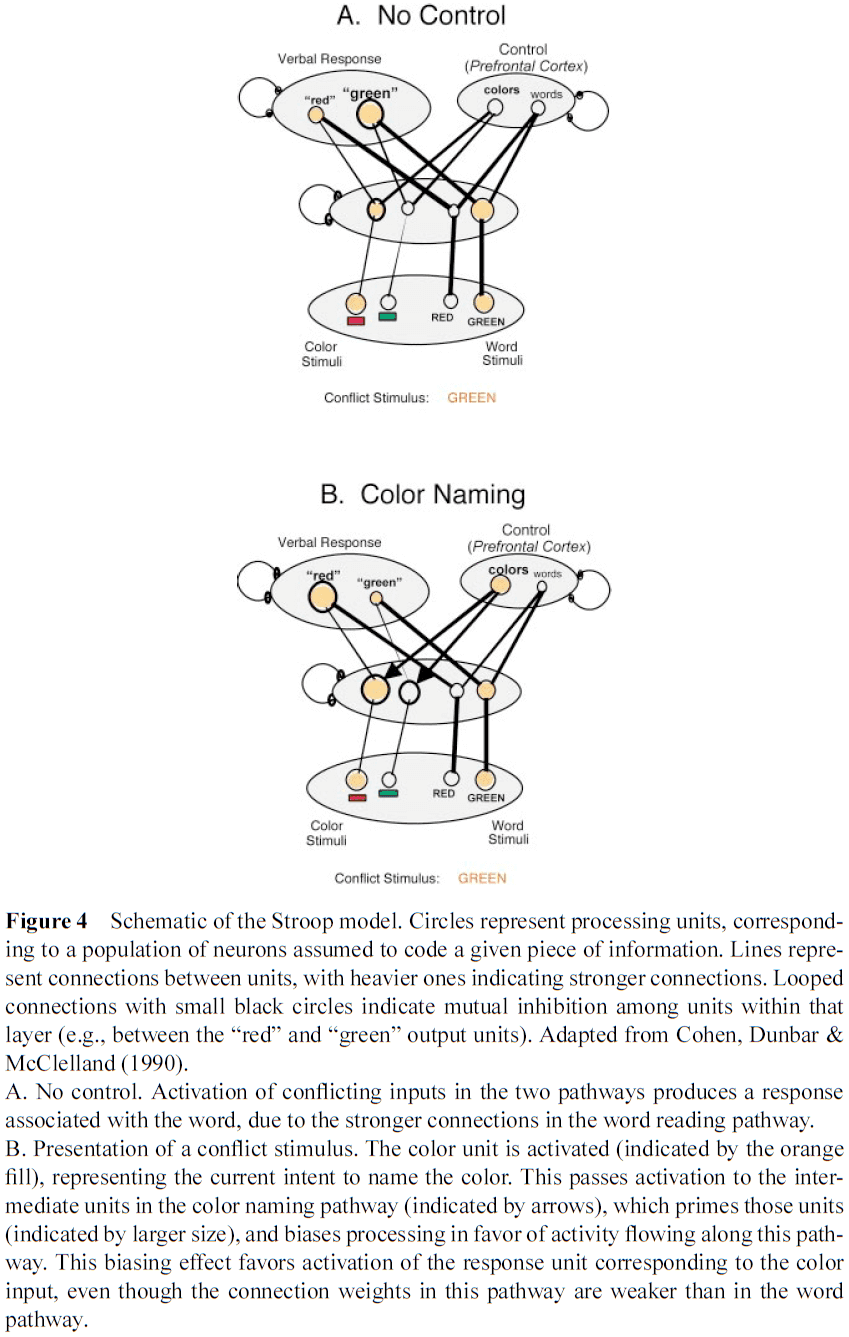

- Stroop task and Wisconsin card sort task (WCST).

- E.g. Reading the word “GREEN” displayed in red.

- This conflict between the read word (“green”) and the seen color (“red”) illustrates one of the most fundamental aspects of cognitive control and goal-directed behavior: the ability to select a weaker, task-relevant response in the face of competition from a stronger, but task-irrelevant one.

- Patients with frontal impairments have difficulty with the Stroop task, especially when the instructions change frequently, suggesting that they have difficulty sticking with the goal of the task or its rules in the face of strong competition.

- We observe similar findings in the WCST.

- E.g. Subjects have to sort cards according to shape, color, or number of symbols. The correct action is controlled by whichever sorting rule is in effect.

- Humans and monkeys with PFC damage show stereotyped deficits in the WCST; they can learn the initial mapping easily but are unable to adapt their behavior when the rules change.

- The Stroop task and WCST are described as using either selective attention, behavioral inhibition, working memory, or rule-based or goal-directed behavior.

- We argue that the PFC configures processing in other parts of the brain according to current task demands.

- These top-down signals favor weak, but task-relevant, stimulus-response mappings when they compete with more habitual, stronger mappings, especially when flexibility is needed.

- We build on the fundamental principle that processing in the brain is competitive: that different pathways, carrying different sources of information, compete for expression in behavior, and that the winners are those with the strongest sources of support.

- Voluntary shifts in attention result from top-down signals that bias the competition among neurons representing the scene, increasing the activity of neurons representing the to-be-attended features and, through mutual inhibition, suppressing the activity of neurons processing other features.

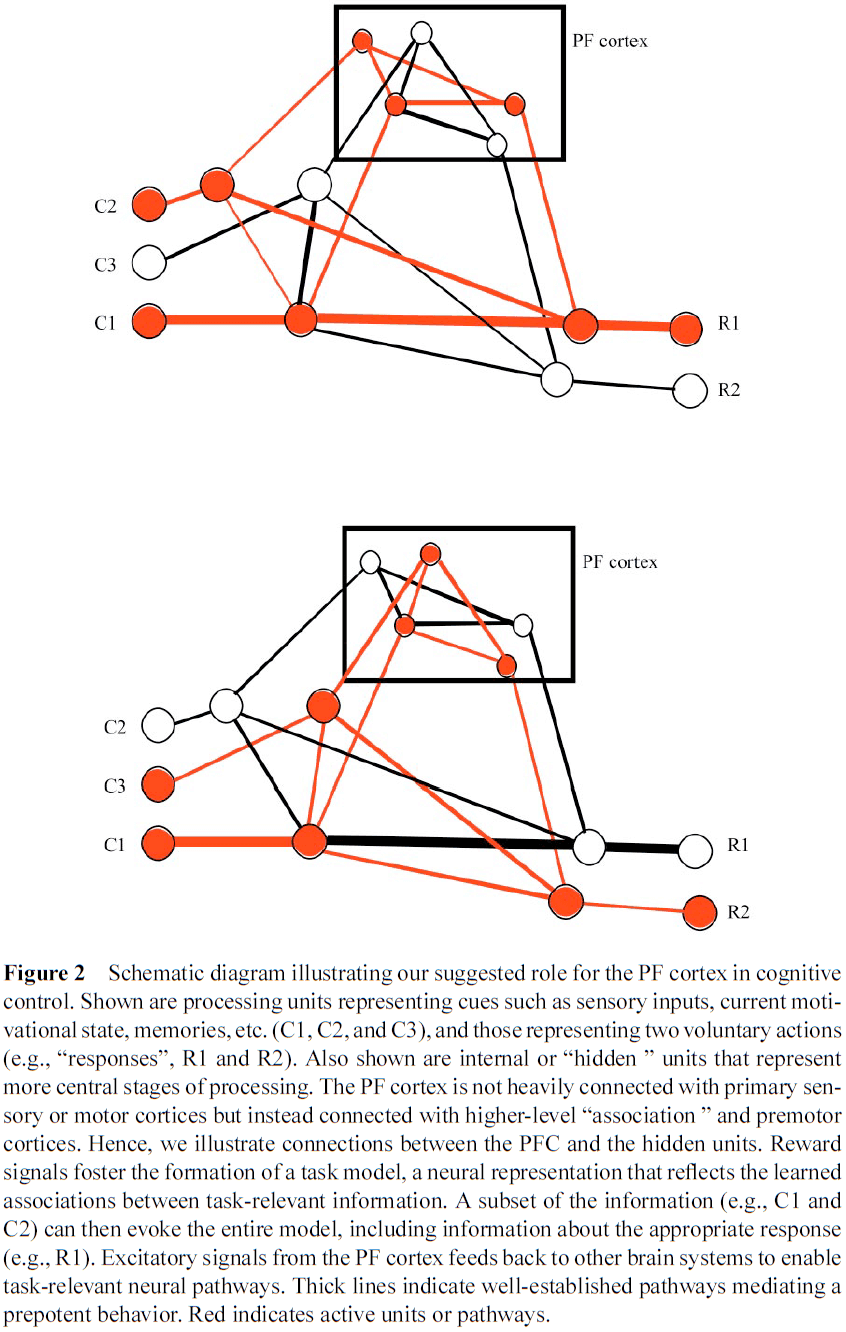

- Overview of the theory

- We assume that the PFC actively maintains patterns of activity that represent goals and the means to achieve them.

- The PFC provides bias signals to other brain regions, affecting all sensory modalities and the systems responsible for response execution, memory retrieval, and emotional evaluation.

- The sum effect of these bias signals is to guide the flow of neural activity along pathways that establish the proper mapping between inputs, internal states, and outputs needed to perform a task.

- The constellation of PFC biases can be viewed as the neural implementation of attentional templates, rules, or goals, depending on the bias signal target.

- How does the PFC mediate the correct behavior?

- We assume that stimuli activate internal representations within the PFC that can select the appropriate action.

- Repeated selection can strengthen the pathway between stimulus and response and allow for it to become independent of the PFC.

- As this happens, the behavior becomes more automatic and relies less on the PFC.

- How does the PFC develop the representations needed to produce the correct contextual response?

- In new situations, you may try various behaviors to achieve a goal.

- E.g. Trying actions that worked in similar circumstances, trying random actions, or trying actions suggested by others.

- We assume that each attempt between sensation and response is associated with some pattern of activity within the PFC.

- When an action is successful, reinforcement signals augment the corresponding pattern of activity by strengthening connections between the PFC neurons activated by that action.

- Reinforcement also strengthens connections downstream of the behavior, establishing an association between these circumstances and the PFC pattern that supports the correct behavior.

- Many details need to be added before we fully understand the complexity of cognitive control, but this general theory can explain many of the assumed functions of the PFC.

- Without the PFC, the most frequently used (and thus best established) neural pathways would predominate or, where those don’t exist, behavior would be haphazard and random.

- Such impulsive, inappropriate, or disorganized behavior is a hallmark of PFC dysfunction in humans.

- Minimal requirements for a mechanism of top-down control

- We can think of the PFC’s function as an “active memory” for control.

- Thus, the PFC must maintain its activity against distractions until a goal is achieved, yet must also be flexible enough to update its representations when needed.

- It must also have the appropriate representations that can select the neural pathways needed for the task.

- E.g. The PFC must have access to and must influence a wide range of information in other brain regions; the PFC must have a high capacity for multimodality, integration, and plasticity.

- It must be possible to explain all of these properties without invoking some other mechanism of control, lest the theory become subject to problems of the hidden “homunculus”.

- This paper brings together various observations and arguments to show that a reasonably coherent, and mechanistically explicit, theory of PFC function is beginning to emerge.

- Properties of the PFC

- Convergence of diverse information

- A system for cognitive control must have information about both the internal state of the system and the external state of the world.

- The PFC is anatomically well suited for this as it has interconnections with virtually all sensory and motor systems, and with limbic and midbrain structures involved in affect, memory, and reward.

- E.g. The lateral and dorsomedial PFC are more closely associated with sensory neocortex than the ventromedial PFC is.

- The PFC also has many intraconnections, which makes it suitable for coordinating and integrating these diverse signals.

- Many PFC areas receive converging inputs from at least two sensory modalities and in all cases, these inputs are from secondary/association and not from primary sensory cortex.

- Similarly, there are no direct connections between the PFC and primary motor cortex, but there are extensive connections with premotor areas that do project to primary motor cortex.

- Also important are the interconnections between the PFC and basal ganglia.

- The orbital and medial PFC are closely associated with medial temporal limbic structures critical for long-term memory and the processing of internal states.

- E.g. Direct and indirect connections with the hippocampus, amygdala, and hypothalamus.

- Most PFC regions are interconnected with most other PFC regions, providing pathways for information from diverse brain systems to interact.

- Convergence and plasticity

- Evidence suggests that lateral PFC neurons can convey the degree of association between a cue and a response.

- E.g. One neuron could show strong activation to one of two auditory cues, but only when the cue signaled a reward.

- More complicated behaviors between cues and responses/rewards may depend on more complex mappings.

- Neural responses to a given cue often depend on which rule is active in the task.

- Also, the baseline activity of many neurons varies with the rule.

- Lesion studies of the PFC also suggest that it’s used for learning rules.

- E.g. Following PFC damage, patients couldn’t learn arbitrary associations between visual patterns and hand gestures.

- Passingham argues that most, if not all, tasks that are disrupted following PFC damage depend on acquiring conditional associations (if-then rules).

- In sum, these results indicate that PFC neural activity represents the rules, or mappings, required to perform a particular task.

- Feedback to other brain areas

- Our theory requires the feedback signals from the PFC to reach widespread targets throughout the brain and we find that the PFC has the neural machinery to do so.

- E.g. Deactivating the lateral PFC attenuates the activity of visual cortical neurons to a behaviorally relevant cue.

- Neurons were found in the PFC that exhibited sustained sample-specific activity that survived the presentation of distractors, which wasn’t found for IT cortex.

- Active maintenance

- If the PFC represents the rules of a task in its pattern of neural activity, it must maintain this activity for as long as the rule is required.

- This capacity to support sustained activity in the face of interference is one of the distinguishing characteristics of the PFC.

- Many studies demonstrate that neurons within the PFC remain active during the delay between a transiently presented cue and the later execution of a follow-up response.

- However, other areas of the brain also exhibit a simple form of sustained activity.

- E.g. In many cortical visual areas, a brief visual stimulus will evoke activity that persists from several hundred milliseconds to several seconds.

- The difference between the PFC and these other brain areas is that the PFC can sustain its activity in the face of distractions.

- E.g. In a visual matching task, the PFC sustains its activity while visual areas are easily disrupted by distractors.

- Learning across time within the PFC

- Rules often involve learning associations between stimuli and behaviors separated in time.

- How can associations learned for a rule/event at one point in time be used for behaviors/rewards at a later time?

- Even though a cue and action were separated in time, information about each was simultaneously present in the PFC, permitting an association to be formed between them.

- Midbrain dopamine (DA) neurons may serve to reinforce patterns of PFC activity responsible for achieving a goal.

- As learning progresses, DA neurons become activated progressively earlier by events that predict reward and cease activation to the now-expected reward.

- However, if a predicted reward fails to appear or appears sooner than expected, DA neurons respond again.

- Thus, midbrain DA neurons seem to code prediction error (the degree to which a reward is surprising) rather than reward itself.

- The goal of a cognitive system isn’t just to predict rewards but also to pursue actions that ensure their procurement.

- This prediction error signal could help in learning by selectively strengthening not only connections that predict reward, but also connections with representations in the PFC that guide the behavior needed to achieve it (downstream connections).

- Studies support this as damage to the PFC disrupts reward evaluation.
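The shift of the DA response from the reward to the predictive cue can be illustrated with temporal-difference (TD) learning, one common formalization of reward prediction error. The notes don't spell out a model, so everything here is an assumption made for the sketch: the five time steps between cue and reward, the learning rate, a discount factor of 1, and a pre-cue value fixed at zero because the cue itself is unpredictable.

```python
def run_trial(values, reward, alpha=0.1):
    """Run one cue-to-reward trial and update the value of each time step in place.

    values[t] is the predicted future reward at step t after cue onset.
    Returns the prediction error at cue onset and the errors at each step.
    """
    n = len(values)
    # The pre-cue value is fixed at 0, so the surprise at cue onset equals values[0].
    cue_error = values[0]
    errors = []
    for t in range(n):
        next_value = values[t + 1] if t + 1 < n else 0.0  # trial ends after last step
        r = reward if t == n - 1 else 0.0                 # reward arrives at the last step
        delta = r + next_value - values[t]                # TD prediction error
        values[t] += alpha * delta
        errors.append(delta)
    return cue_error, errors

values = [0.0] * 5

# First trial: the reward is completely unexpected.
first_cue_error, first_errors = run_trial(values, reward=1.0)

# Extensive training with a reliably delivered reward.
for _ in range(500):
    run_trial(values, reward=1.0)
trained_cue_error, trained_errors = run_trial(values, reward=1.0)

# Omission trial: the predicted reward fails to appear.
omit_cue_error, omit_errors = run_trial(values, reward=0.0)

print("first trial:   cue", round(first_cue_error, 2), "reward", round(first_errors[-1], 2))
print("after training: cue", round(trained_cue_error, 2), "reward", round(trained_errors[-1], 2))
print("omission:      reward-time error", round(omit_errors[-1], 2))
```

Early in learning the error occurs at the time of reward. After training it occurs at cue onset and vanishes at the now-expected reward. When the reward is omitted, the error at the expected reward time is negative.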

- Summary

- The studies reviewed here show that the PFC exhibits the properties required to support cognitive control.

- E.g. Sustained activity robust to distractions/interference, multimodal convergence and integration, feedback pathways to exert influence on other brain structures, and ongoing plasticity that’s adaptive to the demands of new tasks.

- While these properties aren’t unique to the PFC and are found in other brain areas, we argue that the PFC represents a specialization along this specific combination of dimensions that’s optimal for a role in brain-wide control and coordination.

- Activation theory of PFC function

- A simple model of PFC function

- Most neural network models of PFC simulate it as the activation of a set of rule units whose activation leads to the production of a response other than the one most strongly associated with a given input.

- Connections along a pathway are stronger as a consequence of more extensive and consistent use.

- The ability to engage weaker pathways requires adding a set of control units connected to stimulus features.

- We assume the control units in the model to represent the function of neurons in the PFC.
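A toy version of such a model for the Stroop task might look like the sketch below. It is loosely in the spirit of the description above, not the model from the paper: the pathway strengths, the resting bias, the control bias, and the function names are all hypothetical values chosen so that word reading is the stronger pathway.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical connection strengths: word reading is more practiced than color naming.
PATHWAY_STRENGTH = {"word": 3.0, "color": 1.5}
RESTING_BIAS = -4.0   # intermediate units are mostly silent without support
CONTROL_BIAS = 4.0    # top-down support from the control ("PFC") unit for one task

def respond(word, ink, task=None):
    """Return the winning response and the evidence for each candidate response.

    task is None (no control), "word" (read the word), or "color" (name the ink).
    """
    stimulus = {"word": word, "color": ink}
    evidence = {}
    for pathway, strength in PATHWAY_STRENGTH.items():
        # The control unit adds a bias to the task-relevant pathway only.
        # It does not carry any information about the stimulus itself.
        control = CONTROL_BIAS if pathway == task else 0.0
        hidden = sigmoid(strength + RESTING_BIAS + control)
        response = stimulus[pathway]
        evidence[response] = evidence.get(response, 0.0) + strength * hidden
    winner = max(evidence, key=evidence.get)
    return winner, evidence

# The word "GREEN" printed in red ink.
no_control, _ = respond("green", "red")
name_color, _ = respond("green", "red", task="color")
read_word, _ = respond("green", "red", task="word")

print("no control:   ", no_control)   # the stronger word pathway wins
print("color naming: ", name_color)   # control bias lets the weaker pathway win
print("word reading: ", read_word)
```

Without the control bias the habitual word-reading pathway dominates. Turning on the color-naming control unit is enough for the weaker pathway to win the competition, even though its connection strengths are unchanged.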

- Guided activation as a mechanism of cognitive control

- Our model emphasizes that the role of the PFC is modulatory rather than transmissive.

- E.g. Information doesn’t run through the PFC but is instead guided by it along task-relevant pathways.

- An analogy is that the PFC functions like a switch operator for a system of railroad tracks.

- E.g. The brain is a set of tracks (pathways) connecting various origins (stimuli) to destinations (responses). The goal is to get the trains (activity carrying information) at each origin to their proper destination as efficiently as possible while avoiding collisions. If there’s only one train, no intervention is needed. However, if two trains must cross the same track, then some coordination is needed to guide them safely to their destination.

- Patterns of PFC activity can be thought of as a map that specifies which pattern of tracks is needed to solve the task, and this is achieved by biasing signals from the PFC.

- This distinction between modulation vs transmission is consistent with neuropsychological deficits associated with frontal lobe damage.

- E.g. One patient prepared coffee by first stirring then adding cream when the correct order is cream first then stir. The actions still occurred as transmission was intact but the order was incorrect as modulation was impaired.

- Active maintenance in the service of control

- Sustained activity can be viewed as a mechanism of control.

- Weaker pathways rely more on top-down support when faced with competition from a stronger pathway.

- This suggests that increased demand for control requires greater or longer PFC activation, which is what we see in the neuroimaging literature.

- This also explains the reverse case where as a task becomes more practiced, its reliance on control is reduced.

- From a neural perspective, as a pathway is repeatedly selected by PFC bias signals, activity-dependent plasticity mechanisms can strengthen it.

- With strengthening, these circuits can function independently of the PFC and performance becomes more automatic.

- This aligns with studies showing that PFC damage impairs learning while sparing well-practiced tasks.

- Selective attention and behavioral inhibition are two sides of the same coin.

- Attention is the effect of biasing competition in favor of task-relevant information, and inhibition is the consequence for task-irrelevant information.

- We should distinguish the activity-dependent control of the PFC from other forms of control such as the hippocampal system.

- E.g. The hippocampus lays down new tracks while the PFC is responsible for flexibly switching between them.

- Updating of PFC representations

- It’s easier, faster, and cheaper to switch between existing tracks than it is to lay new ones down.

- Representations in the PFC face two competing demands: they must be responsive to relevant changes in the environment (adaptive) but they must also be resistant to irrelevant changes (robust).

- Studies support this as PFC representations are responsive to task-relevant stimuli yet are robust to interference from distractors.

- Damage to the PFC further supports this as two hallmarks of PFC damage are perseverations (inadequate updating) and increased distractibility (inappropriate updating).

- DA neurons may play a role in implementing this as it’s been suggested that they provide a time-sensitive gating mechanism.

- Timing is important because updates must match the conditions.

- DA neurons may learn when to gate through feedback.

- E.g. If an exploratory DA-mediated gating signal leads to successful behavior, then its coincident reinforcing effects will strengthen both the DA signal and the behavior signal.

- This bootstrapping mechanism may be how the PFC self-organizes. It sidesteps the problem of how the DA signals are controlled in the first place, avoiding a theoretical regress (aka recursion).

- E.g. If the PFC controls behavior and DA signals control the PFC, how are DA signals controlled?
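The gating idea above can be sketched as a small simulation. This is a rough illustration under stated assumptions, not the paper's implementation: the two stimulus kinds, the reward values, the learning rate, and the names `update_pfc` and `learn_gating` are all hypothetical. The gate starts out exploratory (it opens half the time for everything) and is reinforced only when opening it leads to reward.

```python
import random


def update_pfc(pfc_state, stimulus, gate_open):
    """Overwrite the maintained representation only when the gate is open."""
    return stimulus if gate_open else pfc_state


def learn_gating(trials=500, lr=0.1, seed=0):
    """Learn when to open the gate from reward feedback alone.

    gate_value[kind] is the learned probability of opening the gate for that
    kind of stimulus (hypothetical categories: "relevant" and "distractor").
    """
    rng = random.Random(seed)
    gate_value = {"relevant": 0.5, "distractor": 0.5}
    for _ in range(trials):
        kind = rng.choice(["relevant", "distractor"])
        gate_open = rng.random() < gate_value[kind]  # exploratory gating
        if not gate_open:
            continue  # the gate stayed shut, so there is nothing to reinforce
        # Assumed outcome: updating on relevant input leads to success,
        # updating on a distractor leads to failure.
        reward = 1.0 if kind == "relevant" else 0.0
        gate_value[kind] += lr * (reward - gate_value[kind])
    return gate_value


if __name__ == "__main__":
    print(learn_gating())
    print(update_pfc("rule A", "rule B", gate_open=False))  # robust: stays "rule A"
    print(update_pfc("rule A", "rule B", gate_open=True))   # adaptive: becomes "rule B"
```

No separate controller tells the gate when to open; the tendency is shaped by the outcomes of its own exploratory openings, which is the sense in which the mechanism bootstraps itself.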

- A simple model of PFC function

- Issues

- Representational power of the PFC

- The tremendous range of tasks that people are capable of raises important questions about the ability of the PFC to support the necessary range of representations.

- The large size of the PFC (over 30% of cortical mass) suggests that it can support a wide range of mappings.

- However, there seems to be an infinite number of tasks that a person can do and it seems unlikely that all possible mappings are represented within the PFC.

- E.g. “Wink whenever I say bumblydoodle.”

- Perhaps some undiscovered representational scheme can support this infinite flexibility of human behavior.

- Or maybe plasticity can account for PFC flexibility.

- As of this paper, we don’t know yet the mechanisms behind the wide and extraordinary flexibility of human behavior.

- PFC functional organization

- How are PFC representations organized?

- Maybe each PFC region handles a different function, or maybe each region has the same function but operates on different types of information/representations.

- Our theory suggests that it’s unlikely that different classes of information will be represented in a modular or discrete form.

- E.g. Most regions of the PFC can respond to a variety of different types of information.

- The PFC may also be organized topographically with each stimulus dimension handled in a different region with some regions being multimodal.

- Monitoring and allocation of control

- A stronger pattern of activity within the PFC produces stronger biasing effects for a certain pathway, which may be at the expense of other pathways through competition.

- Recent studies suggest that allocation of control may depend on signals from the anterior cingulate cortex, which appears to detect conflict in processing.

- E.g. Two trains destined to cross tracks at the same time or the coactivation of competing (mutually inhibiting) sets of units such as both “red” and “green” in the Stroop task.

- Such conflict produces uncertainty in processing and increases the chance of errors.
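One way to make "conflict" concrete is to measure how strongly two mutually inhibiting units are active at the same time, and to raise control in proportion. The sketch below assumes a simple product measure and a linear control adjustment; neither is specified in these notes, and the names `conflict` and `adjust_control` and the `gain` parameter are hypothetical.

```python
def conflict(act_a, act_b):
    """Coactivation of two competing units; zero if either unit is silent."""
    return act_a * act_b


def adjust_control(control, conflict_level, gain=0.5, max_control=1.0):
    """Raise top-down control in proportion to detected conflict, up to a cap."""
    return min(max_control, control + gain * conflict_level)


if __name__ == "__main__":
    # Only "red" active: no conflict, control stays where it was.
    print(adjust_control(0.25, conflict(1.0, 0.0)))
    # Both "red" and "green" fully active: high conflict, control is raised.
    print(adjust_control(0.25, conflict(1.0, 1.0)))
```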

- Mechanisms of active maintenance

- There’s little empirical research on the mechanisms responsible for sustained activity.

- Two possible mechanisms: cellular models that propose neuron bistability and circuit models that propose the recirculation of activity through closed/recurrent loops of neurons.
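The circuit-style account can be illustrated with a single unit that feeds its own output back to itself. This is a minimal sketch under assumptions (a binary threshold unit, a recurrent weight of 1, hypothetical names `step` and `simulate`), not a model from the paper. With the recurrent loop intact, a brief input leaves activity that sustains itself; with the loop cut, activity dies as soon as the input ends.

```python
def step(activity, external_input, recurrent_weight=1.0, threshold=0.5):
    """Binary unit: active if recurrent feedback plus input exceeds threshold."""
    drive = recurrent_weight * activity + external_input
    return 1.0 if drive > threshold else 0.0


def simulate(inputs, recurrent_weight=1.0):
    """Run the unit over a sequence of inputs and return its activity trace."""
    activity = 0.0
    trace = []
    for x in inputs:
        activity = step(activity, x, recurrent_weight)
        trace.append(activity)
    return trace


if __name__ == "__main__":
    brief_cue = [0, 1, 0, 0, 0]
    print(simulate(brief_cue, recurrent_weight=1.0))  # activity outlasts the cue
    print(simulate(brief_cue, recurrent_weight=0.0))  # activity ends with the cue
```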

- Capacity limits of control

- One of the most perplexing properties of cognitive control is its severely limited capacity.

- E.g. Simultaneously talking on the phone and typing an email.

- How many representations can be simultaneously maintained?

- It’s important to distinguish between the capacity limits of cognitive control and short-term memory.

- E.g. Memory depends on different structures than cognitive control.

- No theory to date can explain the capacity limitation itself.

- Prospective control and planning

- Active maintenance can’t account for future planning as we’re unlikely to maintain this information in the PFC for the future.

- More likely, this information is stored somewhere else and then activated at the appropriate time.

- This might involve interactions between the PFC and other brain systems such as the hippocampus.

- Conclusions

- How can interactions between billions of neurons result in behavior that’s coordinated and under control?

- Here, we’ve suggested that this ability of cognitive control stems from the PFC and its features.

- E.g. Diverse inputs and intrinsic connections that provide a suitable substrate for synthesizing and representing diverse forms of information needed to guide performance in complex tasks, capacity for active maintenance of representations, and regulation by neuromodulatory systems.

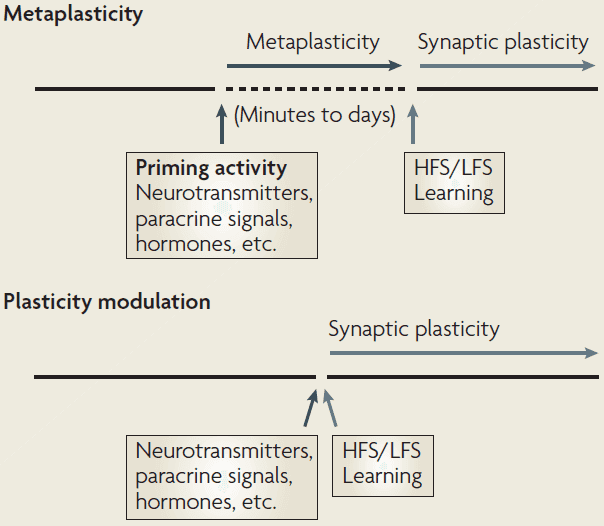

Metaplasticity: tuning synapses and networks for plasticity

- It’s crucial that synaptic plasticity be tightly regulated in the brain so runaway effects don’t occur.

- Activity-dependent mechanisms, also called metaplasticity, have evolved to help implement these computational constraints.

- This paper reviews the various intercellular signalling mechanisms that trigger lasting changes in the ability of synapses to express plasticity.

- Development and learning depend on the ability of neurons to modify their structure and function as a result of activity.

- At the synaptic level, neural activity can generate persistent forms of synaptic plasticity.

- E.g. LTP and LTD.

- There must be safeguards to prevent the saturation of LTP or LTD, which would compromise the ability of networks to discriminate events and store information.

- Extreme levels of LTP can also lead to excitotoxicity.

- Excitotoxicity: excessive activation of glutamate receptors can lead to cell death.

- How is the proper balance of LTP and LTD maintained?

- Various intercellular signalling molecules directly regulate the degree of LTP and LTD that can be induced.

- E.g. GABA, ACh, cytokines, hormones.

- Metaplasticity: a change in the physiological or biochemical state of neurons or synapses that alters their ability to generate synaptic plasticity.

- The “meta” part refers to the higher-order nature of the plasticity; plasticity of synaptic plasticity.

- A key feature of metaplasticity is that these changes outlast the triggering/priming activity and persist until a second round of activity.

- This distinguishes metaplasticity from conventional forms of plasticity modulation where the modulating and regulated events overlap in time.

- Since both plasticity and metaplasticity can be induced simultaneously, it’s difficult to only study metaplasticity.

- One paradigm for inducing metaplasticity involves activation of NMDA receptors (NMDARs).

- NMDAR activation is a key trigger for LTP induction but it can also inhibit subsequent induction of LTP.

- Inhibition of LTP by priming synaptic activity depends on the activation of NMDARs and other receptors.

- NMDAR activation can also facilitate the subsequent induction of LTD.

- Skipping over the molecular details.

- In contrast to the inhibitory effects of NMDAR priming on LTP, activation of group 1 metabotropic glutamate receptors (mGluRs) facilitates both the induction and persistence of subsequent LTP in area CA1.

- So far, the metaplasticity described is homosynaptic meaning that the synapses activated during the priming stimulation are also those that show altered plasticity.

- However, another form of metaplasticity is when activity at one set of synapses affects subsequent plasticity at neighboring synapses. This is called heterosynaptic metaplasticity.

- Results suggest that a cell-wide homeostatic process adjusts plasticity thresholds to keep the overall level of synaptic drive to a neuron within a range that permits plasticity to be expressed.

- Synaptic tagging: when molecules in the synapses of a second pathway, tagged by weak stimulation, capture the proteins synthesized in response to stronger stimulation elsewhere.

- The effects of synaptic tagging are limited to local dendritic compartments, presumably because the proteins are generated at the dendrite and not at the cell body.

- Heterosynaptic metaplasticity might come from changes in membrane properties or excitability of the postsynaptic neuron.

- Intrinsic plasticity: activity-dependent changes in the properties or levels of voltage-dependent ion channels.

- Intrinsic plasticity is strongly predicted to be a metaplasticity mechanism that regulates LTP and LTD.

- The link between learning and metaplasticity remains uncertain.

- Findings in rodents indicate that NMDAR inhibition and mGluR facilitation of LTP are in a dynamic balance and are regulated by sensory experience.

- Metaplasticity prevents the saturation of synaptic potentiation, which might guard against excitotoxicity or epilepsy.

- Furthermore, some metaplastic control mechanisms might be part of a larger set of processes that protect against excitotoxicity and death.

- Metaplasticity can prevent a network from becoming incapacitated by too much LTD or by loss of afferent input.

- Since both metaplasticity and plasticity mechanisms can be active simultaneously, it’s difficult to ascribe particular molecular changes induced by conditioning stimulation to one mechanism or the other.

- E.g. Protein synthesis can promote plasticity persistence but can also raise the threshold for reversing the plasticity as a metaplasticity mechanism.

- Environmental stimuli might first have to lower the plasticity thresholds at these synapses before additional plasticity/learning can occur.

- Synapse-specific regulation provides local controls, while wider heterosynaptic and network changes provide more global regulation.

- Both of these metaplastic processes represent an adaptation that helps keep synaptic efficacy within a dynamic range and larger neural networks in the appropriate state for learning.
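The priming effects described above (prior activity inhibits later LTP and facilitates later LTD) can be pictured as a plasticity threshold that slides with recent activity. The sketch below uses a BCM-style sliding threshold, which these notes don't name, so treat it as a swapped-in illustration. The update rule, the `rate` parameter, and the names `plasticity_sign` and `slide_threshold` are assumptions.

```python
def plasticity_sign(post_activity, threshold):
    """LTP if activity exceeds the modification threshold, LTD if below it."""
    if post_activity > threshold:
        return "LTP"
    if post_activity < threshold:
        return "LTD"
    return "none"


def slide_threshold(threshold, recent_activity, rate=0.5):
    """Move the threshold toward recent activity (strong priming raises it)."""
    return threshold + rate * (recent_activity - threshold)


if __name__ == "__main__":
    threshold = 1.0
    test_stimulus = 2.0
    print(plasticity_sign(test_stimulus, threshold))  # naive synapse

    threshold = slide_threshold(threshold, recent_activity=4.0)  # strong priming
    print(plasticity_sign(test_stimulus, threshold))  # same stimulus after priming

    threshold = slide_threshold(threshold, recent_activity=0.0)  # quiet period
    print(plasticity_sign(test_stimulus, threshold))  # threshold has relaxed
```

The same test stimulus produces different outcomes depending on the synapse's history, and the threshold change persists after the priming activity has ended, which is the defining feature of metaplasticity noted earlier.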

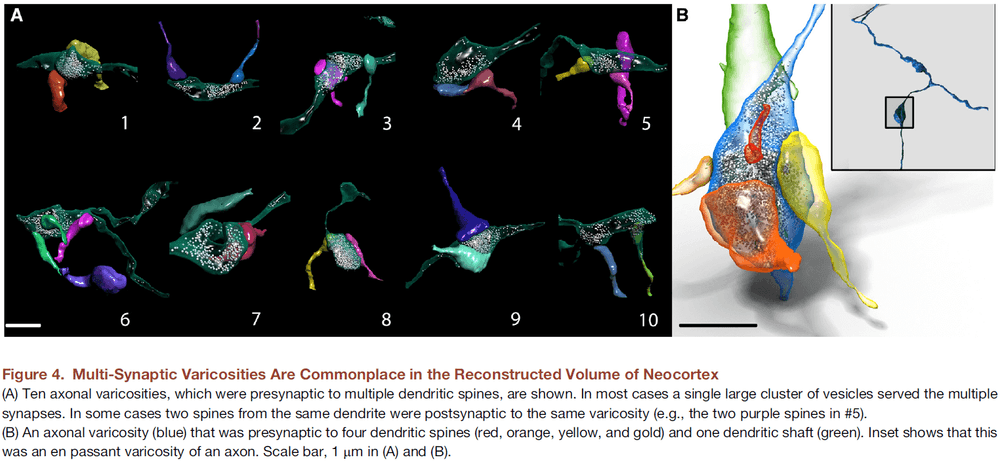

Saturated Reconstruction of a Volume of Neocortex

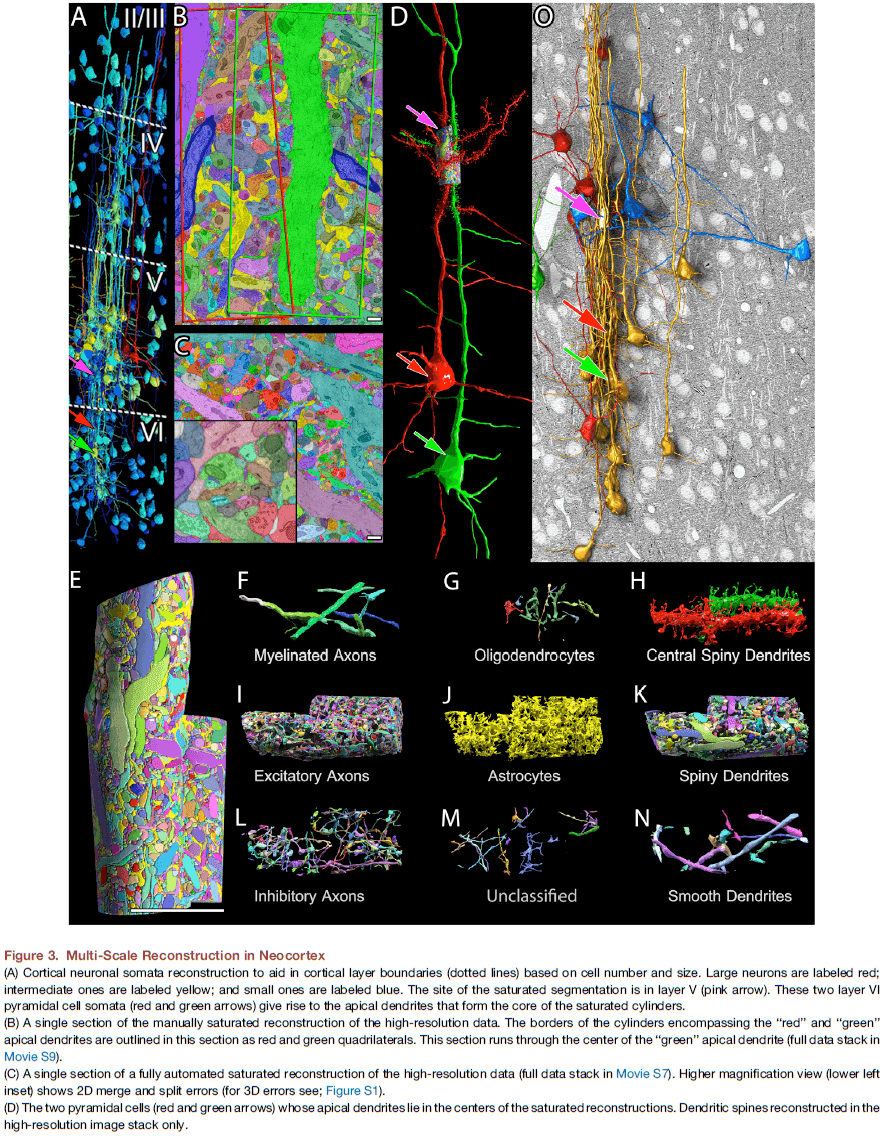

- This paper describes automated technologies to probe the structure of neural tissue at nanometer resolution and to generate a saturated reconstruction of a sub-volume of mouse neocortex where cellular objects and sub-cellular components are rendered and itemized.

- The cellular organization of the mammalian brain is more complicated than that of any other known biological tissue.

- Much of the nervous system’s fine cellular structure is unexplored.

- Using electron microscopy (EM), we’re now beginning to generate the brain’s fine cellular structure data.

- Reconstruction on the scale of mammalian brains is enormously expensive and difficult to justify.

- However, we could map the connectome far more efficiently if the connectivity of the cerebral cortex could be determined without looking, in detail, at every synapse.

- E.g. If the overlap of axons and dendrites at light microscope resolution provides sufficient information to infer connectivity, then the huge data sets of EM images would be redundant and not useful.

- Can fine details of the brain’s structure be inferred from either lower resolution or more sparse analysis?

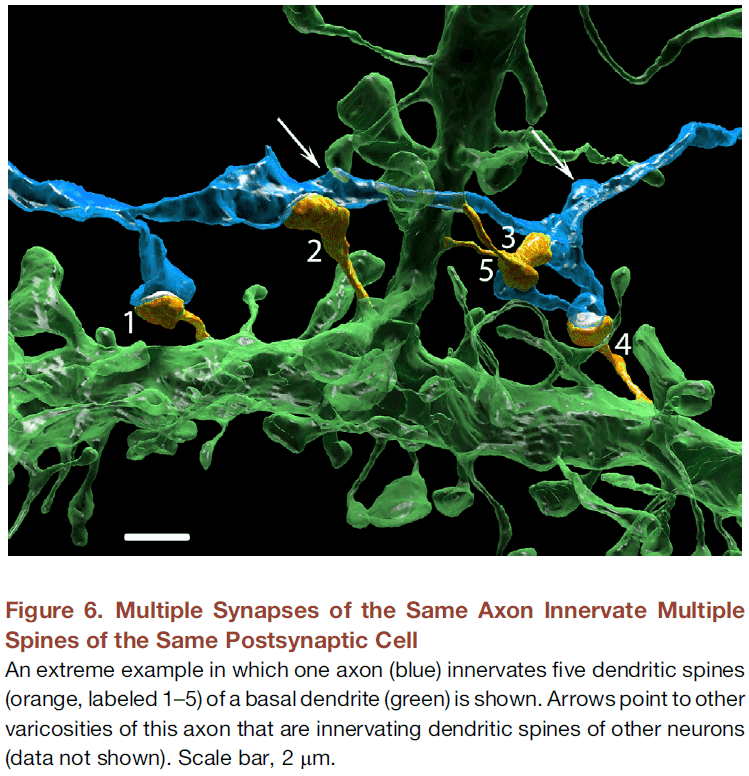

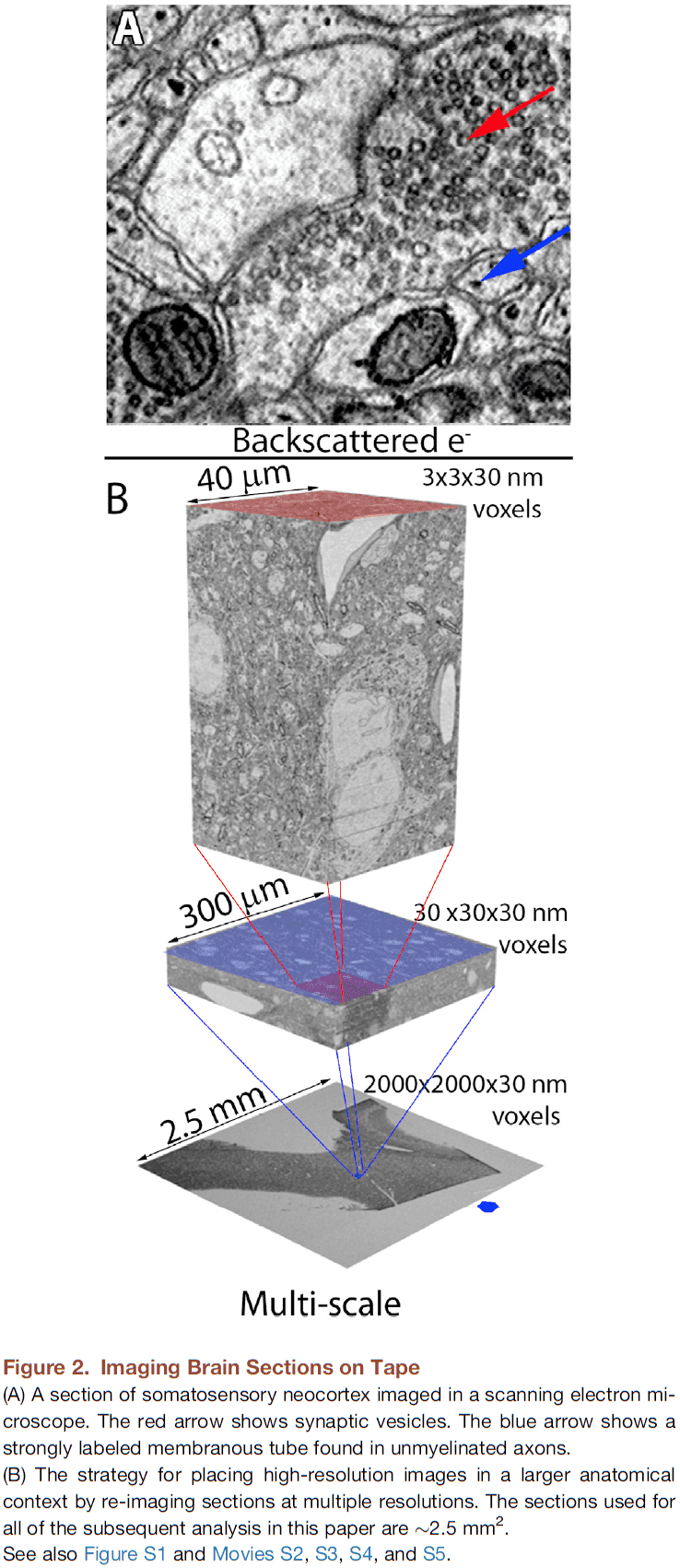

- To test this, we reconstructed all of the connectivity in a small piece of neocortical tissue (1,500 µm³) at a resolution allowing identification of every synaptic vesicle.

- Previous studies in the retina and hippocampus concluded that connectivity wasn’t entirely predictable from the proximity of presynaptic elements to postsynaptic targets.

- Is this also true in the neocortex?

- In neocortex, it’s possible that spatial overlap is sufficient to explain synaptic connections between pairs of axons and dendrites.

- Surprisingly, analysis of the connectomic data was even more challenging than creating the image data or annotating it.

- Technical details

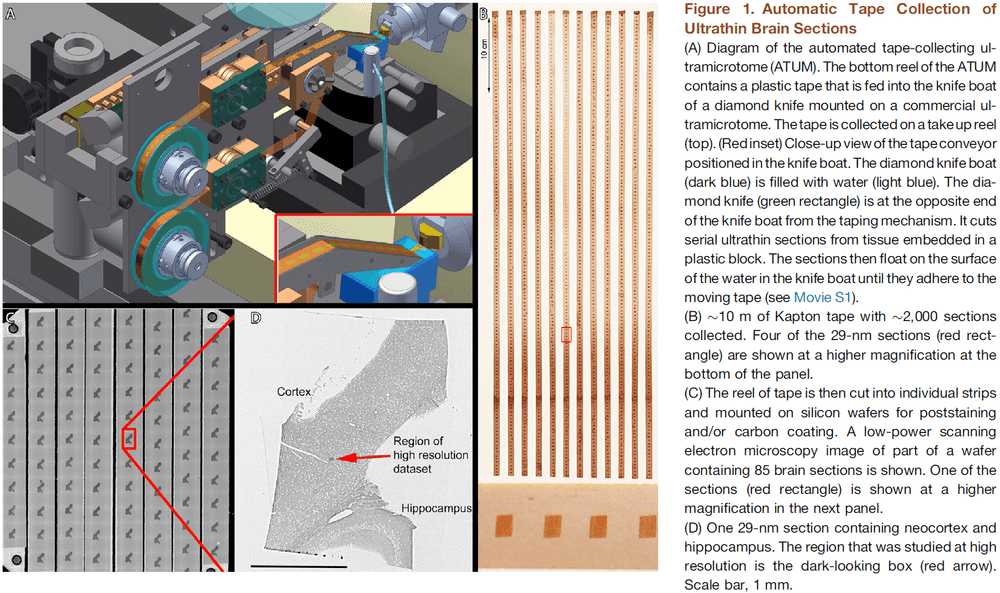

- We built an automatic tape-collecting ultramicrotome (ATUM) that retrieves brain sections from the water boat of a diamond knife immediately as they’re cut.

- To generate the dataset, we collected 2,250 coronal brain sections, each 29 nm thick, at a rate of 1,000 sections per 24 hours.

- We chose 29 nm as section thickness to trace the finest neuronal wires.

- The tape was then cut into strips and placed on silicon wafers to be photographed.

- Once mapped, the wafers constitute an ultrathin section library for repeated imaging of the sections at a range of resolutions.

- The scanning electron microscope had sufficient resolution and contrast to detect individual synaptic vesicles.

- We used a ROTO stain to aid in reconstruction of fine processes.

- The resulting images were sampled with 3-nm pixels, ensuring that membrane boundaries would be oversampled for easier reconstruction.

- The same sample was imaged at lower resolutions (29 nm and 2,000 nm) to rapidly acquire images of larger tissue volumes.

- This generates a multi-scale dataset from the same sample of cerebral cortex.

- Imaging can be sped up by scanning different wafers in parallel on multiple microscopes, by using secondary electron detection, and by imaging on newer microscopes that use multiple scanning beams.

- Three scales of resolution: 2,000 nm, 29 nm, and 3 nm per pixel.
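A quick back-of-the-envelope check of the numbers above, using only the figures quoted in these notes (2,250 sections, 29 nm thickness, 1,000 sections per 24 hours, and 3 nm vs 29 nm pixels). The function names are made up for this example.

```python
def stack_depth_um(sections, thickness_nm):
    """Total depth of the sectioned block in micrometers."""
    return sections * thickness_nm / 1000


def cutting_hours(sections, sections_per_day):
    """Time needed to cut all sections at the stated collection rate."""
    return sections / sections_per_day * 24


def pixel_count_ratio(coarse_nm, fine_nm):
    """How many times more pixels cover the same area at the finer pixel size."""
    return (coarse_nm / fine_nm) ** 2


if __name__ == "__main__":
    print(stack_depth_um(2250, 29))          # depth of the stack in micrometers
    print(cutting_hours(2250, 1000))         # hours of sectioning
    print(round(pixel_count_ratio(29, 3), 1))  # pixels per area, 3 nm vs 29 nm
```

The stack works out to about 65 µm deep and a little over two days of cutting, and imaging at 3 nm takes roughly 93 times as many pixels per unit area as imaging at 29 nm, which is why the lower resolutions are so much faster for covering large volumes.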

- We developed a computer-assisted manual space-filling segmentation and annotation program called “VAST”.