Neuroscience Papers Set 1

By Brian Pho ⋅ January 25, 2021 ⋅ 77 min read ⋅ Papers

Could a Neuroscientist Understand a Microprocessor?

- The authors analyzed a microprocessor using neuroscience techniques. While these techniques reveal interesting structure in the data, they don’t meaningfully describe the hierarchy of information processing in the microprocessor.

- One can take a human-engineered system and ask whether the methods used to study biological systems would allow us to understand an artificial system.

- Tested the “MOS6502” microprocessor.

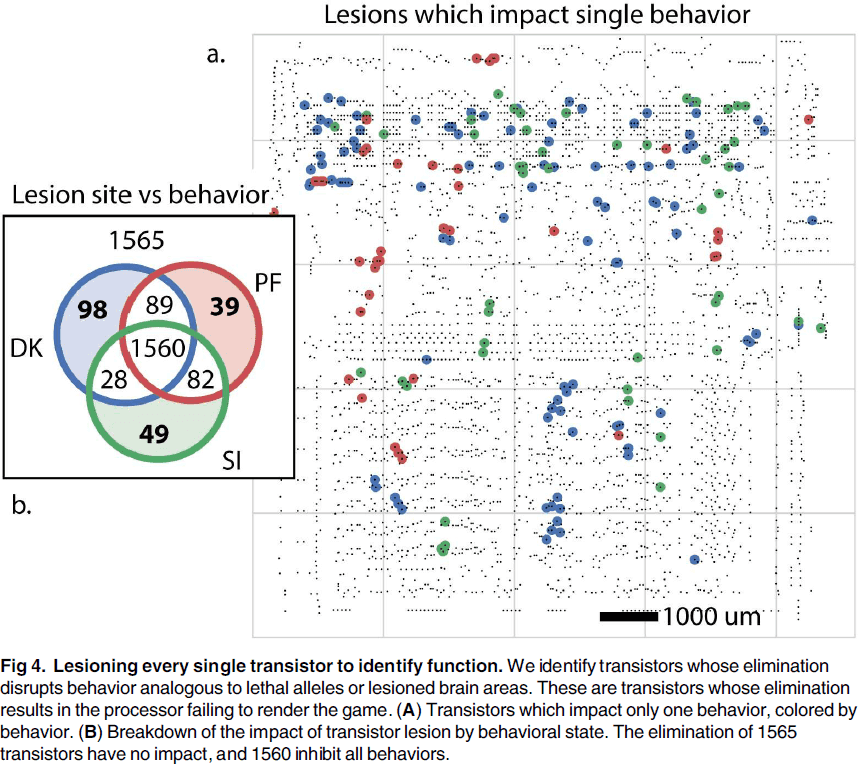

- Lesions let us study the effects of removing a part of the system without affecting the rest of the system.

- While we can lesion the microprocessor by removing transistors, it doesn’t help us get a better understanding of how the processor really works.

- Lesioning highlights the importance of isolating individual behaviors to understand the contribution of parts to the overall function.

- However, it’s extremely difficult to produce behaviors that only require a single aspect of the brain.

- If we record and analyze the tuning properties of transistors in the microprocessor, we find that a small number of transistors are strongly tuned to the luminance of the most recently displayed pixel.

- However, we know that the strongly tuned transistors aren’t directly related to the luminance of the pixel, despite their strong tuning.

- We should be skeptical about generalizing from processors to the brain as there are many differences.

- However, we can’t ignore the failure of the methods used on the processor simply because processors are different from neural systems.

- We can use simulated microprocessors as a testbed for new neuroscience methods.

- The problem isn’t that neuroscientists couldn’t understand a microprocessor; the problem is that they wouldn’t understand it with the approaches they currently use.

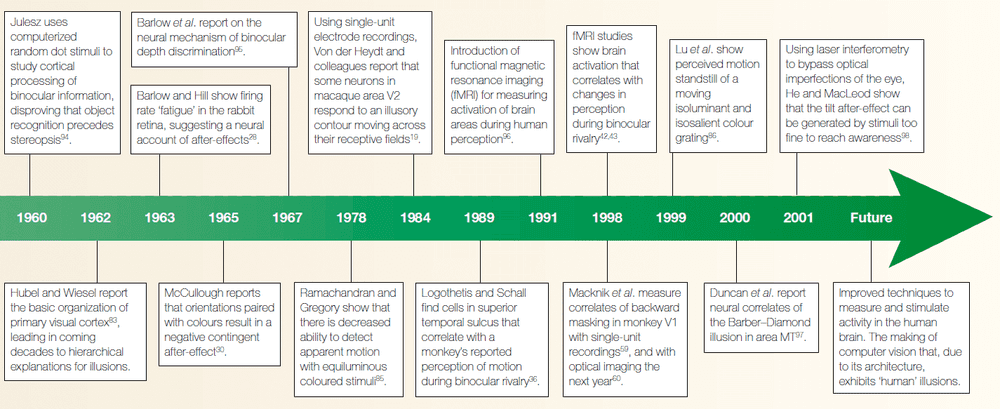

Visual illusions and neurobiology

- Illusions are often the stimuli that exist at the extremes of what our sensory systems have evolved to handle.

- The word “illusion” is difficult to define as, in a sense, all of vision is an illusion.

- An illusion arises from a difference between the perceived stimulus and the actual stimulus.

- E.g. In a cinema or movie theatre, we pay to watch a succession of flat, still images that appear to be rich with motion and depth.

- The systematic study of illusions provides important clues to the neural architecture and its constraints.

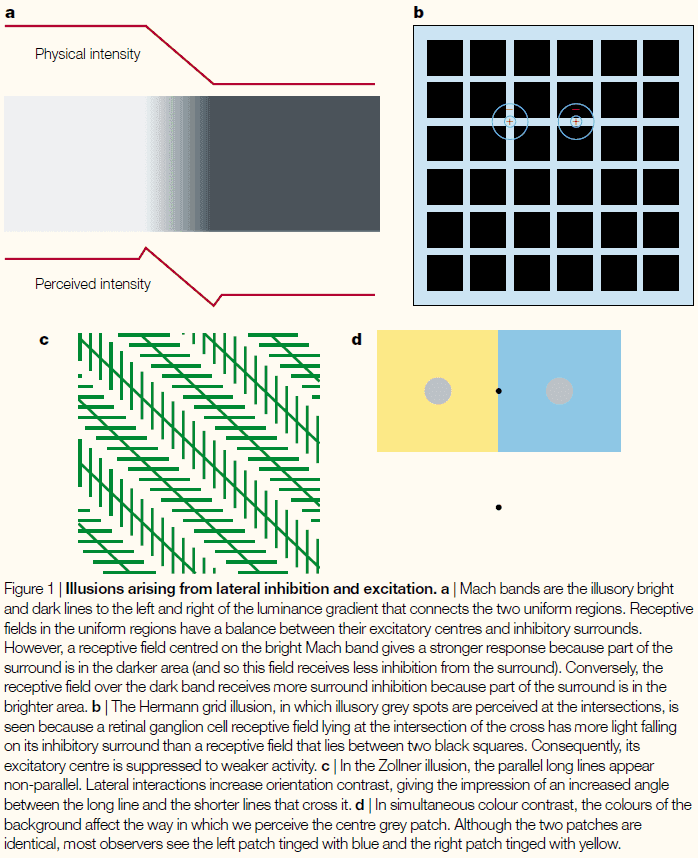

- Certain illusions have enabled us to discover that from the retina onwards, neurons characteristically inhibit or excite their neighbors depending on their connectivity.

- This allows the nervous system to enhance contrast between neighboring regions.
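To make the contrast-enhancement idea concrete, here is a minimal sketch of lateral inhibition on a one-dimensional luminance step. It assumes a toy linear model (not one from the paper) in which each unit subtracts a fixed fraction `k`, a hypothetical value, of its two neighbors’ input. The units on either side of the edge undershoot and overshoot their uniform neighbors, the kind of edge exaggeration seen in Mach bands.

```python
def lateral_inhibition(signal, k=0.2):
    """Each unit's output is its own input minus k times the sum of its two neighbors' inputs.

    The ends are padded by repeating the boundary value, so uniform regions stay uniform.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(signal[i] - k * (left + right))
    return out

# A luminance step: a dark region (1.0) next to a bright region (2.0).
step = [1.0] * 5 + [2.0] * 5
response = lateral_inhibition(step)
print([round(x, 2) for x in response])
# [0.6, 0.6, 0.6, 0.6, 0.4, 1.4, 1.2, 1.2, 1.2, 1.2]
```

The dip (0.4) and peak (1.4) appear only at the border; with `k = 0` the output simply equals the input.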

- Types of illusions

- Illusions from lateral interaction

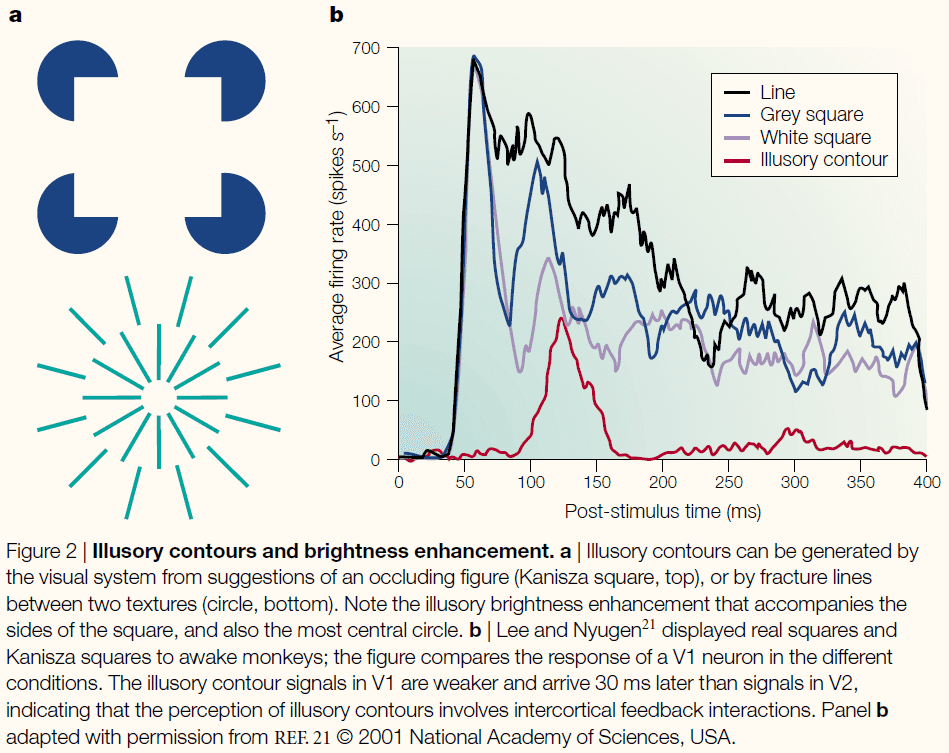

- Illusory contours

- After-effects

- Multistable stimuli

- Timing of awareness

- Opponent process theory of vision: trichromatic signals from cones are fed into subsequent neural stages and show opponent processing.

- This theory explains color after-effects, where you see the opponent color after staring at a color for a long time.

- A consequence of this theory is the idea that competing neural populations exist in a balance.

- If one subpopulation is fatigued, another population can dominate the push-pull competition and briefly control the percept.
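The push-pull account can be sketched with two opponent channels whose gains fatigue with use. This is a toy illustration under assumed dynamics: the multiplicative fatigue rule and its `rate` are hypothetical, and, as the following notes point out, fatigue alone doesn’t explain every after-effect.

```python
def opponent_signal(red_input, green_input, red_gain=1.0, green_gain=1.0):
    """Positive output reads as 'reddish', negative as 'greenish', zero as neutral."""
    return red_gain * red_input - green_gain * green_input

def fatigue(gain, drive, rate=0.5):
    """Toy adaptation rule: a channel loses gain in proportion to how hard it was driven."""
    return gain * (1.0 - rate * drive)

# Before adaptation, a neutral (white) patch drives both channels equally: no color.
before = opponent_signal(1.0, 1.0)

# Staring at red drives, and therefore fatigues, only the red channel.
red_gain = fatigue(1.0, drive=1.0)
green_gain = fatigue(1.0, drive=0.0)

# The same neutral patch now tips the balance toward the opponent color.
after = opponent_signal(1.0, 1.0, red_gain, green_gain)
print(before, after)  # 0.0 -0.5
```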

- It now seems clear that the fatigue of neuronal populations falls short as an explanation for after-effects.

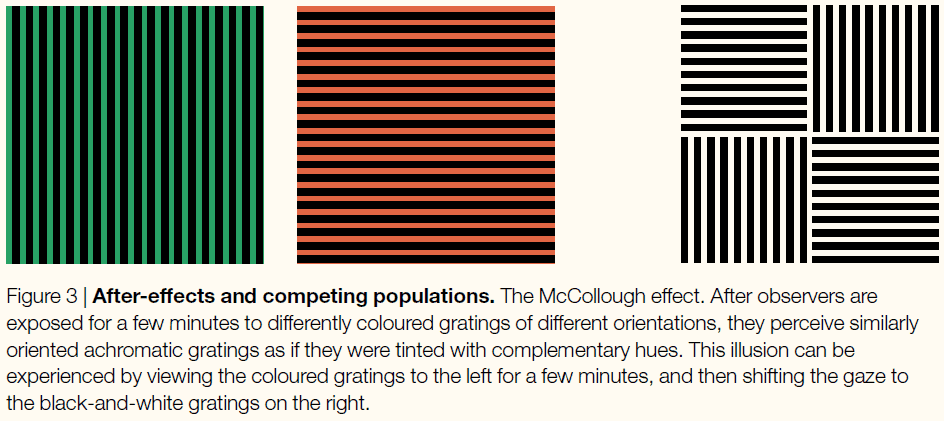

- The McCollough effect can’t be explained by fatigue, as the effect can last overnight and sometimes for days.

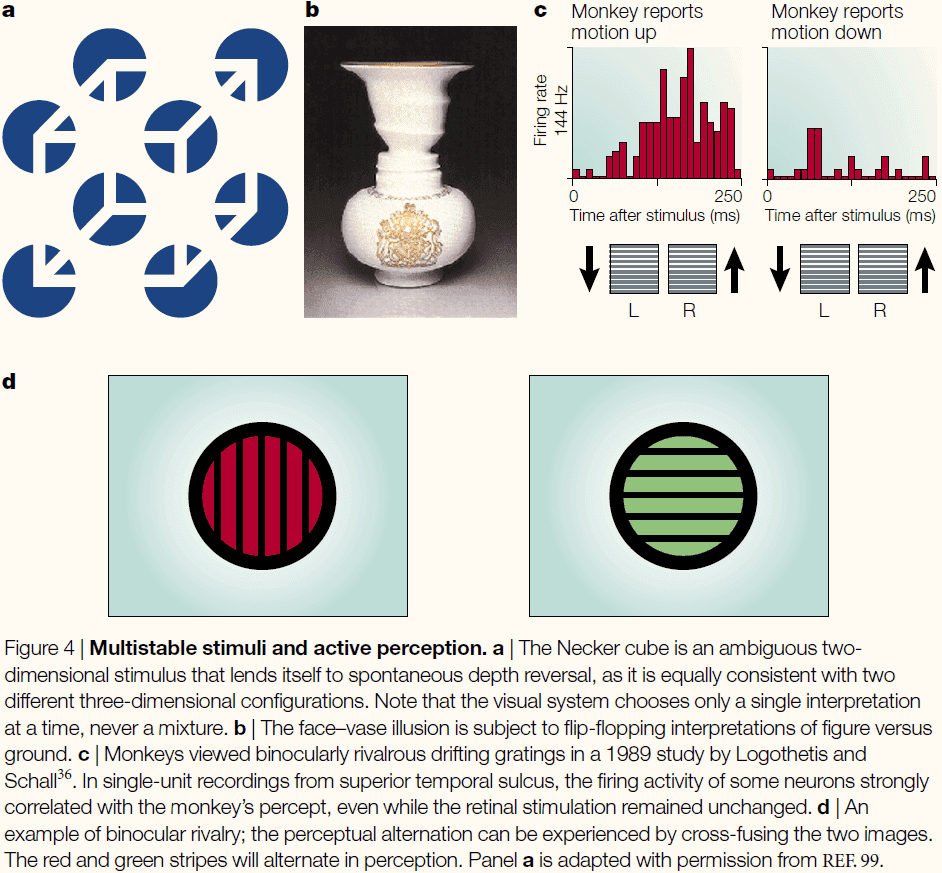

- A very useful example of bistability is a phenomenon known as binocular rivalry.

- Multistable stimuli are invaluable tools for the study of the neural basis of visual awareness, because they allow us to distinguish neural responses that correlate with basic sensory features from those that correlate with perception.

- Several biological principles have been distilled from the careful study of illusions, and these will continue to guide neuroscience research.

- The flash-lag illusion is consistent with the idea that the percept attributed to the time of an event is a function of events that happen in a small window of time after the event.

- This means that conscious perception is a retrospective reconstruction and that the visual system isn’t only feedforward but also relies on feedback.

- Cross-modal illusions have also been observed such as the McGurk illusion where visual information influences auditory information.

- Our understanding of biology can also reveal new illusions, such as the blind spot in our vision caused by the optic nerve.

Is coding a relevant metaphor for the brain?

- The “neural coding” metaphor is misleading due to the following arguments

- The neural code depends on experimental details that aren’t carried by the coding variable, which limits its representational power.

- Neural codes carry information only by reference to things with known meaning.

- Coding variables are observables tied to the temporality of experiments, whereas spikes are timed actions that mediate coupling in a distributed dynamical system.

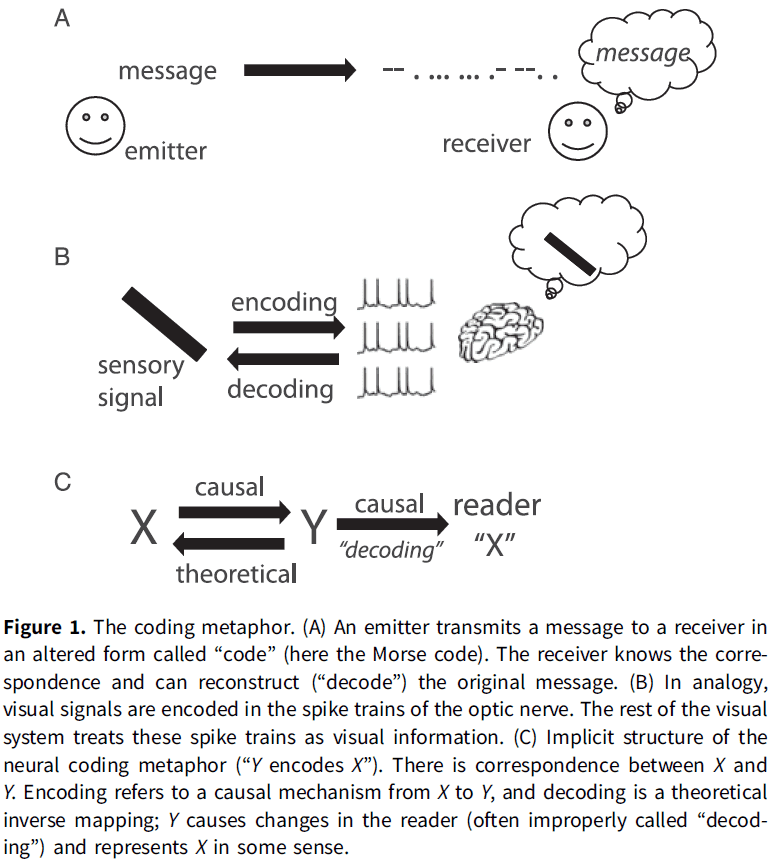

- Neural coding is a communication metaphor.

- E.g. Morse code that maps letters to a sequence of dots and dashes.

- Both Morse code and the retinal code are related to a communication problem: to communicate text messages over telegraph lines, or to communicate visual signals from the eye to the brain.

- Three key properties of the neural coding metaphor

- Technical sense in that there’s a correspondence between two domains.

- E.g. We call the relation between visual signals and spike trains a code to mean that spike trains specify/encode visual signals.

- One can theoretically reconstruct the original message (visual signals) from the encoded message (spike trains), a process called decoding.

- E.g. It’s in this sense that neurons in the primary visual cortex encode the orientation of bars in their firing rate, and that place cells in the hippocampus and grid cells in the entorhinal cortex encode the animal’s location.

- Not all cases of correlations in nature are considered instances of coding.

- E.g. Climate scientists don’t say that rain encodes atmospheric pressure.

- Another assumption is that spike trains are considered messages for a reader, the brain, about the original message.

- This is the representational sense of the metaphor.

- Neural coding as a metaphor implies a causal relation between the original message and the encoded message.

- E.g. Spike trains result from visual signals and not the other way around.

- To be a representation for a reader, the neural code must at least have a causal effect on the reader.

- Three elements of the neural coding metaphor summarized

- Correspondence

- Representation

- Causality

- Most technical work on neural coding uses the first technical sense where the word “code” is used as a synonym for “correlate”.

- Paper argues that scientific claims based on neural coding rely on the representational or causal sense of the metaphor, but that neither of these two senses is implied by the technical sense (correspondence).

- In other words, a correspondence or correlation doesn’t mean representation or causality.

- Coding variables that are shown to correlate with stimulus properties depend on the experimental context, therefore, neural codes don’t provide context-free symbols.

- And we can’t extend the neural code to represent a larger set of properties, such as the context, because context is what defines properties.

- Thus, neural codes have little representational power.

- The fundamental reason is that the coding metaphor conveys an inappropriate concept of information and representation.

- Neural codes carry information by reference to things with known meaning.

- E.g. Morse code only means something if you know that the dots and dashes refer to letters.

- However, perceptual systems can’t refer to anything else for meaning, so they build information from the relations between sensory signals and actions, forming a structured internal model.

- Another issue with the neural coding metaphor is that it tries to fit the causal structure of the brain (dynamic, circular, distributed) into the causal structure of neural codes (atemporal, linear) which doesn’t work.

- The author concludes that the neural coding metaphor can’t be the basis for theories of brain function because it’s disconnected from the causal structure of the brain and it’s incompatible with the representational requirements of cognition.

- The activity of neurons is often said to encode properties.

- E.g. The firing rate of pressure sensory neurons in skin encodes the depth of the stimulus. The deeper the stimulus, the faster the firing rate.

- Francis Crick fallacy of the overwise neuron

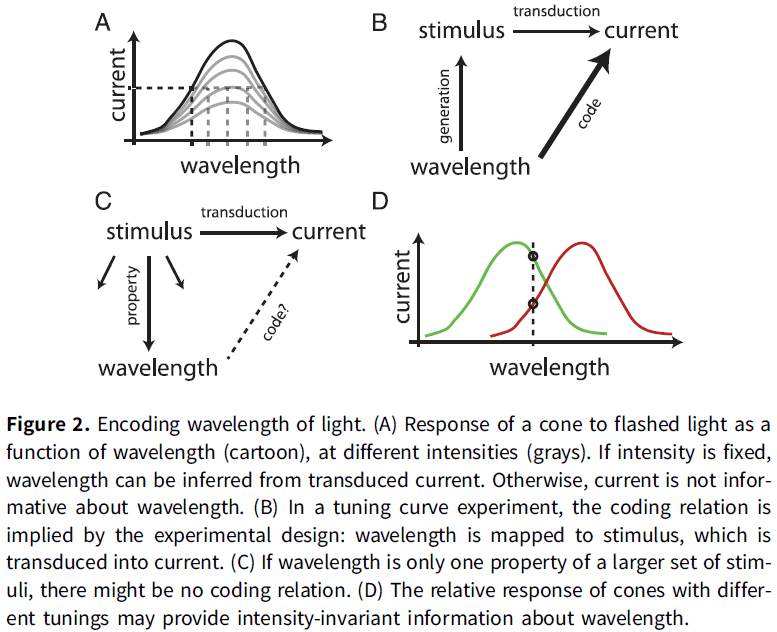

- Cones are broadly tuned to wavelength.

- If different wavelengths of light are flashed, then the amplitude of the transduced current in the cone varies with the wavelength.

- Thus, the current encodes wavelength in the technical sense as one can recover the wavelength from the magnitude of the current. One can be translated into the other.

- Yet animals and humans with a single functional type of cone are color blind. Why? If the cones encode color information, why can’t the organism see color?

- The answer is that the photoreceptor’s current also depends on light intensity, so it doesn’t provide unambiguous information about wavelength in any general setting, even in the narrow sense of correspondence.

- This also applies to any tuning curve experiment.

- The proposition that the neuron encodes the experimental parameter is mainly a property of the experimental design rather than a property of the neuron (which only needs to be sensitive to the parameter).

- This confusion, that a neuron’s firing rate is a function of the stimulus parameter (rather than a context-dependent correlate), underlies influential neural coding theories.

- To say that a neuron encodes a property of the stimuli and isn’t only sensitive to it, we have to rule out all other factors.

- E.g. Wavelength isn’t encoded by single cones but by the relative activity of cones with different tunings. The relative activity doesn’t depend on light intensity or on other properties of the stimulus, so for it we can make the stronger claim.

- Thus, referring to tuning curve experiments in terms of coding promotes a semantic drift, from the modest claim that a neuron is sensitive to some experimental manipulation, to a much stronger claim about the intrinsic representational content of the neuron’s activity.
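The single-cone ambiguity and the two-cone fix can be checked with a toy model. The Gaussian tuning curves, their width, and the multiplicative intensity term are assumptions for illustration (the peaks are only rough human L- and M-cone values), not real cone fundamentals. One cone type confuses a change in wavelength with a change in intensity; the ratio of two cone types cancels intensity out.

```python
import math

L_PEAK_NM, M_PEAK_NM = 560.0, 530.0  # rough L- and M-cone peak wavelengths

def sensitivity(wavelength_nm, peak_nm, width_nm=50.0):
    """Hypothetical Gaussian spectral tuning curve (not measured cone data)."""
    return math.exp(-((wavelength_nm - peak_nm) / width_nm) ** 2)

def cone_response(wavelength_nm, intensity, peak_nm):
    """Toy transduction: response = light intensity x spectral sensitivity."""
    return intensity * sensitivity(wavelength_nm, peak_nm)

# One cone type: a 560 nm light and a suitably brighter 610 nm light give the same
# response, so the response alone cannot tell the two wavelengths apart.
dim_at_peak = cone_response(560.0, 1.0, L_PEAK_NM)
brighter_off_peak = cone_response(610.0, 1.0 / sensitivity(610.0, L_PEAK_NM), L_PEAK_NM)

def l_to_m_ratio(wavelength_nm, intensity):
    """Relative activity of two cone types; intensity cancels out of the ratio."""
    return (cone_response(wavelength_nm, intensity, L_PEAK_NM)
            / cone_response(wavelength_nm, intensity, M_PEAK_NM))

print(math.isclose(dim_at_peak, brighter_off_peak))                  # True
print(math.isclose(l_to_m_ratio(610, 1.0), l_to_m_ratio(610, 10.0)))  # True: intensity-invariant
print(math.isclose(l_to_m_ratio(560, 1.0), l_to_m_ratio(610, 1.0)))   # False: wavelength differs
```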

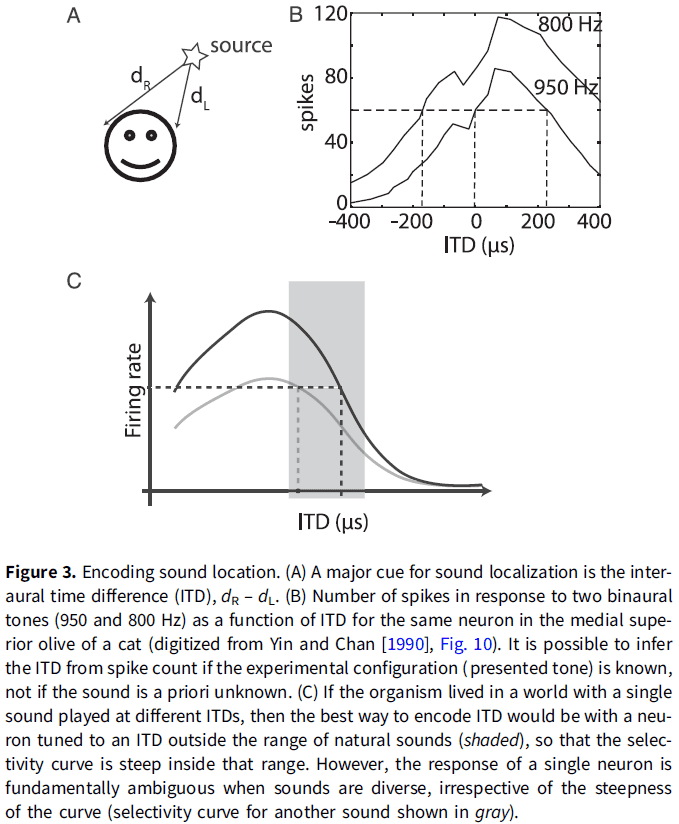

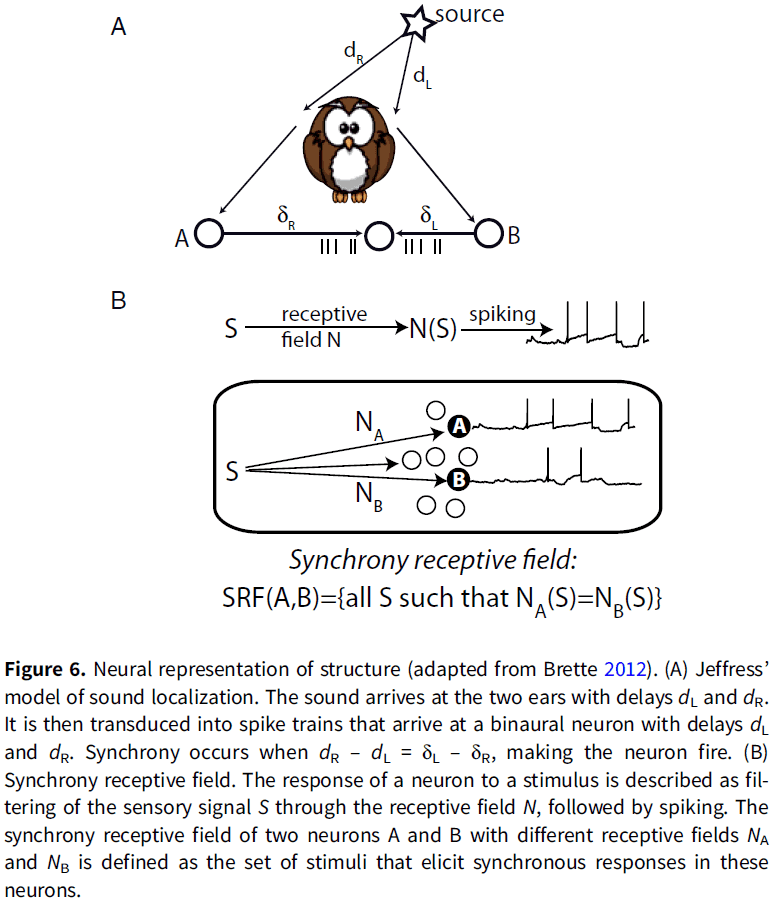

- Another example of when the neural coding metaphor drifts from the technical sense is for sound localization.

- Interaural time difference (ITD): the difference in arrival times of a sound wave at the two ears.

- ITD is a major cue for animals to determine the location of a sound.

- It’s been shown that neurons in the inferior colliculus (IC) have a critical role in localizing sounds in the contralateral field.

- How does the activity of these neurons contribute to sound localization behavior?

- One way is to consider the entire pathway, to try to build a model of how neuron responses in various structures combine to produce behavior that uses the sound’s location.

- Another way is to ask how neurons encode sound location.

- However, theories of how neurons encode sound location don’t explain how the responses of single neurons result in the observed behavior.

- E.g. They state that the firing rates of individual neurons carry enough information to discriminate ITDs with thresholds comparable to human psychophysical thresholds.

- There is a skip between linking neural activity to behavior. Decoding the neural code isn’t done by the brain but by the experimenter.

- By implying that the brain reads the neural code, we manage to make claims about perception and behavior while ignoring the mechanisms by which behavior is produced.

- These claims rely on implicit linking propositions based on abstract constructs, where neural activity is likened to computer memory that the brain stores, retrieves, and manipulates, wherever it is in the brain and whenever it occurs.

- We should also be wary of populations of overwise neurons, such as in slope coding.

- In reality, tuning curves are defined for a specific experimental condition and aren’t context free.

- If neurons encode variables, then what encodes the definition of those variables?

- Perception and memory cannot just be about encoding stimulus properties because this omits the definitions of those properties and of the objects to which they are attached.

- The author argues that the coding metaphor conveys a particular notion of information, information by reference, and this kind of information is irrelevant to perception and behavior.

- How is it possible for the nervous system to infer external properties from neural activity if all that it ever gets to observe is that activity? How does the brain know what the codes refer to?

- My answer is that the nervous system uses the pathway that the activity came from to infer the external properties.

- This is related to the symbol grounding problem because how do spikes, the symbols of the neural code, make sense for the organism?

- One possibility is that the meaning of neural codes is implicit in the structure of the brain that reads them.

- Two objections against this proposition

- Neural plasticity makes the idea of a fixed code implausible.

- E.g. A person born with one hemisphere can see both visual hemifields, implying that the hemisphere has learned the new meaning of the signals from the opposite hemifield.

- We handle new types of information that we didn’t evolve for.

- E.g. We don’t have a brain circuit for chess or driving.

- Neural coding theory treats relations between observables as redundancy that should be eliminated.

- In contrast, the view discussed in the paper argues that relations constitute information.

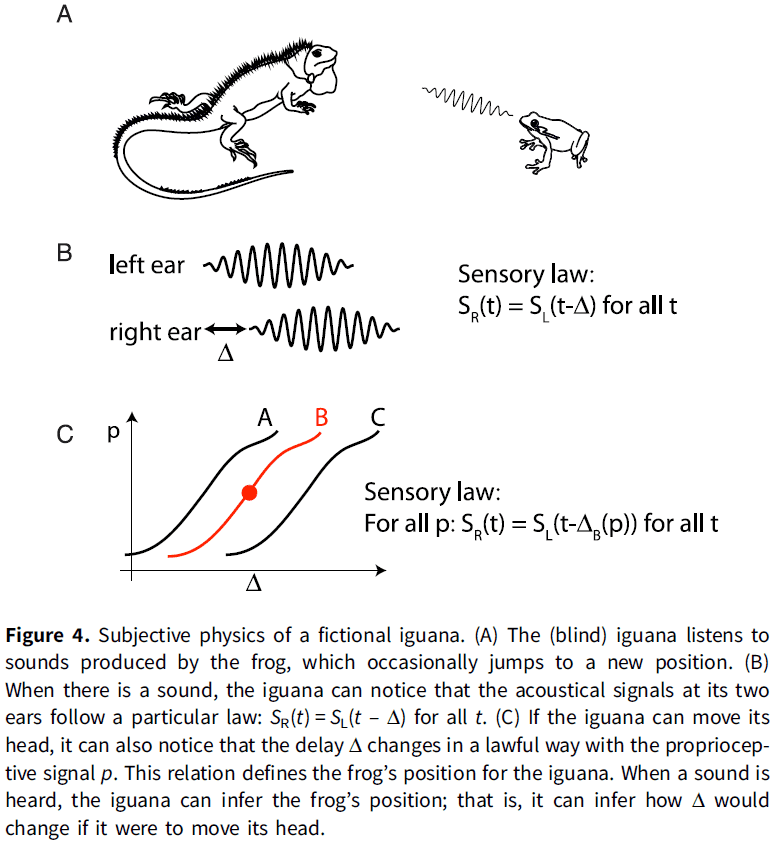

- One way that the nervous system might build relations is by exploiting the “invariant structure” in sensory stimuli.

- E.g. When a sound is produced, each ear receives a delayed version of the signal at the other ear. This delay is an invariant structure of sound because the ears are physically separated.

- The sensory world is made up of random pairs of signals that follow particular laws that the listener can identify. This identification is called the “pick-up of information”.

- An interesting aspect of this alternative notion of information is that the topology of the world projects to the topology of sensory laws.

- E.g. Two different sounds produced by a source at the same position will produce pairs of signals that share the same property (the sensory law).

- This can be assessed without knowing what this property corresponds to in the world. We don’t know what the property is, we just know that the property is invariant.

- This can be implemented in neurons using Hebb’s law.
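The idea that two different sounds from the same position share a sensory law can be illustrated with binaural noise. This sketch swaps in plain cross-correlation for the Hebbian mechanism mentioned above, for brevity, and the signal model (Gaussian noise with an integer sample delay) is an assumption. The estimator recovers the shared delay for two unrelated sounds without any knowledge of what that delay corresponds to in the world.

```python
import random

def binaural_pair(n, delay, seed):
    """Hypothetical ear signals: the right ear receives the left-ear signal `delay` samples later."""
    rng = random.Random(seed)
    source = [rng.gauss(0.0, 1.0) for _ in range(n + delay)]
    left = source[delay:delay + n]
    right = source[:n]  # right[t] == left[t - delay] for t >= delay
    return left, right

def estimate_delay(left, right, max_lag):
    """Lag (in samples) at which `right` best matches a delayed copy of `left`."""
    n = len(left)

    def score(lag):
        return sum(left[t - lag] * right[t] for t in range(lag, n))

    return max(range(max_lag + 1), key=score)

# Two unrelated noise bursts from the same (unknown) position share the same delay.
sound_a = binaural_pair(2000, delay=3, seed=1)
sound_b = binaural_pair(2000, delay=3, seed=2)
print(estimate_delay(*sound_a, max_lag=10), estimate_delay(*sound_b, max_lag=10))  # 3 3
```

Nothing in `estimate_delay` refers to azimuth; the delay is just an invariant shared by the two signal pairs.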

- For an external observer, the sensory laws convey the information that the delay is related to the source’s position.

- However, for the receiver, they don’t know what the sensory law conveys as nothing tells it what it is nor can it infer it from the message.

- The important point here is to focus on the perspective of the receiver. If we are in the place of the receiver aka the organism, we can’t infer what the sensory signal means.

- Now consider a receiver that can turn its head. It observes that there’s a lawful relation between a proprioceptive signal (the head’s position) and the observed delay.

- Now when the receiver observes sounds with a particular delay, it can infer that if it were to move its head, then the delay would change in a predictable way.

- For the receiver, the relation between acoustical delay and proprioception defines the spatial position of the source.

- We note that the perceptual inference involved here does not refer to a property in the external world (frog position), but to manipulations of an internal sensorimotor model such as head position.

- The kind of information available to an organism isn’t Shannon information (correspondence to external properties of the world) but internal sensorimotor models.

- Animals are interested in such models because they can be manipulated to predict the effects of hypothetical actions.

- While this type of model seems similar to predictive coding theory, it isn’t the same: predictive coding models map internal variables to observables, while this model is more akin to models in physics, which form relations between observables (F = ma).

- Generative models aren’t the kind of internal models described in the previous section.

- The word “predictive” is another baggage term in neuroscience as it is used in the technical sense such as in predictive coding, where it means that a neuron’s firing correlates with its sensory inputs, but the term is also used to refer to how an organism forms behavioral predictions.

- There isn’t any indication of how a theory based on neural coding might explain anticipatory behavior.

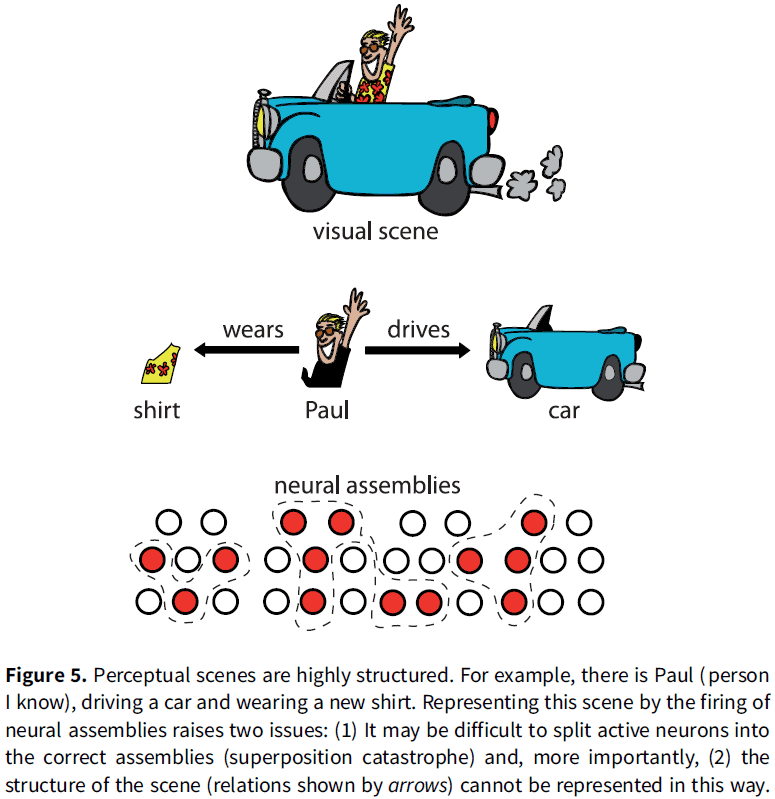

- For memory, cell assemblies can’t be used to implement memory because they’re unstructured and, thus, can’t represent structured internal models.

- E.g. Analogous to the “bag of words” model in text retrieval where a text is represented by a set of words and all syntax is discarded.

- The main objection is that cell assemblies encode objects or features to be related, but not the relations between them.

- Synchrony is one possible solution to bind features of an object represented by neural firing.

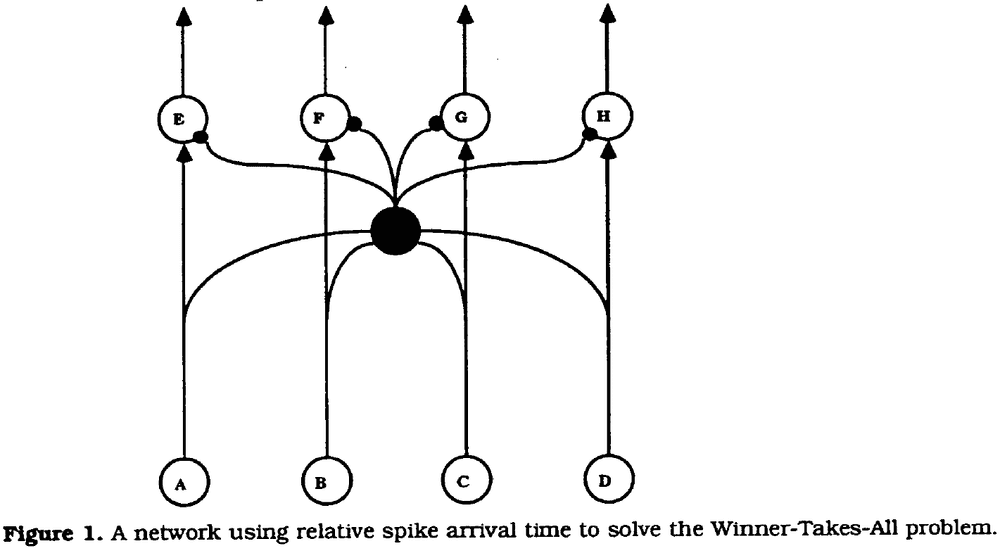

- E.g. In the Jeffress model of ITD coding, neurons receive inputs from both ears with different conduction delays. When input spikes arrive simultaneously, the neuron spikes. Thus, a neuron spikes when the two acoustical signals at the two ears match its specific conduction delay. In this model, the neuron’s firing indicates whether the signals satisfy a particular sensory law.

- This model has been generalized with the concept of a “synchrony receptive field”, which is the set of stimuli that elicit synchronous responses in a given group of neurons.

- Although synchrony can represent relations, neither binding by synchrony nor synchrony receptive fields solve the general problem of binding, because only one type of relation can be represented by synchrony and only a symmetrical one.
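A minimal sketch of the Jeffress coincidence-detection scheme described above. The spike times, the toy ITD of 0.3 ms, the set of internal delays, and the coincidence window are all hypothetical values, and real delay lines and detectors are far noisier. Each detector delays its left-ear input by its own internal delay, so only the detector whose delay matches the ITD receives simultaneous inputs.

```python
def jeffress_detectors(left_spikes, right_spikes, internal_delays, window=0.05):
    """Count input coincidences for each hypothetical coincidence detector.

    A detector with internal delay d delays its left-ear input by d, so it
    responds when a left spike at time t and a right spike at time t + d
    arrive together (within `window`). Times are in milliseconds.
    """
    counts = {}
    for d in internal_delays:
        counts[d] = sum(
            1
            for tl in left_spikes
            for tr in right_spikes
            if abs((tl + d) - tr) <= window
        )
    return counts

# Sound from the left: each right-ear spike lags its left-ear spike by 0.3 ms (toy ITD).
left = [1.0, 3.0, 5.0, 7.0]
right = [t + 0.3 for t in left]
delays = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]

counts = jeffress_detectors(left, right, delays)
best = max(counts, key=counts.get)
print(best)  # 0.3
```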

- Neural coding theories generally rely on the representational sense of the metaphor, the idea that neural codes are symbols standing for the properties that the brain manipulates, but there’s no evidence that this sense is valid.

- Worse, there’s empirical evidence and theoretical arguments against it.

- The coding metaphor has a dualistic structure and separates the function of the brain into two distinct components

- A component that encodes the world into the activity of neurons.

- A component that decodes that activity into an internal model.

- The two components (encoding and decoding) are indistinguishable in behavior, because no behavior involves just one of them.

- The question “does the brain use a firing rate code or a spike timing code?” is the same as asking “does the brain use the BOLD code?”

- Neural codes based on averaging over trials don’t have causal powers because percepts are experienced now, not on average.

- Neural codes have much less representational power than generally claimed or implied.

- The causal structure of neural coding metaphor is incongruent/incompatible with the causal structure of the brain.

- So what else, if not coding?

- The author suggests developing models of the full sensorimotor loop.

- E.g. Instead of looking for neural codes of sound location, look for neural models of auditory orientation reflexes.

- That is, to see neural activity as what it really is: activity.

- Action potentials are potentials that produce actions and they aren’t hieroglyphs to be deciphered.

Our understanding of neural codes rests on Shannon’s foundations commentary

- When applying Shannon’s theory of information to understanding the brain, what determines the set of possible messages?

- Brain structure determines the sets of possible messages.

- E.g. The set of possible color messages is restricted by the three types of cones set up by the brain.

- Shannon’s source-coding theorem enables us to understand why the brain imposes this structure on color messages it receives as an efficient code must reflect the source statistics.

- E.g. For color, the source statistics are the statistics of the reflectance spectra of surfaces in the natural world.

- The brain’s way of encoding color captures a large part of the information available.

- That is, we capture a specific band of the electromagnetic spectrum (400-700 nm) because it carries a lot of information about the real world, compared to infrared or ultraviolet.

- An understanding of the brain’s codes is as essential to neuroscience as an understanding of the genetic code is to biology.
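The source-coding point, that an efficient code must reflect the source statistics, can be shown with a textbook Huffman code on a toy four-symbol source. The alphabet and probabilities are hypothetical and unrelated to actual color statistics; Huffman coding is just one standard way to build an optimal prefix code. Matched to its source, the code’s mean length equals the entropy; applied to a source with different statistics, it does worse than a plain 2-bit code.

```python
import heapq
import math

def entropy_bits(probs):
    """Shannon entropy of a source, in bits per symbol."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def huffman_lengths(probs):
    """Codeword length for each symbol of a Huffman code built from `probs`."""
    # Heap entries: (subtree probability, unique tiebreak, symbols in the subtree).
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probs}
    next_id = len(heap)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        for s in syms1 + syms2:
            lengths[s] += 1  # merging two subtrees adds one bit to each of their codewords
        heapq.heappush(heap, (p1 + p2, next_id, syms1 + syms2))
        next_id += 1
    return lengths

def mean_length(probs, lengths):
    """Average codeword length (bits per symbol) when the source has statistics `probs`."""
    return sum(probs[s] * lengths[s] for s in probs)

matched = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}  # hypothetical source statistics
code = huffman_lengths(matched)
uniform = {s: 0.25 for s in matched}                     # a source with different statistics

print(code)                                              # {'a': 1, 'b': 2, 'c': 3, 'd': 3}
print(mean_length(matched, code), entropy_bits(matched))  # 1.75 1.75
print(mean_length(uniform, code), entropy_bits(uniform))  # 2.25 2.0
```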

The origin of the coding metaphor in neuroscience commentary

- The question of eliminating “coding” shouldn’t be in reference to the truth of the coding metaphor, but on its utility.

- We shouldn’t get bogged down in wondering whether the brain “really” encodes information about the world. It doesn’t.

- The questions, rather, are: Is the metaphor still useful for us, today? Does the usefulness of the metaphor outweigh its inaccurate connotations? Or, has it outlived its usefulness entirely?

Is coding a relevant metaphor for building AI?

- The neural coding metaphor is an insufficient guide for building AI.

- The history of AI tells us that the most useful principles, and the richest theoretical insights, emerged from studying control, optimization, and learning processes rather than the particularities of representations or codes.

Consciousness: The last 50 years (and the next)

- One of the defining questions for the mind and brain sciences has been the relationship between the subjective experience of consciousness and its biophysical basis.

- Consciousness science over the last 50 years can be divided into two epochs.

- From the mid-1960s to 1990, consciousness was a fringe topic.

- Since the late 1980s and early 1990s, there has been a surge of research into consciousness.

- Consciousness: 1960s until 1990

- By the mid-1960s, behaviorism, which had dominated psychology for much of the 20th century, was retreating.

- There was growing interest in the dependency of particular aspects of consciousness on specific brain properties.

- E.g. Split-brain patients.

- One of our most deeply held assumptions is that consciousness is unified.

- However, there’s evidence against this assumption: lesions to the medial temporal lobe, in the case of patient H.M., impaired only explicit, conscious memories and not other forms of memory such as motor skills and semantic memory.

- Libet experiment paradigm: comparing the brain signals before and during the conscious decision to move.

- Consciousness: 1990s until the present

- The revival of consciousness as a scientific study can be marked by the landmark paper, “Towards a neurobiological theory of consciousness”.

- Neural correlates of consciousness (NCCs): the minimal neuronal mechanisms jointly sufficient for any conscious percept.

- E.g. In bistable perception such as binocular rivalry (the Necker cube is a related bistable figure), implanted electrodes found that neuronal responses in early visual areas (such as V1) tracked the physical stimulus rather than the percept, while neuronal responses in higher visual areas (such as IT) tracked the percept rather than the physical stimulus.

- Global workspace theory: the idea that modular and specialized processors compete for access to a global workspace.

- The road ahead

- Review of Integrated Information Theory (IIT) and predictive coding/Bayesian brain.

- A great deal is now known about how embodied and embedded brains shape and give rise to various aspects of conscious level, content, and self.

- However, much more remains to be discovered.

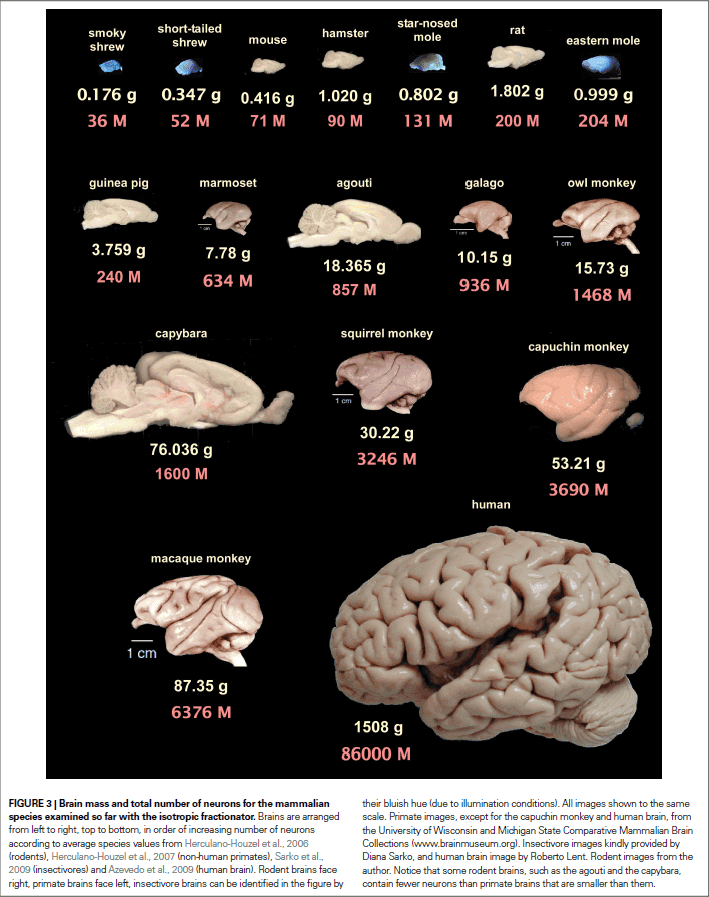

The human brain in numbers: a linearly scaled-up primate brain

- Brain size can’t be used as a proxy for the number of neurons in the brain because neuronal densities differ between brains.

- The human cerebral cortex holds only 19% of all brain neurons; the rest are in the cerebellum or brain stem.

- Two advantages of the human brain

- It’s built according to very economical, space-saving, scaling rules (that apply to other primates).

- It’s the largest among primate brains, hence it contains the most neurons.

- Paper findings argue in favor of the view that cognitive abilities are centered on absolute numbers of neurons, rather than on body size/encephalization.

- Oddly, the usual explanation of our exceptional abilities isn’t brain-centered but body-centered: encephalization measures brain size relative to body size.

- People believe that our superior cognitive abilities are a result of our brain being bigger than expected for our body size.

- How many neurons does the human brain have, and how does that compare to other species?

- The usual answer is that we have 100 billion neurons and 10 times more glial cells.

- Among mammals and birds, species with the largest brains tend to have a greater range and versatility of behavior than those with smaller brains.

- E.g. Larger-brained birds such as corvids, parrots, and owls have more complex behavior than smaller-brained birds.

- Since whales and elephants have larger brains than we do, why should we be more cognitively able compared to them?

- Encephalization quotient (EQ): a measure of how much the observed brain mass of a species deviates from the expected brain mass for its body mass.
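As a worked example of the definition, the sketch below computes EQ using Jerison’s classic mammalian fit, expected brain mass ≈ 0.12 × (body mass)^(2/3) with masses in grams. Treat these constants as one common convention rather than the paper’s choice: a different reference group of species gives a different fit and therefore different EQs. The 1.5 kg brain mass is the adult male average cited in these notes; the 70 kg body mass is an illustrative assumption.

```python
def encephalization_quotient(brain_g, body_g, k=0.12, exponent=2 / 3):
    """Observed brain mass divided by the brain mass expected for this body mass.

    Expected mass follows the power law k * body_g ** exponent (masses in grams).
    The defaults are Jerison's mammalian constants; other fits change every EQ.
    """
    expected_g = k * body_g ** exponent
    return brain_g / expected_g

human_eq = encephalization_quotient(brain_g=1500, body_g=70_000)
print(f"{human_eq:.1f}")

# An animal whose brain sits exactly on the reference curve has EQ = 1.
on_curve = encephalization_quotient(0.12 * 1000 ** (2 / 3), 1000)
```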

- Problems with EQ

- It isn’t obvious how a larger-than-expected brain mass confers a cognitive advantage.

- E.g. When we compare small-brained animals with very large EQs to large-brained animals with smaller EQs, we find that the large-brained animals have better cognitive performance even though they have smaller EQs.

- The body-brain mass relationship depends on the precise set of species included in the calculation, so we shouldn’t compare apples to oranges.

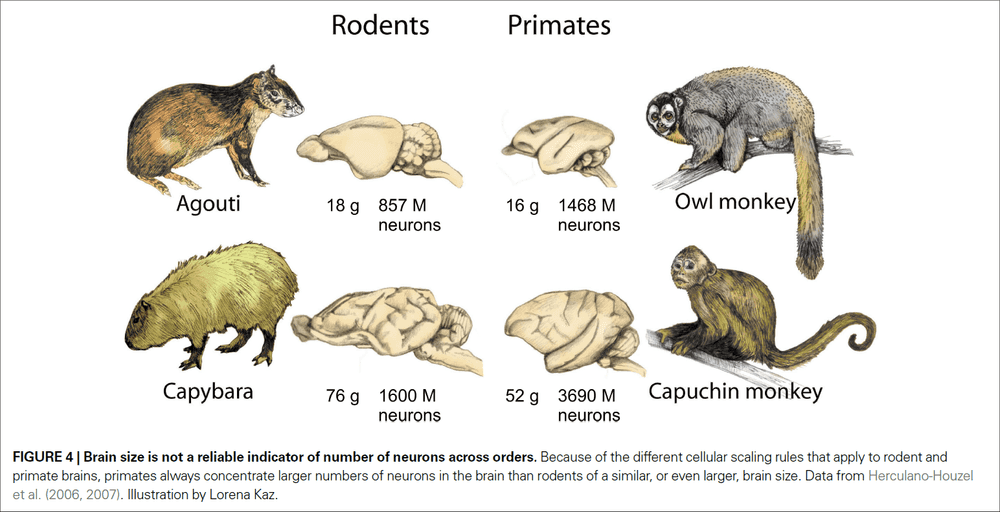

- Another false assumption is that brains are all built the same. However, we know that some brains scale much better and are built denser than other brains.

- The number of neurons in the cerebral cortex increases coordinately with the number of neurons in the cerebellum in humans.

- Brain size isn’t a reliable indicator of number of neurons because different cellular scaling rules apply to rodents, primates, and insectivores.

- In rodents, a larger number of neurons is accompanied by larger neurons, so brain mass grows faster than the number of neurons.

- The adult male human brain has, on average, 86 billion neurons and 85 billion non-neuronal cells in 1.5 kg.

- Remarkably, the human cerebral cortex, which represents 82% of brain mass, holds only 19% of all neurons in the human brain.

- The relatively large human cerebral cortex, therefore, isn’t different from the cerebral cortex of other animals in its relative number of neurons.

- If a rodent brain had 86 billion neurons, like the human brain, we would expect it to weigh 35 kg, beyond the largest known brain mass (9 kg, for the blue whale) and probably physiologically unattainable.

- Being a primate endows us with seven times more neurons than would be expected if we were rodents.

- The human brain is a linearly scaled-up primate brain, with the expected number of neurons for a primate brain of its size.

- It’s likely that humans don’t have truly unique cognitive abilities, but rather the combination and extent of abilities is what makes us great.

- Exponential combination of processing units, and therefore of computational abilities, leads to events that may look like “jumps” in the evolution of brains and intelligence.

- Since neurons interact combinatorially through synapses, the increase in cognitive abilities afforded by increasing the number of neurons in the brain can be expected to increase exponentially with absolute number of neurons.

- We should disregard body and brain size and focus more on the absolute number of neurons.

- Glial cells in the human brain are at most 50% of all brain cells.

- Two advantages of the human brain

- It scales as a primate brain meaning it scales economically.

- It has the largest number of neurons.

Neuroscience: In search of new concepts

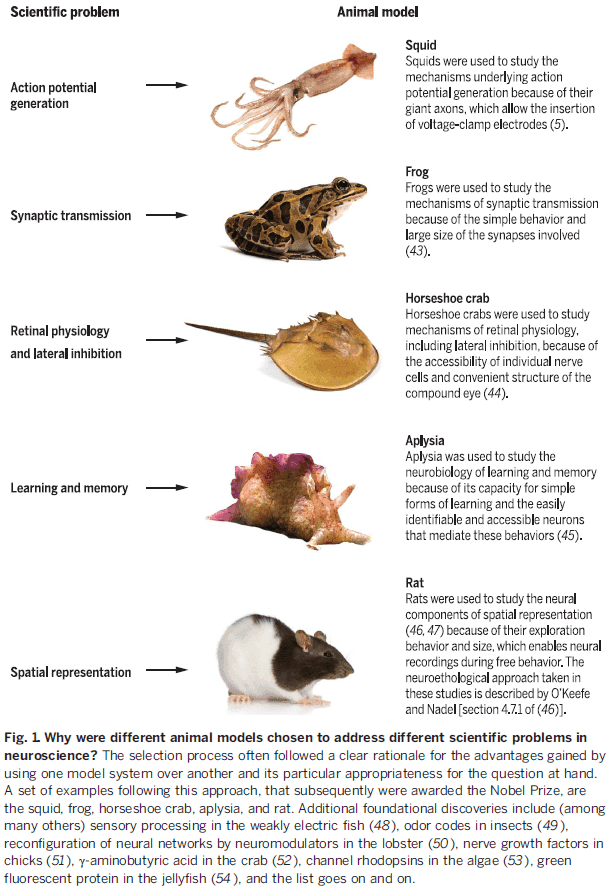

The emperor’s new wardrobe: Rebalancing diversity of animal models in neuroscience research

- Is the great diversity of questions in neuroscience best studied in only a handful of animal models?

- Neuroscience has been converging, and continues to converge, on only a few select model organisms.

- E.g. Mouse and monkey.

- Krogh’s principle: for a large number of problems, there will be some animals that will be the most convenient to study.

- E.g. Hodgkin and Huxley used the squid to understand the mechanisms behind action potential (AP) generation because it has a giant axon (~1 mm in diameter).

- Benefits of convergence (using only a few animal models)

- Rapid development of tools to study model nervous systems.

- Standardization of animal procedures such as housing and breeding.

- Reduced costs and simplicity.

- Certain functions are specialized in only a few animals, which makes them difficult to study if we ignore those animals as models.

- E.g. Vocal mechanisms such as language and songs.

- Another benefit of studying a variety of model organisms is that they may have specialized functions that we would like to have or that we can compare ourselves to.

- E.g. Sound localization. Studying the barn owl has revealed that its neural computation for sound localization follows the Jeffress model almost exactly, relying on coincidence detection of excitatory inputs arriving from both ears. Yet rodents solve the problem differently, using inhibitory inputs that adjust the temporal sensitivity of coincidence-detection neurons.

- The comparative approach serves as an extremely powerful tool to assess the validity of universal principles.

- What should a scientist looking 30 years into the future do?

- One can, and should, think carefully and select the model system for the scientific question, rather than feel compelled to select the scientific question for the model system.

Big data and the industrialization of neuroscience: A safe roadmap for understanding the brain?

- Three major issues

- Is the industrialization of neuroscience the soundest way to achieve substantial progress in knowledge about the brain?

- Do we have a safe “roadmap” based on scientific consensus?

- Do these large-scale approaches guarantee that we will reach a better understanding of the brain?

- Paper emphasizes the contrast between the accelerating technological development and the relative lack of progress in conceptual and theoretical understanding in brain sciences.

- We may be letting technology-driven, rather than concept-driven, strategies shape the future of neuroscience.

- E.g. The Blue Brain project, the European “Human Brain Project”, and the US BRAIN initiative.

- Ways that technology has revolutionized neuroscience

- Technical level: high-resolution recording of large neural ensembles and single-spike resolution in vivo.

- Methodological level: new standards in experimentation and data acquisition.

- Data production level: compiling genomic, structural, and functional databases.

- Analysis level: dimensionality reduction and pattern-searching algorithms.

- Modeling level: deep learning.

- This is a radical change in the way we do science, where new directions are launched by new tools rather than by new concepts.

- E.g. Many leading scientists and funding agencies now share the view that “progress in science depends on new techniques, new discoveries and new ideas, probably in that order.”

- However, the author argues that it should be the other way around: conceptual guidance is required to make the best use of technological advances.

- “Technology is a useful servant but a dangerous master.”

- Big data isn’t knowledge.

- Four levels of information

- Data

- Information

- Knowledge

- Wisdom/Understanding

- The major difference between brain science and particle physics is that theorists in particle physics are involved before, and not after, the hypothesis-driven data are collected.

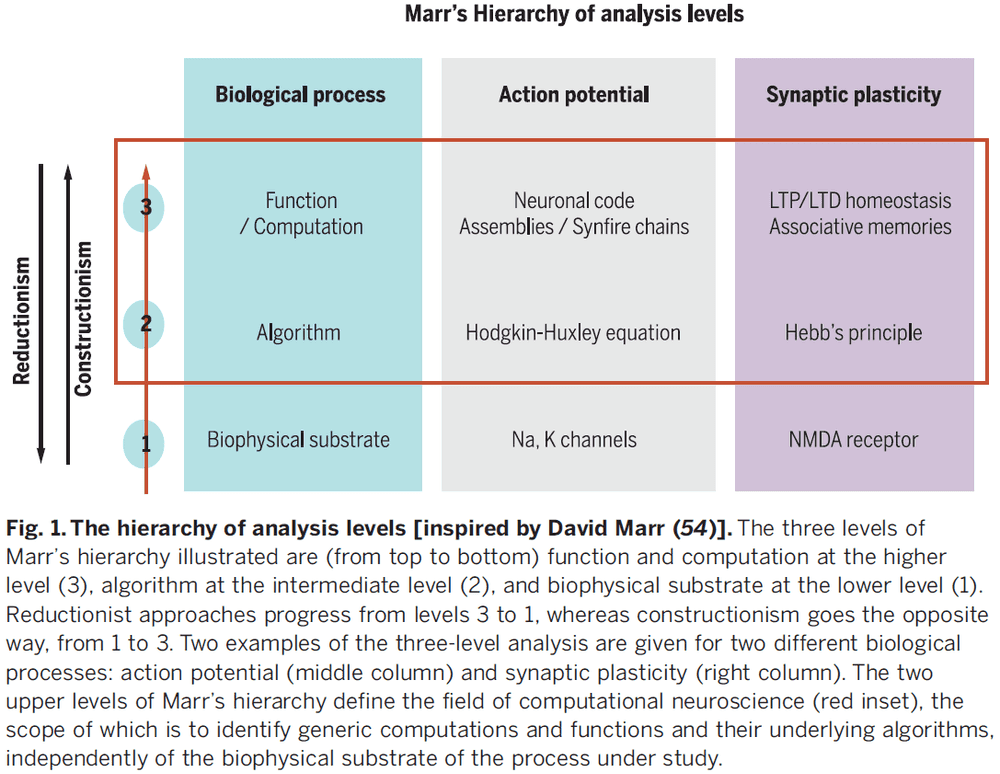

- The best-known roadmap for dealing with brain complexity is Marr’s three levels.

- Marr was convinced that a purely reductionist strategy was “genuinely dangerous”.

- Trying to understand the emergence of cognition from neuronal responses “is like trying to understand a bird’s flight by studying only feathers.”

- The critical point is that causal-mechanistic explanations are qualitatively different from understanding how a combination of component modules produces emergent behavior.

- Review of multiple realizability.

- The search for a unified theory remains at a rudimentary stage for the brain sciences.

- In the brain sciences, however, building massive database architectures without theoretical guidance may turn into a waste of time and money.

What constitutes the prefrontal cortex?

- Neuroscience started in the 1960s.

- One of its central aims is to describe how the nervous system enables and controls behavior.

- Paper reviews the arguments for and against using rodents as a prefrontal cortex model.

- The fundamental function of the prefrontal cortex could be to represent and produce new forms of goal-directed actions—actions that can be, for example, mental and internal, emotional or motor-related.

- Skimmed most of the paper due to disinterest.

Space and time in the brain

- Summarizes current neuroscience views on space and time, discusses whether the brain perceives or makes distance and duration, analyzes how assumed representations of distance and duration relate to each other, and considers the option that space and time are mental constructs.

- Representation of space in the brain

- Review of hemispatial neglect condition.

- Review of place and grid cells in the hippocampus and entorhinal cortex.

- Review of path integration.
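Path integration (dead reckoning) means keeping a running sum of self-motion so that the animal always knows its displacement from the start point. The sketch below is an illustration under simplifying assumptions: heading and distance are sensed without noise, the trip is given as hypothetical (heading, distance) steps, and nothing about how grid cells might implement the computation is modeled.

```python
import math


def integrate_path(steps):
    """Dead-reckon (x, y) displacement from (heading_radians, distance) self-motion steps."""
    x = y = 0.0
    for heading, distance in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y


def home_vector(steps):
    """Distance and heading that would bring the animal straight back to its start point."""
    x, y = integrate_path(steps)
    return math.hypot(x, y), math.atan2(-y, -x)


# A hypothetical outbound foraging trip: 3 m east, then 4 m north.
outbound = [(0.0, 3.0), (math.pi / 2, 4.0)]
dist, heading = home_vector(outbound)
print(f"home vector: {dist:.2f} m at {math.degrees(heading):.1f} degrees")
```

Only the accumulated vector is stored, not the route, which is why errors in sensed heading or speed accumulate over long trips.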

- Episodic memory: Mental travel in space and time

- Navigation and memory are deeply connected.

- This implies that most cortical networks have a dual use: environment-dependent and/or internally organized.

- E.g. Rats running in a real maze and on a treadmill both activate the same place cells, making it difficult to distinguish the sequences.

- Findings indicate that neuronal mechanisms associated with navigation and memory are similar: both establish order relationships. However, memory mechanisms are no longer linked to the outer world.

- The distance-duration relativity suggests that space and time correspond to the same brain computations.

- In the lab, we often find reliable correlations between neuronal activity in various brain regions and succession of events.

- We don’t directly sense time.

What is consciousness, and could machines have it?

- The word “consciousness” conflates two different types of information-processing computations in the brain.

- The selection of information for global availability (C1).

- The self-monitoring of those computations (C2).

- C1 is synonymous with “having the information in mind” and having that information available for further processing.

- C2 refers to the reflexive, self-referential relationship we have where our own cognitive system is able to monitor its own processing and obtain information about itself.

- E.g. Body position, whether we know or perceive something, whether we just made an error.

- Another term to describe C2 is introspection or meta-cognition; the ability to conceive and make use of internal representations of one’s own knowledge and abilities.

- Paper proposes that C1 and C2 are distinct computations, but this doesn’t mean they don’t share physical substrates.

- Argument is supported by empirical and conceptual evidence that the two may come apart because there can be C1 without C2 and C2 without C1.

- E.g. When reportable processing isn’t accompanied by accurate metacognition, and when a self-monitoring operation unfolds without being consciously reportable.

- Many computations involve neither C1 nor C2; these are called unconscious, or C0 for short.

- Cognitive neuroscience confirms that complex computations such as face/speech recognition, chess playing, and meaning extraction can all occur unconsciously in the human brain, under conditions that yield neither global reportability nor self-monitoring.

- Unconscious processing (C0): Where most of our intelligence lies.

- “We can’t be conscious of what we’re not conscious of.”

- We’re blind to our unconscious processes so we tend to underestimate them.

- E.g. Priming and subliminal stimuli.

- The human brain also achieves view invariance and meaning extraction unconsciously.

- C1: Global availability of relevant information

- The organization of the brain into computationally specialized subsystems is efficient, but also raises the problem of how to integrate these subsystems.

- Integrating all of the available evidence to converge toward a single decision is a computational requirement that must be faced by any animal or AI system.

- E.g. Thirsty elephants manage to determine the location of the nearest water hole and move straight to it. Such decision-making requires:

- Efficiently pooling all available sources of information.

- Considering the available options and selecting the best one.

- Sticking to this choice over time.

- Coordinating all internal and external processes toward the achievement of that goal.

- Consciousness as access to an internal global workspace.

- We call “conscious” the representation that wins the competition for access to this mental arena and it gets selected for global sharing and decision-making.

- Consciousness is therefore manifested by the temporary dominance of a thought or train of thoughts over mental processes, so that it can guide a broad variety of behaviors.

- The difference between attention and C1 is that attention involves some non-conscious mechanisms, such as:

- Top-down attention can be oriented toward a stimulus, amplifying its processing, and yet fail to bring it to consciousness.

- Bottom-up attention can be attracted by a flash, even if this stimulus remains unconscious.

- What we call attention is a hierarchical system of sieves that operate unconsciously.

- Evidence for all-or-none-selection in a capacity-limited system.

- The brain has a conscious bottleneck and can only consciously access a single item at a time.

- The serial operation of consciousness is attested by phenomena such as the attentional blink and the psychological refractory period (when conscious access to a first item prevents or delays the perception of a second competing item).

- Evidence indicates that the conscious bottleneck is implemented by a network of neurons that is distributed throughout the cortex, but with a stronger emphasis on high-level associative areas.

- Ignition of this network only occurs for the conscious percept.

- Nonconscious stimuli may reach deep into cortical networks, but the activity they evoke is small and short-lived, while conscious stimuli evoke more stable activity.

- Such meta-stability seems to be necessary for the nervous system to integrate information from a variety of modules and to broadcast that information.

- C1 consciousness is an elementary property that’s present in infants and animals.

- The prefrontal cortex appears to act as a central information sharing device and serial bottleneck in both humans and nonhuman primates.

- C2: Self-monitoring

- While C1 reflects the capacity to access external information, C2 is characterized by the ability to reflexively represent one’s self.

- When making a decision, humans feel more or less confident about their choice.

- Confidence can be defined as a sense of the probability that a decision or computation is correct.

- Almost anytime the brain perceives or decides, it also estimates its degree of confidence.

- Confidence also applies to incoming information and we can judge it against our past knowledge.

- AI currently lacks meta-knowledge of the reliability and limits of what has been learned.

- Error detection provides a clear example of self-monitoring where we know we are wrong before we even receive feedback.

- How can the brain make a mistake and detect it?

- Two possibilities

- The accumulation of sensory evidence continues after a decision is made and an error is inferred whenever this new evidence points in the opposite direction.

- Two parallel circuits operate on the same sensory data and signal an error whenever their conclusions diverge. This is more compatible with our remarkable speed of error detection.
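The first possibility (evidence keeps accumulating after the decision) can be sketched as a simple accumulator. This is an illustration only, assuming discrete evidence samples, a fixed decision threshold, and a fixed post-decision window; the function and parameter names are hypothetical, and the second (parallel-circuit) hypothesis is not modeled.

```python
from typing import List, Tuple


def decide_then_monitor(evidence: List[float], threshold: float, post_samples: int) -> Tuple[int, bool]:
    """Return (choice, error_flag) for one trial.

    Evidence samples are summed until |total| reaches `threshold`; the sign of the total
    gives the choice (+1 or -1). The next `post_samples` samples are then summed, and an
    error is flagged if this post-decision evidence points the opposite way.
    """
    total = 0.0
    for i, sample in enumerate(evidence):
        total += sample
        if abs(total) >= threshold:
            choice = 1 if total > 0 else -1
            post = sum(evidence[i + 1 : i + 1 + post_samples])
            return choice, post * choice < 0
    raise ValueError("decision threshold never reached")


# Hypothetical trials: early noise pushes the first trial to the wrong answer.
print(decide_then_monitor([-0.6, -0.5, 0.8, 0.9, 0.7], threshold=1.0, post_samples=3))
print(decide_then_monitor([0.5, 0.6, 0.4, 0.7], threshold=1.0, post_samples=3))
```

Because this account must wait for new evidence after the decision, it predicts somewhat slower error detection than two parallel circuits comparing their conclusions.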

- Humans don’t just know things about the world, they also know that they know or that they don’t know.

- Meta-memory: humans can report feelings of knowing, confidence, and doubts on their memories.

- Meta-memory is thought to involve a second-order system that monitors internal signals to regulate behavior.

- It’s associated with prefrontal structures whose pharmacological inactivation leads to metacognitive impairment while sparing memory performance itself.

- Meta-memory is crucial to learning as it allows learners to develop strategies to improve memory encoding and retrieval.

- The human brain must also distinguish between self-generated versus externally-driven representations.

- E.g. Perceptions versus imaginations and memories.

- Infants also show evidence of C2, as they can communicate their own uncertainty to other agents (e.g. through hesitation).

- Dissociations between C1 and C2

- C1 and C2 are largely orthogonal (double dissociation) and complementary dimensions of consciousness.

- Self-monitoring can exist for unreported stimuli (C2 without C1).

- E.g. Automatic typing. Subjects slow down after a typing mistake even when they fail to consciously notice the error.

- Consciously reportable contents sometimes fail to be accompanied by an adequate sense of confidence (C1 without C2).

- E.g. When retrieving a memory, sometimes it comes without any accurate evaluation of its confidence, leading to false memories.

- C1 and C2 can also work together but this requires a single common currency for confidence across different modules.

- Endowing machines with C1 and C2

- Endowing machines with global information availability (C1) would allow different modules to share information and collaborate to solve problems.

- E.g. Letting the rest of the car know that it’s low on gas could mean reducing gas consumption and speed.

- Older “blackboard” systems tried to model this aspect of cognition.
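The car example can be sketched as a toy global workspace. This is a hypothetical design, not taken from the paper or from any specific blackboard architecture: modules post candidate messages with a salience score, a single winner is selected all-or-none, and that winner is broadcast to every subscribed module. All class, module, and message names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Message:
    source: str
    content: str
    salience: float


@dataclass
class Workspace:
    """Toy global workspace: modules post candidates, one winner is broadcast to all."""
    subscribers: Dict[str, Callable[[Message], Optional[str]]] = field(default_factory=dict)
    candidates: List[Message] = field(default_factory=list)

    def post(self, msg: Message) -> None:
        self.candidates.append(msg)

    def cycle(self) -> Dict[str, str]:
        """Select the most salient candidate and collect each module's reaction to it."""
        if not self.candidates:
            return {}
        winner = max(self.candidates, key=lambda m: m.salience)
        self.candidates.clear()  # losing candidates never reach the other modules
        reactions = {}
        for name, handler in self.subscribers.items():
            reaction = handler(winner)
            if reaction is not None:
                reactions[name] = reaction
        return reactions


ws = Workspace()
ws.subscribers["cruise_control"] = lambda m: "reduce speed" if m.content == "fuel low" else None
ws.subscribers["navigation"] = lambda m: "route to nearest station" if m.content == "fuel low" else None
ws.post(Message("fuel_sensor", "fuel low", salience=0.9))
ws.post(Message("radio", "new song", salience=0.2))
responses = ws.cycle()
print(responses)
```

Selecting one winner per cycle mirrors the serial, capacity-limited bottleneck described for C1; nothing here models C2 self-monitoring.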

- To make optimal use of global information, a machine should also have a database of its own capacities and limits (C2) such as a self-monitoring system.

- An important element of C2 that’s received little attention is reality monitoring.

- Paper argues that a machine endowed with C1 and C2 would behave as though it were conscious.

- E.g. It would know that it’s seeing something, would express confidence in it, would report it to others, could suffer hallucinations when its monitoring mechanisms break down, and may even experience the same perceptual illusions that we experience.

- Some readers may be unsatisfied with the way the paper “over-intellectualizes” consciousness and leaves aside the experiential component of consciousness.

- Does subjective experience escape a computational definition?

- In humans, the loss of both C1 and C2 covaries with a loss of subjective experience.

- E.g. Damage to the primary visual cortex may lead to a condition called “blindsight” where patients report being blind in the affected visual field. Patients can localize visual stimuli in their blind field but can’t report them (C1), nor can they assess their likelihood of success (C2) as they believe they are merely “guessing.”

The unsolved problems of neuroscience

- Problems that are solved, or soon will be

- How do single neurons compute?

- What is the connectome of a small nervous system, like that of C. elegans (300 neurons)?

- How can we image a live brain of 100,000 neurons at cellular and millisecond resolution?

- How does sensory transduction work?

- Problems that we should be able to solve in the next 50 years

- How do circuits of neurons compute?

- What is the complete connectome of the mouse brain (70,000,000 neurons)?

- How can we image a live mouse brain at cellular and millisecond resolution?

- What causes psychiatric and neurological illness?

- How do learning and memory work?

- Why do we sleep and dream?

- How do we make decisions?

- How does the brain represent abstract ideas?

- Problems that we should be able to solve, but who knows when

- How does the mouse brain compute?

- What is the complete connectome of the human brain (80,000,000,000 neurons)?

- How can we image a live human brain at cellular and millisecond resolution?

- How could we cure psychiatric and neurological diseases?

- How could we make everybody’s brain function best?

- Problems we may never solve

- How does the human brain compute?

- How can cognition be so flexible and generative?

- How and why does conscious experience arise?

- Meta-questions

- What counts as an explanation of how the brain works? (and which disciplines would be needed to provide it?)

- How could we build a brain? (how do evolution and development do it?)

- What are the different ways of understanding the brain? (what is function, algorithm, implementation?)

What Is It Like to Be a Bat?

- Paper attempts to explain why we have no idea of what an explanation of the physical nature of a mental phenomenon would be.

- Most reductionist theories don’t try to explain either consciousness or conscious experience.

- No matter how the form may vary, the fact that an organism has conscious experience means that there is something it is like to be that organism.

- An organism has conscious mental states if and only if there is something it is like to be that organism, something it is like for the organism.

- Without some idea of what subjective experience is, we can’t know what is required of a physicalist theory of consciousness.

- To defend physicalism, the phenomenological features must be given a physical account.

- But when we examine subjective experience, that result seems impossible.

- It seems impossible because every subjective phenomenon is connected with a single point of view, and it seems inevitable that an objective, physical theory will abandon that point of view.

- Thought experiment separating the subjective and objective (bat thought experiment)

- Assume that bats have experience.

- We choose bats and not wasps because if one travels too far down the phylogenetic tree, people gradually shed their faith that there is experience there at all.

- Bats have a range of behaviors and sensory organs so different from ours that the problem is exceptionally vivid.

- Anyone who has spent some time with bats knows what it’s like to encounter a fundamentally alien form of life.

- The core of the belief that bats have experience is that there is something that it’s like to be a bat.

- We know that most bats perceive the world primarily by sonar/echolocation.

- Bat brains are designed to correlate the outgoing impulses with the incoming echoes to acquire information about distance, size, shape, motion, and texture.

- But bat sonar, though a form of perception, isn’t similar to any sense that we possess, and we can’t imagine what that experience of sonar is like.

- This makes it difficult to conceive of what it’s like to be a bat.

- Our own experience provides the basic material for our imagination, whose range is therefore limited.

- We can imagine our simulated version of a bat, but this only tells us what it would be like for us to behave as a bat behaves.

- But this isn’t the question.

- We want to know what it’s like for a bat to be a bat, not what it’s like for humans to be a bat.

- If we try, we’re restricted to the resources of our own mind and can’t imagine additions or subtractions to our present experience.

- We can describe the objective aspects of bat experience such as how echolocation works, how flight works, and their version of pain, hunger, lust, and tiredness.

- But we believe that these experiences also have, in each case, a specific subjective character, which is beyond our ability to conceive.

- E.g. It feels like something to perform echolocation.

- We can even ignore bats and only consider humans.

- E.g. The subjective experience of a deaf or blind person isn’t accessible to us, nor the other way around.

- Such an understanding, which would go beyond description in language to direct experience, may be permanently denied to us by the limits of our nature.

- From this, one might also believe that there are facts which couldn’t ever be represented or comprehended by human beings because our structure doesn’t allow us to operate with concepts of the requisite type.

- E.g. We can’t see ultraviolet light because the eye doesn’t capture ultraviolet light.

- The bat thought experiment suggests that there are facts that aren’t expressible in the human language.

- We can recognize the existence of such facts without being able to state or comprehend them.

- E.g. We know that people see red but we can’t state what it’s like to be seeing red.

- There’s a sense in which phenomenological facts can be objective: when the perceiver is sufficiently similar to the describer.

- E.g. Humans mostly have the same range of color vision, so we can categorize colors into red, green, blue, etc., and these categories are objective.

- However, the more different from oneself the other perceiver is, the less success one can expect.

- If the facts of experience, what it’s like for the experiencing organism, are only accessible from one point of view, then it’s a mystery how the true character of experiences could be revealed in the physical operation of that organism.

- The physical operation of that organism, biology, is a domain of objective facts, the kind that can only be observed and understood from many points of view and by individuals with differing perceptual systems.

- There are no obstacles in describing an experience in neurophysiology.

- It may be more accurate to think of objectivity as a direction in which understanding can travel.

- E.g. The objectivity of “red” is less than the objectivity of “atom” because atom is further removed from a human viewpoint.

- It’s difficult to understand what could be meant by an objective description of an experience, since that removes the viewer from the experience.

- After all, what would be left of what it was like to be a bat if one removed the viewpoint of the bat?

- We appear to face a problem with psychophysical reduction.

- In other areas of science, the reduction of phenomena is a move in the direction of greater objectivity; towards a more accurate view of the real nature of things.

- This is done by reducing our dependence on individual points of view toward the object of investigation.

- We describe the phenomenon not in terms of its impression on our senses, but in terms of its more general effects and of properties detectable by means other than the human senses.

- E.g. To describe the phenomenon of “matter” means to go beyond our human senses into the atomic or sub-atomic realms of reality.

- Experience itself, however, doesn’t seem to fit this pattern.

- If subjective experience is only fully comprehensible from one point of view, then any shift to greater objectivity, meaning less attachment to a specific viewpoint, doesn’t take us nearer to the real nature of consciousness, it just takes us further away.

- Different species may both understand the same physical events in objective terms, but this doesn’t require them to understand the subjective experience of those events to the senses of another species.

- The reduction can only succeed if the species-specific viewpoint is left out from what is to be reduced.

- If we desire that a physical theory of mind must also account for the subjective character of experience, we must admit that we don’t know how to do so.

- Indeed, trying to objectify the subjective appears contradictory.

- The state of physicalism is similar to that of the hypothesis “matter is energy” if it had been uttered by a pre-Socratic philosopher: we don’t yet have any idea of how the claim that “what it is like to be a bat” is a physical process might be true.

How Baseball Outfielders Determine Where to Run to Catch Fly Balls

- When a baseball is hit, the only usable information appears to be the optical trajectory of the ball: the changing position of the ball image relative to the background.

- Outfielder: a defensive player positioned in the outfield, far from home plate, who is responsible for catching balls hit deep into the field.

- In theory, outfielders could derive the destination of the ball from an assumed parabolic trajectory, but research shows that observers are very poor at using such a purely computational approach.

- Also, air resistance, ball spin, and wind can cause trajectories to deviate from a parabolic curve.

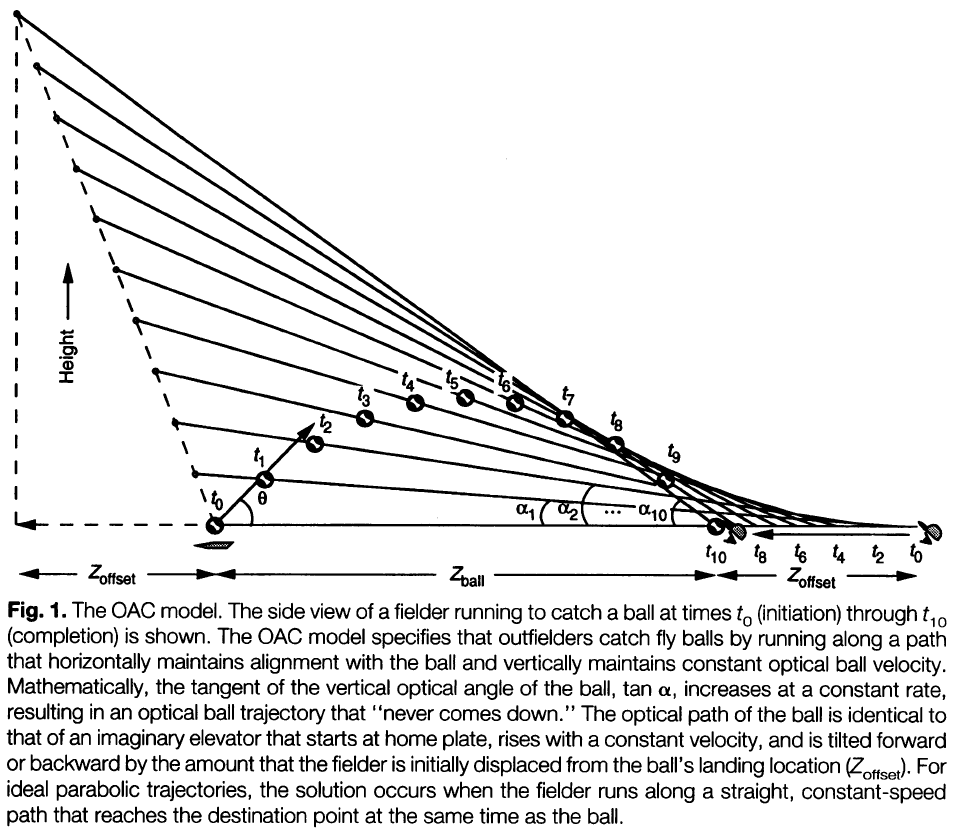

- One hypothesis is that outfielders run along a path that simultaneously maintains horizontal alignment with the ball and keeps the tangent of the ball’s vertical optical angle increasing at a constant rate.

- In other words, the fielder can arrive at the correct destination by selecting a running path that keeps optical ball speed constant, achieving optical acceleration cancellation (OAC).

- OAC works even if the trajectory isn’t parabolic because it couples the fielder’s motion with that of the ball.
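The optical signal that OAC relies on can be checked numerically. This sketch rests on assumptions that are not from the paper: no air resistance, a stationary fielder, eye height ignored (the ball is launched and caught at the same height), and illustrative launch speeds. For a drag-free parabola seen from the landing point, tan α grows linearly with time (it works out to g·t / (2·vx)), so its optical acceleration is zero; a fielder standing too far in sees positive optical acceleration, and one standing too far back sees negative optical acceleration.

```python
# Assumptions for illustration only: drag-free flight, stationary fielder,
# ball launched from x = 0 and caught at launch height.
G = 9.81  # gravitational acceleration in m/s^2


def optical_tan(t, fielder_x, vx, vy, g=G):
    """tan(alpha): tangent of the ball's elevation angle seen by a fielder at fielder_x."""
    x = vx * t                    # horizontal ball position
    y = vy * t - 0.5 * g * t * t  # ball height
    return y / (fielder_x - x)


def optical_acceleration(t, fielder_x, vx, vy, h=1e-3):
    """Central finite-difference estimate of d^2 tan(alpha) / dt^2 at time t."""
    def f(s):
        return optical_tan(s, fielder_x, vx, vy)
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)


vx, vy = 20.0, 20.0
landing_x = vx * (2.0 * vy / G)  # range of the drag-free parabola, roughly 81.5 m

for label, x in [("too far in", 60.0), ("at landing point", landing_x), ("too far back", 100.0)]:
    acc = optical_acceleration(1.5, x, vx, vy)
    print(f"{label:>16}: optical acceleration at t = 1.5 s is {acc:+.4f}")
```

Under OAC, the fielder backs up when the optical acceleration is positive (the ball will carry overhead) and runs in when it is negative, nulling it toward zero without ever computing the landing point.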

- The OAC model is elegant but flawed because OAC solutions require the precise ability to discriminate accelerations, and evidence shows that people are poor at such tasks.

- Another issue is that OAC was tested on balls hit directly towards the outfielder and without sideways motion.

- However, outfielders consider balls hit directly at them to be harder to catch, not easier.

- From this, it seems that outfielders use their vantage outside the plane of the ball trajectory in a way that simplifies, rather than complicates, determination of ball destination.

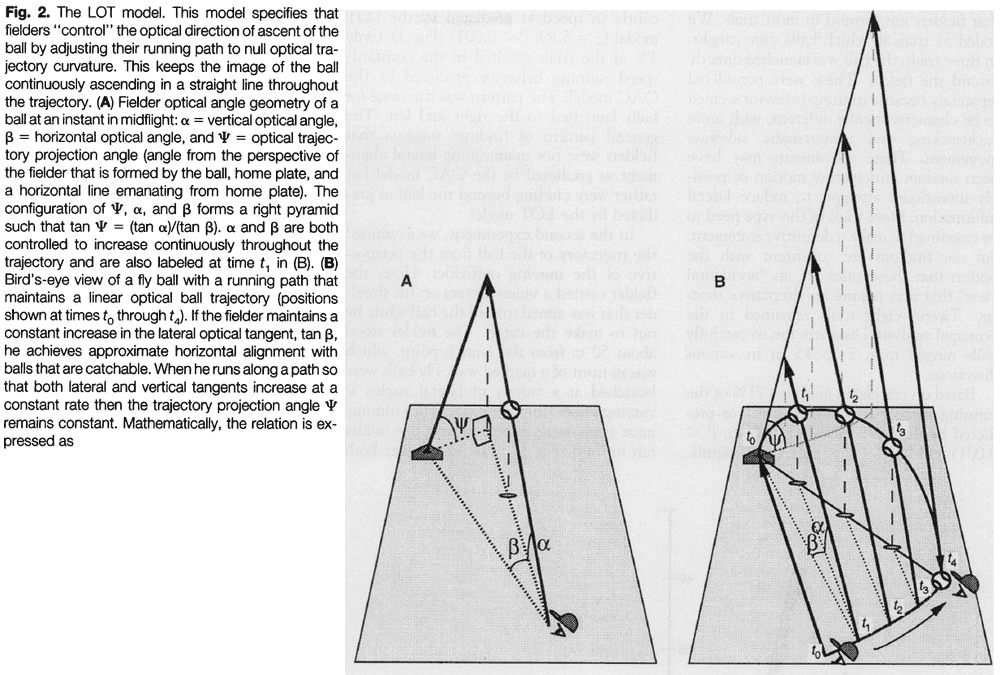

- An alternative explanation is the linear optical trajectory (LOT).

- LOT proposes that the outfielder selects a running path that maintains linear optical trajectory for the ball relative to the home plate and background.

- The solution is based on maintaining a balance between vertical and horizontal optical angular change and requires no knowledge of distance to the ball or home plate.

- In LOT, the outfielder doesn’t allow the ball to curve optically toward the ground; doing so requires them to keep moving more directly under the ball, which guarantees that they travel to the correct destination to catch it.

- The LOT strategy discerns optical acceleration as optical curvature, a feature that observers are very good at discriminating.

- Like the OAC strategy, maintaining a LOT is an error-nulling tactic that couples fielder motion with that of the ball.

- In summary

- OAC predicts that fielders select a running path that is straight with constant speed, resulting in a curved optical ball trajectory.

- LOT predicts that fielders select a running path that is curved with -shaped speed, resulting in a linear optical ball trajectory.

- In the paper’s experiments, balls launched directly toward the fielder are a special case that requires a strategy other than OAC or LOT.

- The results support the premise that outfielders use spatial, rather than just temporal, cues to initially guide them toward the ball destination point.

- Other cases where the optical angle to a target is maintained

- Airplane pilots use spatial error-nulling to anticipate and maintain constant angular position relative to a target.

- Predators and organisms pursuing mates commonly adjust their position to control the relative angle of motion between the pair.

- Findings suggest that baseball players use a similar spatial strategy.

- Once an outfielder establishes a LOT solution, they know they control the situation and that they will catch the ball, but they don’t know when.

- This explains why outfielders run into walls chasing uncatchable balls and why they don’t rush ahead to the ball’s destination point, choosing instead to catch the ball while running.

- LOT also explains why balls hit to the side are easier to catch as fielders can use their strong ability to discriminate curvature rather than resorting to their weak ability to discriminate acceleration.

- In short, the LOT strategy provides a simple and effective way to pursue and catch a target traveling with approximate parabolic motion in 3D space.

The newly sighted fail to match seen with felt

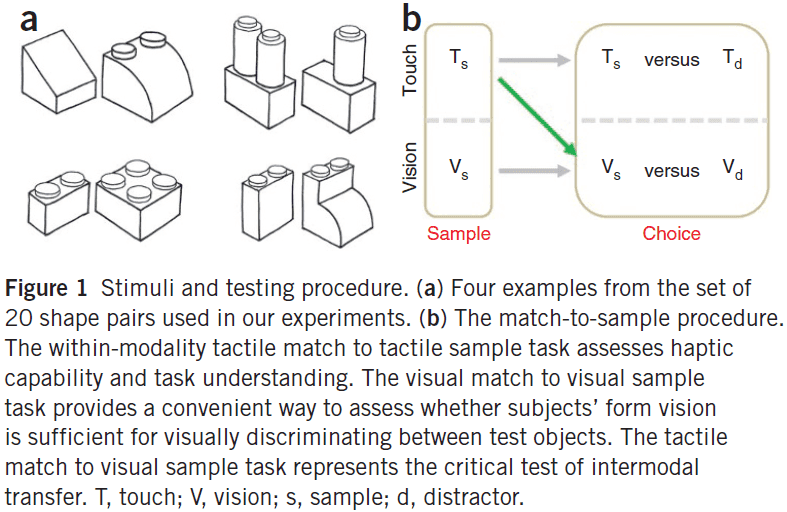

- Molyneux problem: if a person born blind was now able to see, would they recognize an object only previously known by touch?

- A positive answer, where a congenitally blind person could visually recognize an object previously known only by touch, would suggest that there’s an a priori (built-in) and amodal (not dependent on sense) conception of space common to both senses.

- A negative answer, where there’s no match between sight and touch, would suggest that pairing visual and tactile information is experience-driven, learned through the association between the senses.

- Critical conditions for testing the Molyneux problem

- Subjects must be congenitally blind, yet treatable and mature enough to be tested.

- Both senses, touch and vision, must be independently functional after treatment.

- An optically restored eye doesn’t imply the ability to make full use of the visual signals.

- In other words, just because the eye can see doesn’t mean the brain can see.

- There were five subjects in this study and they were blind from birth due to cataracts or corneal opacities.

- Prior to treatment, subjects were only able to discriminate between light and dark and none of them were able to perform visual discrimination.

- Four subjects underwent cataract removal surgery and intraocular lens implantation, while the remaining subject underwent a corneal transplant to restore their vision.

- The object/stimulus set comprised 20 pairs of simple, large 3D forms to sidestep any acuity limitations.

- E.g. Big Legos.

- Three discrimination test cases

- Touch-to-touch

- Vision-to-vision

- Touch-to-vision

- By day two of recovery from surgery, all subjects performed near-ceiling for touch-to-touch and vision-to-vision discriminations, indicating that stimuli were easily identified in both modalities.

- In contrast, touch-to-vision performance was near chance level right after surgery.

- Three of the five subjects were later tested and performance on the touch-to-vision case improved significantly in as little as five days, given only natural real-world visual experience.

- The results suggest that the answer to the Molyneux problem is likely negative.

- Newly sighted people didn’t exhibit an immediate transfer of their tactile shape knowledge to the visual domain.

- The quickness of pairing visual and tactile information suggests that the neuronal substrates responsible for cross-modal interaction might already be in place before they manifest behaviorally.

- Even in adulthood, studies using individuals with late-onset vision have suggested that the ability to form representations of new features is retained.

The free-energy principle: a unified brain theory?

- One key theme noticed in many scientific theories is the idea of optimization.

- If we look at what’s being optimized, we find the same quantity emerging, namely value (expected reward, expected utility) or its complement, surprise (prediction error, expected cost).

- This is the quantity that’s optimized under the free-energy principle.

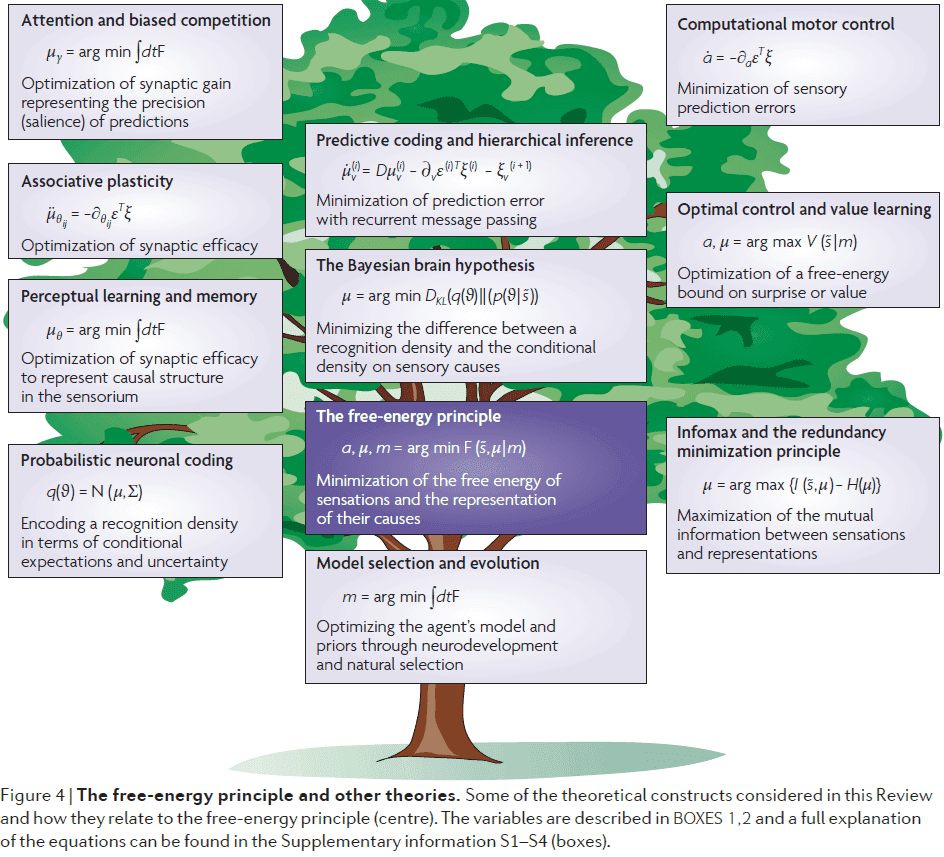

- Paper attempts to place some key theories within the free-energy framework in hopes of finding common themes.

- Free energy: an information-theory measure that bounds or limits the surprise on sampling some data, given a generative model.

- Free-energy principle: any self-organizing system that’s at equilibrium with its environment must minimize its free energy.

- In other words, it’s a mathematical description of how adaptive systems resist a natural tendency to disorder.

- One defining characteristic of biological systems is that they maintain their states in the face of a constantly changing environment.

- From the brain’s perspective, the environment includes both the external and internal milieu.

- Homeostasis: the process by which a system regulates its internal environment in a changing external environment.

- Homeostasis means that the number of states that an organism can be in is limited, because it must maintain a constant internal state.

- This also means that a homeostatic system must have low entropy, meaning low uncertainty about which state it will be in.

- Biological agents must therefore minimize the long-term average of surprise to ensure that their sensory entropy remains low.

- To do so, agents both perform actions that minimize prediction errors and use perception to optimize those predictions.

- In the long-term, the goal is to maintain states within physiological bounds (homeostasis).

- In the short-term, the goal is to avoid surprise (reduce entropy).

- Biological agents must avoid surprises to ensure that their states remain within physiological bounds.
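The link between surprise and entropy can be made concrete: entropy is the expected surprise, and for an agent whose visited states follow its distribution, the long-run time average of surprise equals that entropy. The sketch below uses made-up, binned body temperatures and a trajectory constructed so that its state frequencies match the distribution exactly; the names and numbers are illustrative assumptions.

```python
import math


def surprise(state, p):
    """Surprise (self-information) of a state under distribution p, in nats."""
    return -math.log(p[state])


def entropy(p):
    """Shannon entropy in nats: the expected surprise under p."""
    return sum(pi * surprise(s, p) for s, pi in p.items() if pi > 0)


def average_surprise(trajectory, p):
    """Long-run (time) average of surprise along a sequence of visited states."""
    return sum(surprise(s, p) for s in trajectory) / len(trajectory)


# Hypothetical binned body temperatures (deg C) and how often each agent occupies them.
homeostatic = {36.5: 0.1, 37.0: 0.8, 37.5: 0.1}
unregulated = {t: 1 / 7 for t in (34.0, 35.0, 36.0, 37.0, 38.0, 39.0, 40.0)}

# A 100-step trajectory whose state frequencies exactly match the homeostatic distribution.
trajectory = [36.5] * 10 + [37.0] * 80 + [37.5] * 10

print(f"entropy, regulated agent:   {entropy(homeostatic):.3f} nats")
print(f"entropy, unregulated agent: {entropy(unregulated):.3f} nats")
print(f"time-averaged surprise:     {average_surprise(trajectory, homeostatic):.3f} nats")
```

Keeping states within a narrow band is therefore the same thing as keeping average surprise, and hence entropy, low.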

- But how does a system carry out this goal?

- Free energy is the answer. Since free energy is an upper bound on surprise, minimizing free energy means to implicitly minimize surprise.
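The bound can be checked on a toy discrete model. This is a sketch with a made-up two-cause generative model (the probabilities and names are illustrative assumptions): for any recognition density q over causes, free energy equals surprise plus a non-negative KL divergence, so it never falls below surprise, and it touches surprise exactly when q equals the true posterior.

```python
import math

# Hypothetical toy generative model: one binary hidden cause, one observed datum.
prior = {"rain": 0.3, "no_rain": 0.7}       # p(cause)
likelihood = {"rain": 0.9, "no_rain": 0.2}  # p(wet grass | cause)

evidence = sum(prior[c] * likelihood[c] for c in prior)             # p(wet grass)
posterior = {c: prior[c] * likelihood[c] / evidence for c in prior}  # p(cause | wet grass)
surprise = -math.log(evidence)                                       # -ln p(wet grass)


def kl(q, p):
    """Kullback-Leibler divergence KL[q || p] in nats."""
    return sum(q[c] * math.log(q[c] / p[c]) for c in q if q[c] > 0)


def free_energy(q):
    """Variational free energy as energy minus entropy: E_q[-ln p(s, c)] - H[q]."""
    energy = sum(q[c] * -math.log(prior[c] * likelihood[c]) for c in q if q[c] > 0)
    entropy = -sum(q[c] * math.log(q[c]) for c in q if q[c] > 0)
    return energy - entropy


for q_rain in (0.1, 0.5, posterior["rain"], 0.9):
    q = {"rain": q_rain, "no_rain": 1.0 - q_rain}
    print(f"q(rain) = {q_rain:.3f}: F = {free_energy(q):.4f} >= surprise = {surprise:.4f}")
```

An agent cannot evaluate surprise directly (it would need p(s) summed over all causes), but it can evaluate and reduce F, which is why minimizing F is a tractable stand-in.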

- Two actions that agents can do to suppress free energy

- Change sensory input by acting on the world.

- Change their recognition density by changing their internal state.

- Three formulations of free energy

- Energy minus entropy

- Surprise plus divergence

- Complexity minus accuracy
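Written out, the three formulations are algebraically the same quantity. The notation here is an assumption for illustration, simplified from the paper: sensory data $\tilde{s}$, hidden causes $\vartheta$, recognition density $q(\vartheta)$, and the dependence on action and internal states is dropped.

$$
\begin{aligned}
F &= \underbrace{\mathbb{E}_{q}\!\left[-\ln p(\tilde{s},\vartheta)\right]}_{\text{energy}} - \underbrace{\mathbb{H}\!\left[q(\vartheta)\right]}_{\text{entropy}} \\
  &= \underbrace{-\ln p(\tilde{s})}_{\text{surprise}} + \underbrace{D_{\mathrm{KL}}\!\left[q(\vartheta)\,\|\,p(\vartheta \mid \tilde{s})\right]}_{\text{divergence}} \\
  &= \underbrace{D_{\mathrm{KL}}\!\left[q(\vartheta)\,\|\,p(\vartheta)\right]}_{\text{complexity}} - \underbrace{\mathbb{E}_{q}\!\left[\ln p(\tilde{s} \mid \vartheta)\right]}_{\text{accuracy}}
\end{aligned}
$$

Because the divergence term in the second line is never negative, $F \geq -\ln p(\tilde{s})$, which is the upper bound on surprise. The third line shows the trade-off perception faces: explain the data accurately without straying too far from prior beliefs.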

- Next, we consider the implications of the free-energy principle for some key theories about the brain.

- Bayesian brain hypothesis

- Views the brain as an inference machine that actively predicts and explains its sensations.

- A probabilistic model generates predictions that are tested against sensory input to update beliefs about their causes.

- Perception becomes the process of inverting the likelihood model (which maps causes to sensory inputs) to infer the causes of a given sensory input. This is the Bayesian part.

- Minimizing free energy corresponds to explaining away prediction errors, also known as predictive coding.

- The free-energy principle entails the Bayesian brain hypothesis and can be implemented by the many schemes in this field.
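A one-dimensional version of predictive coding shows how minimizing free energy explains away prediction errors. This is a deliberately minimal sketch, not the paper's hierarchical scheme: it assumes Gaussian noise, an identity generative mapping g(μ) = μ, and hypothetical parameter names. The estimate μ descends the gradient of a precision-weighted sum of squared prediction errors and settles on the Gaussian posterior mean (π_s·s + π_p·m) / (π_s + π_p).

```python
def infer_cause(s, prior_mean, sensory_precision, prior_precision, lr=0.05, steps=2000):
    """Infer a 1-D hidden cause mu by gradient descent on a Gaussian free energy.

    Assumes an identity generative mapping g(mu) = mu, so (up to constants)
    F(mu) = 0.5 * pi_s * (s - mu)**2 + 0.5 * pi_p * (mu - prior_mean)**2.
    lr * (pi_s + pi_p) must stay below 2 for the iteration to converge.
    """
    mu = prior_mean  # start from the prior expectation
    for _ in range(steps):
        sensory_error = s - mu         # bottom-up prediction error
        prior_error = mu - prior_mean  # deviation from the prior prediction
        # Step down the free-energy gradient: precision-weighted errors drive the update.
        mu += lr * (sensory_precision * sensory_error - prior_precision * prior_error)
    return mu


# Same input and prior; only the precision (gain) on the sensory prediction error differs.
low_gain = infer_cause(s=2.0, prior_mean=0.0, sensory_precision=1.0, prior_precision=1.0)
high_gain = infer_cause(s=2.0, prior_mean=0.0, sensory_precision=4.0, prior_precision=1.0)
print(f"low sensory precision:  mu = {low_gain:.3f}")
print(f"high sensory precision: mu = {high_gain:.3f}")
```

Raising the sensory precision pulls the inferred cause toward the data, which is the sense in which precision acts as a gain on prediction errors.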

- Principle of efficient coding

- Views the brain as optimizing the mutual information (predictability) between the sensorium and its internal representation.

- The infomax principle says that neuronal activity should encode sensory information in an efficient and cheap manner.

- The infomax principle is a special case of the free-energy principle.
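The quantity infomax maximizes can be computed directly for a toy code. The sketch below assumes a hypothetical single neuron that either spikes or stays silent in response to a dark or bright stimulus, and compares how many bits a reliable versus a noisy response carries about the stimulus; metabolic cost (the "cheap" part of the principle) is not modeled.

```python
import math


def mutual_information(p_s, channel):
    """I(S;R) in bits, given stimulus probabilities p_s[s] and channel[s][r] = p(r | s)."""
    p_r = {}
    for s, ps in p_s.items():
        for r, prs in channel[s].items():
            p_r[r] = p_r.get(r, 0.0) + ps * prs
    mi = 0.0
    for s, ps in p_s.items():
        for r, prs in channel[s].items():
            if prs > 0:
                mi += ps * prs * math.log2(prs / p_r[r])
    return mi


p_s = {"bright": 0.5, "dark": 0.5}
reliable = {"bright": {"spike": 0.9, "silent": 0.1}, "dark": {"spike": 0.1, "silent": 0.9}}
noisy = {"bright": {"spike": 0.6, "silent": 0.4}, "dark": {"spike": 0.4, "silent": 0.6}}
perfect = {"bright": {"spike": 1.0, "silent": 0.0}, "dark": {"spike": 0.0, "silent": 1.0}}

for name, code in [("perfect", perfect), ("reliable", reliable), ("noisy", noisy)]:
    print(f"{name:>8} neuron: I(S;R) = {mutual_information(p_s, code):.3f} bits")
```

A code that maximizes this quantity lets the internal representation predict the sensorium as well as possible, which is the link the paper draws to free-energy minimization.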

- Cell assembly and correlation theory

- Hebbian plasticity, or Hebb’s rule: neurons that fire together wire together.

- Cell assembly theory: connections between neurons are formed depending on correlated pre- and post-synaptic activity.

- This enables the brain to distil statistical regularities from the sensorium into neurons.

- Gradient descent on free energy (changing connections to reduce free energy) is identical to Hebbian plasticity.

- The formation of cell assemblies reflects the encoding of causal regularities.
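A correlation-based version of Hebb's rule can be sketched as follows. The sketch assumes a covariance form of the rule, in which each weight changes in proportion to the product of pre- and post-synaptic deviations from their mean rate of 0.5. A hypothetical hidden cause drives two inputs and the postsynaptic cell, while a third input fires independently. Only the synapses whose activity correlates with the postsynaptic cell grow, so the weights end up encoding the statistical regularity:

```python
import random

random.seed(0)          # fixed seed so the run is reproducible
eta = 0.01              # hypothetical learning rate
mean_rate = 0.5         # each unit fires with probability 0.5, so its mean rate is 0.5
w = [0.0, 0.0, 0.0]     # synaptic weights from three presynaptic inputs onto one cell

for _ in range(1000):
    cause = 1.0 if random.random() < 0.5 else 0.0     # hidden regularity in the sensorium
    pre = [
        cause,                                         # input 0 is driven by the cause
        cause,                                         # input 1 is also driven by the cause
        1.0 if random.random() < 0.5 else 0.0,         # input 2 fires independently
    ]
    post = cause                                       # postsynaptic cell is driven by the cause too
    # Covariance form of Hebb's rule: change each weight in proportion to the
    # product of pre- and post-synaptic deviations from their mean rates.
    w = [wi + eta * (xi - mean_rate) * (post - mean_rate) for wi, xi in zip(w, pre)]

print([round(wi, 2) for wi in w])
```

The plain product form (pre × post, with no mean subtraction) would also grow the independent synapse, because both rates are always non-negative. Subtracting the mean rates is what restricts growth to correlated inputs.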

- Biased competition and attention

- Precision is the inverse of the amplitude (variance) of random fluctuations, i.e. the reliability of prediction errors.

- How is precision encoded in the brain?

- In predictive coding, precision modulates the amplitude of prediction errors, so that prediction errors with high precision have a greater impact on units that encode conditional expectation.

- This means the precision corresponds to the synaptic gain on prediction error units.

- The obvious candidates for controlling gain are neuromodulators like dopamine and acetylcholine.

- Another candidate is synchronous presynaptic activity, which connects to correlation theory (Hebb’s rule). This fits with recent ideas about the role of synchronous activity in mediating attentional gain.

- In summary, the optimization of expected precision in terms of synaptic gain links attention to synaptic gain and synchronization.
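Biased competition through precision can be illustrated with a minimal sketch. It assumes a flat prior and two hypothetical sensory channels that report conflicting values. Under these assumptions, the estimate that cancels the precision-weighted prediction errors is the precision-weighted average of the inputs. Raising the gain on channel A, as a stand-in for attention, biases the estimate toward A:

```python
def estimate(observations, precisions):
    """Precision-weighted estimate of a single hidden cause.

    This is the value at which the precision-weighted prediction errors
    sum(pi_i * (y_i - mu)) cancel, i.e. the fixed point of predictive coding
    under a flat prior (an assumption for this sketch).
    """
    total = sum(precisions)
    return sum(p * y for p, y in zip(precisions, observations)) / total

# Hypothetical competing inputs: channel A reports 0.0, channel B reports 10.0.
observations = [0.0, 10.0]
for gain_a in [1.0, 4.0, 9.0]:
    mu = estimate(observations, [gain_a, 1.0])
    weighted_errors = [gain_a * (observations[0] - mu), 1.0 * (observations[1] - mu)]
    print(f"gain on A = {gain_a}: estimate = {mu:.2f}, weighted errors = {weighted_errors}")
```

With gains of 1, 4, and 9 on channel A, the estimate moves from 5.00 to 2.00 to 1.00, and the two weighted errors always cancel.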

- Neural darwinism and value learning

- Views the brain as a value-maximizing system.

- In the view of free energy, value is inversely related to surprise.

- The evolutionary value of a phenotype is the negative surprise averaged over all the states it experiences.

- So, the minimization of free energy ensures that agents spend most of their time in a small number of valuable states.

- This means that free energy is the complement of value, so increasing value means decreasing free energy.

- But what states are valuable?

- Valuable states are just states that the agent expects to frequent.

- The key theme here is that prior (heritable) expectations can label things as innately valuable (unsurprising).
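Value as negative surprise can be made concrete with a minimal sketch. It assumes two hypothetical phenotypes with made-up state-occupancy probabilities. Each state's value is its negative surprise (its log probability), and the average value over the states a phenotype occupies equals the negative entropy of its occupancy distribution:

```python
import math

def surprise(p):
    """Surprise (self-information) of a state with probability p, in nats."""
    return -math.log(p)

# Hypothetical occupancy probabilities for two phenotypes over the same four states.
phenotypes = {
    "specialist": [0.85, 0.05, 0.05, 0.05],   # spends most of its time in one state
    "generalist": [0.25, 0.25, 0.25, 0.25],   # spreads its time evenly
}

average_value = {}
for name, occupancy in phenotypes.items():
    state_values = [-surprise(p) for p in occupancy]   # value of each state = negative surprise
    # Average over the states actually visited; this equals minus the entropy of `occupancy`.
    average_value[name] = sum(p * v for p, v in zip(occupancy, state_values))
    print(name, [round(v, 2) for v in state_values], round(average_value[name], 3))
```

The phenotype concentrated in a few states has the higher (less negative) average value. This matches the claim that minimizing surprise keeps agents in a small set of valuable states.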

- Optimal control theory and game theory

- Optimal control and decision theory start with the notion of cost/utility and try to construct value functions of states, which are used to guide action.

- Free energy is an upper bound on expected cost, and optimal control theory assumes that action minimizes expected cost.

- This ensures that an agent only occupies a small set of attracting states.

- The constant theme in all of these theories is that the brain optimizes a free-energy bound on surprise or its complement.

- This manifests as perception or action.

- If the arguments of the free-energy principle are true, then the real challenge is to understand how it manifests in the brain.

The Expensive-Tissue Hypothesis: The Brain and the Digestive System in Human and Primate Evolution

- Brain tissue is metabolically expensive.

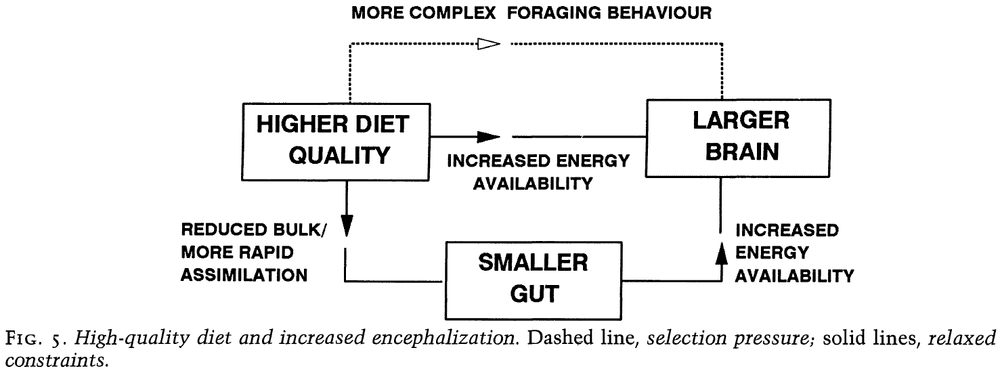

- Expensive-tissue hypothesis: the idea that the metabolic requirements of relatively large brains are offset by a corresponding reduction of the gut.

- Gut size is highly correlated with diet, and relatively small guts are compatible only with high-quality, easy-to-digest food.

- Whatever selected for a large brain, it couldn’t have evolved without a shift to a high-quality diet unless there was also a rise in metabolic rate.

- Therefore, the incorporation of more animal products (meat) into our diet was essential in the evolution of the large human brain.

- How do primates, and particularly humans, afford such large brains?

- Three problems with understanding how we can afford large brains

- Encephalization quotient.

- Our brain is large relative to our body size, so we lack the matching large body that would normally fund its energy needs.

- Metabolic cost of the brain.

- The mass-specific metabolic rate of the brain is around 11.2 W/kg, whereas the average metabolic rate of the human body is 1.25 W/kg.

- The majority of this energy is spent on ion pumps that are necessary to maintain the membrane potential.

- Energy is also used in the continual synthesis of neurotransmitters.

- This is made more difficult by the fact that the brain has no significant energy reserves (such as fat).

- No correlation between basal metabolic rate (BMR) and relative brain size.

- There’s no evidence of an increase in basal metabolism sufficient to account for the additional metabolic expenditure of the enlarged brain.


- Where does the energy come from to fuel our large brain?

- One possible answer is that the increased energy demands of a larger brain are compensated/offset by a reduction in the metabolic rates of other tissues.

- E.g. A reduced average body temperature, freeing more energy for the brain.

- Another possible answer is that the expansion of the brain was associated with a compensated reduction in the mass of other organs in the body.

- E.g. The shrinking of the kidneys to power a larger brain.

- The heart, kidneys, and splanchnic organs (liver and gastro-intestinal tract) all make substantial contributions to overall BMR.

- In fact, the heart and kidneys have mass-specific metabolic rates that are higher than the brain.

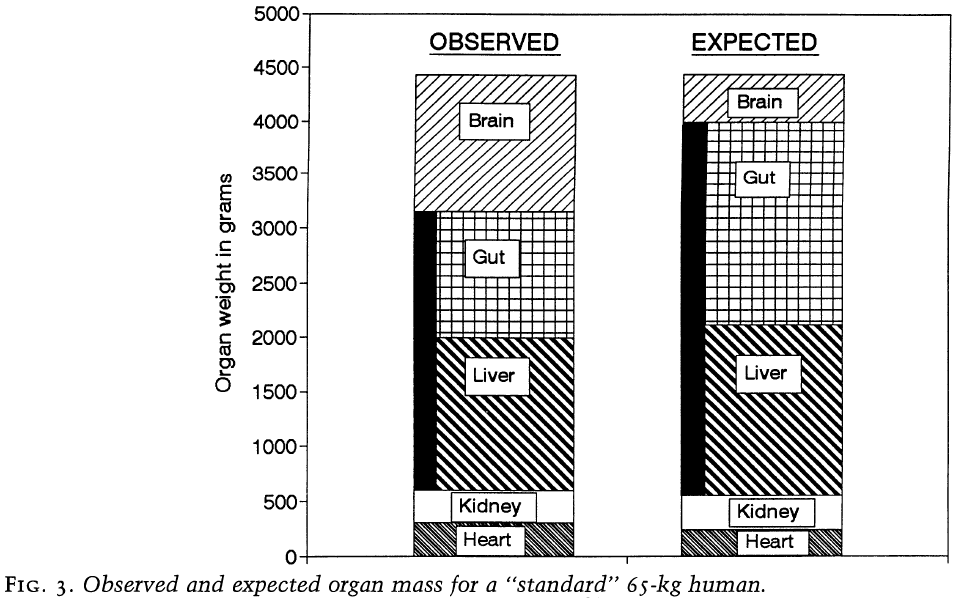

- To determine whether increased brain size came at the expense of other organs, compare the observed mass of each organ with the expected mass for an average primate of the same body mass.

- The combined mass is close to expected but the contributions of individual organs are very different.

- The human heart and kidneys are both close to their expected size, but the splanchnic organs are approximately 900 grams less than expected.

- Almost all of this reduction is due to a reduction in the gastro-intestinal tract, which is 60% of its expected size.

- Therefore, the increase in mass of the human brain appears to be balanced by an almost identical reduction in the size of the gastro-intestinal tract.

- It should be noted that these are size relationships rather than metabolic relationships.

- Whether the energetic savings due to reduced gut mass helped the expansion of the brain depends on the relative metabolic rates of the two tissues.

- The reduction in gut size saves around 9.5 W.

- The energy savings from the decreased gut is approximately the same as the additional cost of the larger brain.

- So, if the changes in the proportions of the two organs were correlated evolutionary events, there’s no reason the BMRs of hominids would have been elevated above that of a typical primate.
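The energy figures quoted above can be checked with back-of-envelope arithmetic. The sketch below uses only numbers from these notes. Treating the whole ~900 g splanchnic shortfall as gut is a simplifying assumption, so the implied gut rate is rough:

```python
# Back-of-envelope arithmetic using only the figures quoted in these notes.
brain_rate = 11.2        # W/kg, mass-specific metabolic rate of brain tissue
body_rate = 1.25         # W/kg, average mass-specific metabolic rate of the human body
gut_savings = 9.5        # W, energy saved by the reduced gut
gut_reduction = 0.9      # kg, approximate splanchnic shortfall (assumed to be all gut)

ratio = brain_rate / body_rate                      # how much costlier brain is per kg
implied_gut_rate = gut_savings / gut_reduction      # W/kg implied by the two gut figures
affordable_brain_mass = gut_savings / brain_rate    # kg of extra brain the savings could fund

print(f"brain tissue costs {ratio:.1f}x the body average per kg")
print(f"implied gut rate ~ {implied_gut_rate:.1f} W/kg")
print(f"savings fund ~ {affordable_brain_mass:.2f} kg of extra brain tissue")
```

The script prints three results: a ratio of about 9.0, an implied gut rate of about 10.6 W/kg, and roughly 0.85 kg of extra brain tissue that 9.5 W could pay for.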

- This analysis implies that there’s been a coevolution between brain size and gut size in humans and other primates.

- This assumes that primates were not balancing their energy budgets in other ways, such as opting for a relatively high BMR or altering the size and/or metabolic requirements of other tissues.

- A higher BMR would require more energy and, unless environmental conditions were unusual, more time devoted to feeding; it would also put the animal into more intense competition for limited food resources.

- Further, it’s unlikely that the size of other metabolically expensive tissues (liver, heart, kidney) could be altered substantially.

- Under normal conditions, the brain relies almost entirely on glucose as its fuel, and since it has no energy reserves, it must get a continual supply from the blood.

- If blood glucose falls below normal concentrations for even a short period of time, it can result in significant dysfunction of the CNS.

- A major role of the liver is to replenish and maintain these levels, both by releasing glucose from glycogen stores and by synthesizing it from other substrates (gluconeogenesis).

- Also, the energy demands imposed by increased brain size can’t exceed the capacity of the liver to store and ensure the uninterrupted supply of glucose necessary to fuel this metabolism.

- Since the heart is mostly made up of rhythmically contracting cardiac muscle, it’s difficult to imagine how a significant reduction in the size of this organ could take place without compromising its function.

- It’s also difficult to see how the heart could have shrunk, since our ancestors performed persistence hunting, which requires high cardiovascular performance.

- The kidneys probably weren’t reduced either, since that would make urine more dilute (most of their energy is spent on water reabsorption) and would require hominids to drink more.

- If the hypothesis of coevolution is correct, then understanding how primates can afford large brains means we must understand how they have small guts.

- Gut size is related to body size and is strongly determined by diet (bulk and digestibility of food).

- Large amounts of low-digestibility food require large guts characterized by fermenting chambers.

- E.g. Cows have a four-chambered stomach to ferment large amounts of grass.

- Conversely, diets with smaller amounts of highly digestible food require smaller guts.

- E.g. Carnivores such as wolves.

- The link between gut size and diet also holds for primates.

- There’s also a close relationship between relative gut size and relative brain size.

- Animals with large guts have small brains, while animals with small guts have large brains.

- However, the direction of causation is unclear.

- Did a larger brain enable more complex feeding strategies, leading to smaller guts? Or did smaller guts give more energy to the larger brain? Both?

- Cooking may have been an important factor in increasing hominid brain size.

- Cooking is a technological way of externalizing part of the digestive process, and it also removes toxins.

- Summary

- A higher quality diet was probably associated with a reduction in gut size and energy cost.

- If this is correct, then the enlargement of the hominid brain was able to continue without placing any additional demands on their overall energy budget.

- More complex feeding strategies may have also pressured the brain to increase in size.

- Further increases may have been due to the introduction of cooking to make food more digestible.

- There’s no correlation between basal metabolic rate and brain size in humans and other large-brained mammals.

- Expensive-tissue hypothesis: a reduction in the size of one energy expensive organ can allow another organ to increase in energy expenditure.

- In the case of hominids, we traded gut size for brain size.

- Other important points

- Diet can be inferred from the size of the gut and not just teeth and jaws.

- The evolution of any organ of the body can’t be studied in isolation.

What the Frog’s Eye Tells the Frog’s Brain

- Paper analyzed the activity of single fibers in the optic nerve of a frog.

- The goal is to find what stimulus causes the largest activity in the fiber and what aspect of the stimulus is responsible.

- Each fiber isn’t connected to a few rods and cones but to very many over an area.

- Results show that it isn’t the light intensity that causes the largest activity, but rather the pattern of local variation of intensity that’s the exciting factor.

- Authors found four types of fibers, each type concerned with a different pattern and each type is uniformly distributed over the whole retina.

- Thus, there are four distinct, parallel, distributed channels through which the frog’s eye informs the frog’s brain about the visual image, in terms of local pattern independent of average illumination.
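Responding to local pattern rather than absolute intensity can be illustrated with a toy detector. This sketch is not the paper's model. It assumes a hypothetical center-surround unit that compares the log intensity at a point with the mean log intensity of its two neighbors. Because of the log, dimming the whole scene by a constant factor leaves every response unchanged:

```python
import math

def local_contrast(image, i):
    """Toy center-surround unit: log intensity at i minus mean log intensity of its two neighbors."""
    center = math.log(image[i])
    surround = (math.log(image[i - 1]) + math.log(image[i + 1])) / 2
    return center - surround

# Hypothetical 1-D luminance profile with a small bright spot at index 3.
scene = [10.0, 10.0, 10.0, 40.0, 10.0, 10.0, 10.0]
dim = [x * 0.1 for x in scene]      # the same scene under 10x dimmer illumination

for i in range(1, len(scene) - 1):
    # Responses are identical because a uniform scale factor cancels in the log difference.
    print(i, round(local_contrast(scene, i), 3), round(local_contrast(dim, i), 3))
```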

- Reasons to study the frog

- Uniformity of the retina.

- Normal lack of eye and head movements except to stabilize the retinal image.

- Relative simplicity of the eye to brain connection.

- The frog doesn’t seem to see the details of the stationary parts of the world around it.

- E.g. A frog will starve to death surrounded by food if the food isn’t moving.

- A frog’s choice of food is only determined by size and movement.

- The frog retina has about 1 million receptors, 2.5 to 3.5 million connecting neurons, and half a million ganglion cells.

- A ganglion cell receives information from thousands of receptors.

- Clearly, such an arrangement wouldn’t allow for good resolution if the retina maps an image in terms of light intensity point-by-point.

- The amount of overlap of adjacent ganglion cells is enormous.
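The counts above imply massive overlap. The back-of-envelope sketch below assumes a hypothetical 1,000 receptors per ganglion cell as a stand-in for "thousands". It also treats "receives information from" as a simple count of input relationships. With those assumptions, it shows how many receptive fields each receptor must fall into on average:

```python
# Rough overlap estimate for the frog retina, using the counts quoted above.
receptors = 1_000_000
ganglion_cells = 500_000
receptors_per_ganglion_cell = 1_000   # hypothetical stand-in for "thousands"

# Each receptor-to-ganglion-cell input relationship is counted once from each side,
# so the average number of ganglion cells that each receptor feeds is:
ganglion_cells_per_receptor = ganglion_cells * receptors_per_ganglion_cell / receptors

print(receptors / ganglion_cells)      # average receptors per ganglion cell with no overlap: 2.0
print(ganglion_cells_per_receptor)     # → 500.0
```

There are only two receptors per ganglion cell on average, yet a thousand receptors feed each ganglion cell. So every receptor must contribute to roughly 500 overlapping receptive fields.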

- Review of receptive fields (OFF, ON, ON-OFF).

- For all three types of receptive fields, the sensitivity is greatest at the center of each field.

- An ON-OFF cell seems to be measuring inequality of illumination within its receptive field and is sensitive to movement within the field.

- The optic nerve looks at the image on the retina through three distributed channels (one per receptive-field type).

- In any channel, the overlap of individual receptive fields is very great.

- So, for any visual event, the retina tells the brain

- The OFF channel tells how much dimming of light has occurred and where.

- The ON-OFF channel tells where the boundaries of lighted areas are moving.

- The ON channel tells where brightening has occurred.

- To an unchanging visual pattern, the optic nerve should be fairly silent after an initial burst to communicate the visual pattern.
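The three channels can be caricatured with a toy model. This sketch assumes that each channel responds to the frame-to-frame change in luminance at one retinal location: ON to brightening, OFF to dimming, and ON-OFF to either. It ignores space and movement, and `channel_responses` is a hypothetical helper:

```python
def channel_responses(luminance):
    """Toy transient channels driven by frame-to-frame luminance change (an assumption,
    not the paper's model): ON fires on brightening, OFF on dimming, ON-OFF on either."""
    on, off, on_off = [], [], []
    for prev, curr in zip(luminance, luminance[1:]):
        change = curr - prev
        on.append(max(0.0, change))      # brightening only
        off.append(max(0.0, -change))    # dimming only
        on_off.append(abs(change))       # any change
    return on, off, on_off

# Hypothetical luminance at one retinal location: steady, brighter, steady, dimmer, steady.
luminance = [1.0, 1.0, 3.0, 3.0, 3.0, 2.0, 2.0]
on, off, on_off = channel_responses(luminance)
print("ON:    ", on)
print("OFF:   ", off)
print("ON-OFF:", on_off)
```

All three channels stay silent during the steady segments. This is consistent with the optic nerve being fairly quiet for an unchanging image.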

- The resolution of the OFF and ON channels is about the size of a receptive field, meaning the resolution is blurry.